Artificial General Intelligence (AGI)

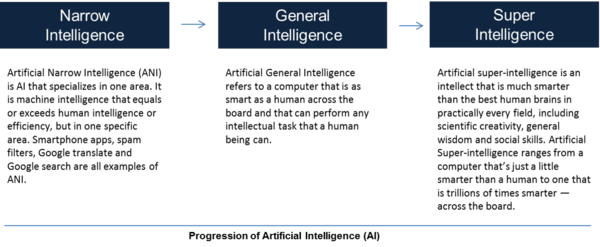

AGI (Artificial General Intelligence) is a term used to describe truly intelligent systems. Such systems possess the ability to think generally and to make decisions that do not depend on any specific prior training; their decisions are based on what they have learned on their own. Designing such systems is very difficult given the limits of today’s technology, but so-called “Partial AGI” can be built.[1]

AGI is a single intelligence or algorithm that can learn multiple tasks and exhibits positive transfer when doing so, sometimes called meta-learning. During meta-learning, the acquisition of one skill enables the learner to pick up another new skill faster because it applies some of its previous “know-how” to the new task. In other words, one learns how to learn, and can generalize that to acquiring new skills, the way humans do. As it currently exists, AI shows little ability to transfer learning to new tasks. Typically, it must be trained anew from scratch. For instance, the same neural network that recommends Netflix shows to you cannot use that learning to suddenly start making meaningful grocery recommendations. Even these single-instance “narrow” AIs can be impressive, such as IBM’s Watson or Google’s self-driving car tech. However, they fall far short of an artificial general intelligence, which could conceivably unlock the kind of recursive self-improvement variously referred to as the “intelligence explosion” or “singularity.”[2]

source: Octane AI
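As a rough illustration of the positive transfer described above, the sketch below fits a one-parameter linear model to two related toy tasks with plain gradient descent. It counts the update steps needed when starting from scratch versus starting from the weight already learned on the first task. This is simple warm-starting rather than a meta-learning algorithm, and the tasks, learning rate, tolerance, and the `fit` helper are all assumptions made for the example.

```python
def fit(xs, ys, w0, lr=0.05, tol=1e-3, max_steps=10_000):
    """Gradient descent on mean squared error for y ~ w * x.

    Returns the final weight and the number of update steps taken.
    """
    w = w0
    for step in range(max_steps):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        if abs(grad) < tol:
            return w, step
        w -= lr * grad
    return w, max_steps


xs = [1.0, 2.0, 3.0]
task_a = [3.0 * x for x in xs]  # hypothetical task A: slope 3.0
task_b = [3.2 * x for x in xs]  # hypothetical related task B: slope 3.2

# Learn task A from scratch, then learn task B twice:
# once from a blank start and once reusing what task A taught us.
w_a, _ = fit(xs, task_a, w0=0.0)
_, steps_scratch = fit(xs, task_b, w0=0.0)
_, steps_transfer = fit(xs, task_b, w0=w_a)

print("steps from scratch:", steps_scratch)
print("steps with transfer:", steps_transfer)
```

Because the two tasks are similar, the warm-started run begins closer to the solution and needs fewer updates. If the tasks were unrelated, the reused weight would offer no such head start, which is the situation the Netflix and grocery example describes.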

Artificial General Intelligence Approaches

Approaches to Artificial General Intelligence[3]

Artificial General Intelligence (AGI), also known as Strong AI, distinguishes itself from the more general term AI by specifically having as its goal human-level or greater intelligence. The approaches below range from neural ones, sometimes called "connectionist", which try to imitate the human brain in some way, to symbolic ones and combinations of the two.

- Neuroscience: The neural approaches inspired by neuroscience try to faithfully reproduce the human brain.

- Artificial Neural Network: Artificial Neural Networks, while originally inspired by the human brain in the 1950s, now continue to refer to that basic original architecture and no longer have an explicit goal of accurately modeling the human brain. Most promising in this category are Neural Turing Machines (such as those being commercialized by DeepMind, acquired by Google). NTMs add an external memory, and thus persistent state, to conventional neural networks.

- Symbolic: The Cyc project has been under way since 1984, aiming to encode all of human common sense into a massive graph. A more recent, more comprehensive, and more ambitious project is OpenCog. OpenCog uses hypergraphs (graphs in which a single edge can connect more than two vertices), probabilistic reasoning, and a variety of specialized knowledge stores and algorithms.

- Neural/Symbolic Integration: Why settle for either the black box but adaptive approach of neural networks or the white box but less flexible symbolic approaches, when you can have both? It's quite a challenge and there have been a few different projects meeting various levels of success in various domains. LIDA is one example of this genre.
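The hypergraph idea mentioned in the Symbolic item can be made concrete with a small data structure. The sketch below is a minimal illustration only: the `Hyperedge` and `Hypergraph` classes and their methods are hypothetical names invented here, and they do not reflect OpenCog's actual storage or API.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Hyperedge:
    """A labeled edge that may connect any number of vertices."""
    label: str
    vertices: frozenset


@dataclass
class Hypergraph:
    edges: list = field(default_factory=list)

    def add_edge(self, label, *vertices):
        """Add one hyperedge joining all the given vertices."""
        edge = Hyperedge(label, frozenset(vertices))
        self.edges.append(edge)
        return edge

    def incident(self, vertex):
        """Return every hyperedge that touches the vertex."""
        return [e for e in self.edges if vertex in e.vertices]

    def neighbors(self, vertex):
        """Return all vertices sharing at least one hyperedge with the vertex."""
        found = set()
        for edge in self.incident(vertex):
            found |= edge.vertices
        found.discard(vertex)
        return found


g = Hypergraph()
# One three-way relation stored as a single edge; an ordinary graph
# would have to split it into several pairwise edges.
give = g.add_edge("gives", "alice", "bob", "book")
g.add_edge("owns", "bob", "car")

print(sorted(g.neighbors("bob")))
```

Storing the vertices as an unordered set keeps the sketch short but discards the role each vertex plays (giver, receiver, object); a fuller representation would keep an ordered or labeled argument list.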

Artificial General Intelligence - Strategies, Techniques and Projects

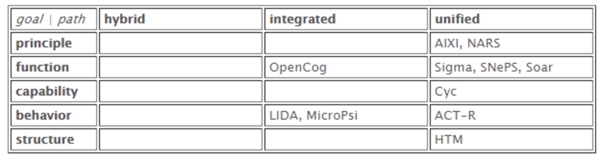

Artificial General Intelligence - Strategies and Techniques along with Representative AGI Projects[4]

Strategies and Techniques

On one hand, the ultimate goal of AGI is to reproduce intelligence as a whole, while on the other hand, engineering practice must be step-by-step. To resolve this dilemma, three overall strategies have been proposed:

- Hybrid Approach: To develop individual functions first (using different theories and techniques), then to connect them together.

  - Argument: "(AA)AI: More than the Sum of Its Parts", Ronald Brachman

  - Difficulty: Compatibility of the theories and techniques

- Integrated Approach: To design an architecture first, then to design its modules (using various techniques) accordingly.

  - Argument: "Cognitive Synergy: A Universal Principle for Feasible General Intelligence?", Ben Goertzel

  - Difficulty: Isolation, specification, and coordination of the functions

- Unified Approach: Using a single technique to start from a core system, then to extend and augment it incrementally.

  - Argument: "Toward a Unified Artificial Intelligence", Pei Wang

  - Difficulty: Versatility and extensibility of the core technique

Representative AGI Projects

The following projects were selected to represent current AGI research because each of them meets three criteria:

- It is clearly oriented to AGI (that is why IBM's Watson and Google's AlphaGo are not included)

- It is still very active (that is why Pollock's OSCAR and Brooks' Cog are no longer included)

- It has ample publications on technical details (that is why many recent AGI projects are not included yet)

The projects are listed in alphabetical order below:

- ACT-R: ACT-R is a cognitive architecture: a theory for simulating and understanding human cognition. Researchers working on ACT-R strive to understand how people organize knowledge and produce intelligent behavior. As the research continues, ACT-R evolves ever closer to a system which can perform the full range of human cognitive tasks: capturing in great detail the way we perceive, think about, and act on the world. On the exterior, ACT-R looks like a programming language; however, its constructs reflect assumptions about human cognition. These assumptions are based on numerous facts derived from psychology experiments. Like a programming language, ACT-R is a framework: for different tasks (e.g., Tower of Hanoi, memory for text or for lists of words, language comprehension, communication, aircraft control), researchers create models (aka programs) that are written in ACT-R and that, besides incorporating ACT-R's view of cognition, add their own assumptions about the particular task. These assumptions can be tested by comparing the results of the model with the results of people doing the same tasks. ACT-R is a hybrid cognitive architecture. Its symbolic structure is a production system; the subsymbolic structure is represented by a set of massively parallel processes that can be summarized by a number of mathematical equations. The subsymbolic equations control many of the symbolic processes. For instance, if several productions match the state of the buffers, a subsymbolic utility equation estimates the relative cost and benefit associated with each production and decides to select for execution the production with the highest utility. Similarly, whether (or how fast) a fact can be retrieved from declarative memory depends on subsymbolic retrieval equations, which take into account the context and the history of usage of that fact. Subsymbolic mechanisms are also responsible for most learning processes in ACT-R.

- AIXI: An important observation is that most, if not all known facets of intelligence can be formulated as goal driven or, more precisely, as maximizing some utility function. Sequential decision theory formally solves the problem of rational agents in uncertain worlds if the true environmental prior probability distribution is known. Solomonoff's theory of universal induction formally solves the problem of sequence prediction for unknown prior distribution. We combine both ideas and get a parameter-free theory of universal Artificial Intelligence. We give strong arguments that the resulting AIXI model is the most intelligent unbiased agent possible. The major drawback of the AIXI model is that it is uncomputable, ... which makes an implementation impossible. To overcome this problem, we constructed a modified model AIXItl, which is still effectively more intelligent than any other time t and length l bounded algorithm.

- Cyc: Vast amounts of commonsense knowledge, representing human consensus reality, would need to be encoded to produce a general AI system. In order to mimic human reasoning, Cyc would require background knowledge regarding science, society and culture, climate and weather, money and financial systems, health care, history, politics, and many other domains of human experience. The Cyc Project team expected to encode at least a million facts spanning these and many other topic areas. The Cyc knowledge base (KB) is a formalized representation of a vast quantity of fundamental human knowledge: facts, rules of thumb, and heuristics for reasoning about the objects and events of everyday life. The medium of representation is the formal language CycL. The KB consists of terms -- which constitute the vocabulary of CycL -- and assertions which relate those terms. These assertions include both simple ground assertions and rules.

- HTM: At the core of every Grok model is the Cortical Learning Algorithm (CLA), a detailed and realistic model of a layer of cells in the neocortex. Contrary to popular belief, the neocortex is not a computing system, it is a memory system. When you are born, the neocortex has structure but virtually no knowledge. You learn about the world by building models of the world from streams of sensory input. From these models, we make predictions, detect anomalies, and take actions. In other words, the brain can best be described as a predictive modeling system that turns predictions into actions. Three key operating principles of the neocortex are described below: sparse distributed representations, sequence memory, and on-line learning.

- LIDA: Implementing and fleshing out a number of psychological and neuroscience theories of cognition, the LIDA conceptual model aims at being a cognitive "theory of everything." With modules or processes for perception, working memory, episodic memories, "consciousness," procedural memory, action selection, perceptual learning, episodic learning, deliberation, volition, and non-routine problem solving, the LIDA model is ideally suited to provide a working ontology that would allow for the discussion, design, and comparison of AGI systems. The LIDA technology is based on the LIDA cognitive cycle, a sort of "cognitive atom." The more elementary cognitive modules play a role in each cognitive cycle. Higher-level processes are performed over multiple cycles. The LIDA architecture represents perceptual entities, objects, categories, relations, etc., using nodes and links .... These serve as perceptual symbols acting as the common currency for information throughout the various modules of the LIDA architecture.

- MicroPsi: The MicroPsi agent architecture describes the interaction of emotion, motivation and cognition of situated agents, mainly based on the Psi theory of Dietrich Dörner. The Psi theory addresses emotion, perception, representation and bounded rationality, but being formulated within psychology, has had relatively little impact on the discussion of agents within computer science. MicroPsi is a formulation of the original theory in a more abstract and formal way, at the same time enhancing it with additional concepts for memory, building of ontological categories and attention. The agent framework uses semantic networks, called node nets, that are a unified representation for control structures, plans, sensory and action schemas, Bayesian networks and neural nets. Thus it is possible to set up different kinds of agents on the same framework.

- NARS: What makes NARS different from conventional reasoning systems is its ability to learn from its experience and to work with insufficient knowledge and resources. NARS attempts to uniformly explain and reproduce many cognitive facilities, including reasoning, learning, planning, etc., so as to provide a unified theory, model, and system for AI as a whole. The ultimate goal of this research is to build a thinking machine. The development of NARS takes an incremental approach consisting of four major stages. At each stage, the logic is extended to give the system a more expressive language, a richer semantics, and a larger set of inference rules; the memory and control mechanism are then adjusted accordingly to support the new logic. In NARS the notion of "reasoning" is extended to represent a system's ability to predict the future according to the past, and to satisfy unlimited resource demands using a limited resource supply, by flexibly combining justifiable micro steps into macro behaviors in a domain-independent manner.

- OpenCog: OpenCog, as a software framework, aims to provide research scientists and software developers with a common platform to build and share artificial intelligence programs. The long-term goal of OpenCog is acceleration of the development of beneficial AGI. OpenCogPrime is a specific AGI design being constructed within the OpenCog framework. It comes with a fairly detailed, comprehensive design covering all aspects of intelligence. The hypothesis is that if this design is fully implemented and tested on a reasonably-sized distributed network, the result will be an AGI system with general intelligence at the human level and ultimately beyond. While an OpenCogPrime based AGI system could do a lot of things, we are initially focusing on using OpenCogPrime to control simple virtual agents in virtual worlds. We are also experimenting with using it to control a Nao humanoid robot.

- Sigma: The goal of this effort is to develop a sufficiently efficient, functionally elegant, generically cognitive, grand unified, cognitive architecture in support of virtual humans (and hopefully intelligent agents/robots – and even a new form of unified theory of human cognition – as well). The focus is on the development of the Sigma (∑) architecture, which explores the graphical architecture hypothesis that progress at this point depends on blending what has been learned from over three decades worth of independent development of cognitive architectures and graphical models, a broadly applicable state-of-the-art formalism for constructing intelligent mechanisms. The result is a hybrid (discrete+continuous) mixed (symbolic+probabilistic) approach that has yielded initial results across memory and learning, problem solving and decision making, mental imagery and perception, speech and natural language, and emotion and attention.

- SNePS: The long term goal of the SNePS Research Group is to understand the nature of intelligent cognitive processes by developing and experimenting with computational cognitive agents that are able to use and understand natural language, reason, act, and solve problems in a wide variety of domains. The SNePS knowledge representation, reasoning, and acting system has several features that facilitate metacognition in SNePS-based agents. The most prominent is the fact that propositions are represented in SNePS as terms rather than as logical sentences. The effect is that propositions can occur as arguments of propositions, acts, and policies without limit, and without leaving first-order logic.

- Soar: The ultimate in intelligence would be complete rationality which would imply the ability to use all available knowledge for every task that the system encounters. Unfortunately, the complexity of retrieving relevant knowledge puts this goal out of reach as the body of knowledge increases, the tasks are made more diverse, and the requirements in system response time more stringent. The best that can be obtained currently is an approximation of complete rationality. The design of Soar can be seen as an investigation of one such approximation. For many years, a secondary principle has been that the number of distinct architectural mechanisms should be minimized. Through Soar 8, there has been a single framework for all tasks and subtasks (problem spaces), a single representation of permanent knowledge (productions), a single representation of temporary knowledge (objects with attributes and values), a single mechanism for generating goals (automatic subgoaling), and a single learning mechanism (chunking). We have revisited this assumption as we attempt to ensure that all available knowledge can be captured at runtime without disrupting task performance. This is leading to multiple learning mechanisms (chunking, reinforcement learning, episodic learning, and semantic learning), and multiple representations of long-term knowledge (productions for procedural knowledge, semantic memory, and episodic memory). Two additional principles that guide the design of Soar are functionality and performance. Functionality involves ensuring that Soar has all of the primitive capabilities necessary to realize the complete suite of cognitive capabilities used by humans, including, but not limited to reactive decision making, situational awareness, deliberate reasoning and comprehension, planning, and all forms of learning. 
Performance involves ensuring that there are computationally efficient algorithms for performing the primitive operations in Soar, from retrieving knowledge from long-term memories, to making decisions, to acquiring and storing new knowledge.
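The conflict-resolution step described in the ACT-R entry, where several productions match the buffers and the one with the highest utility fires, can be sketched as follows. This is an illustrative toy rather than ACT-R itself: the `Production` class, the buffer dictionary, and all numeric values are hypothetical, and the utility is assumed to take the expected-gain form U = P * G - C (probability of success times goal value, minus cost) found in older ACT-R literature. Current ACT-R versions also add noise and learn utilities from reward, which this sketch omits.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Production:
    name: str
    matches: Callable[[dict], bool]  # condition tested against the buffer state
    p_success: float                 # assumed probability of reaching the goal
    cost: float                      # assumed cost of firing the production


def utility(prod: Production, goal_value: float) -> float:
    """Assumed expected-gain form: U = P * G - C."""
    return prod.p_success * goal_value - prod.cost


def select(productions, buffers, goal_value=20.0) -> Optional[Production]:
    """Return the matching production with the highest utility, if any."""
    candidates = [p for p in productions if p.matches(buffers)]
    if not candidates:
        return None
    return max(candidates, key=lambda p: utility(p, goal_value))


# Hypothetical productions for a toy addition task.
productions = [
    Production("retrieve-fact", lambda b: b.get("goal") == "add", 0.90, 1.0),
    Production("count-up", lambda b: b.get("goal") == "add", 0.99, 5.0),
    Production("read-word", lambda b: b.get("goal") == "read", 0.95, 0.5),
]

chosen = select(productions, {"goal": "add"})
print(chosen.name if chosen else "no production matches")
```

With these made-up numbers, fact retrieval is slightly less reliable than counting but much cheaper, so it wins the comparison. Changing the cost or success estimates changes which production fires, which is how the subsymbolic level steers the symbolic one.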

source: Pei Wang

The above AGI projects are roughly classified in the following table, according to the type of their answers on their research goals and technical paths.

Controversies and Dangers of Artificial General Intelligence

Understanding the Controversies and Dangers of Artificial General Intelligence[5]

- Feasibility: Opinions vary both on whether and when artificial general intelligence will arrive. At one extreme, AI pioneer Herbert A. Simon wrote in 1965: "machines will be capable, within twenty years, of doing any work a man can do". However, this prediction failed to come true. Microsoft co-founder Paul Allen believed that such intelligence is unlikely in the 21st century because it would require "unforeseeable and fundamentally unpredictable breakthroughs" and a "scientifically deep understanding of cognition". Writing in The Guardian, roboticist Alan Winfield claimed the gulf between modern computing and human-level artificial intelligence is as wide as the gulf between current space flight and practical faster-than-light spaceflight. AI experts' views on the feasibility of AGI wax and wane, and may have seen a resurgence in the 2010s. Four polls conducted in 2012 and 2013 suggested that the median guess among experts for when they'd be 50% confident AGI would arrive was 2040 to 2050, depending on the poll, with the mean being 2081. It is also interesting to note 16.5% of the experts answered with "never" when asked the same question but with a 90% confidence instead.

- Potential threat to human existence: The creation of artificial general intelligence may have repercussions so great and so complex that it may not be possible to forecast what will come afterwards. Thus the event in the hypothetical future of achieving strong AI is called the technological singularity, because theoretically one cannot see past it. But this has not stopped philosophers and researchers from guessing what the smart computers or robots of the future may do, including forming a utopia by being our friends or overwhelming us in an AI takeover. The latter potentiality is particularly disturbing as it poses an existential risk for mankind.

- Self-replicating machines: Smart computers or robots would be able to design and produce improved versions of themselves. A growing population of intelligent robots could conceivably out-compete inferior humans in job markets, in business, in science, in politics (pursuing robot rights), and technologically, sociologically (by acting as one), and militarily. Even nowadays, many jobs have already been taken by intelligent machines. For example, robots for homes, health care, hotels, and restaurants have automated many parts of our lives: virtual bots turn customer service into self-service, big data AI applications are used to replace portfolio managers, and social robots such as Pepper are used to replace human greeters for customer service purposes.

- Emergent superintelligence: If research into strong AI produced sufficiently intelligent software, it would be able to reprogram and improve itself – a feature called "recursive self-improvement". It would then be even better at improving itself, and would probably continue doing so in a rapidly increasing cycle, leading to an intelligence explosion and the emergence of superintelligence. Such an intelligence would not have the limitations of human intellect, and might be able to invent or discover almost anything. Hyper-intelligent software might not necessarily decide to support the continued existence of mankind, and might be extremely difficult to stop. This topic has also recently begun to be discussed in academic publications as a real source of risks to civilization, humans, and planet Earth. One proposal to deal with this is to make sure that the first generally intelligent AI is a friendly AI that would then endeavor to ensure that subsequently developed AIs were also nice to us. But friendly AI is harder to create than plain AGI, and therefore it is likely, in a race between the two, that non-friendly AI would be developed first. Also, there is no guarantee that friendly AI would remain friendly, or that its progeny would also all be good.

Artificial General Intelligence Society

The Artificial General Intelligence Society is a nonprofit organization with the following goals:[6]

- to promote the study of artificial general intelligence (AGI), and the design of AGI systems.

- to facilitate co-operation and communication among those interested in the study and pursuit of AGI

- to hold conferences and meetings for the communication of knowledge concerning AGI

- to produce publications regarding AGI research and development

- to publicize and disseminate by other means knowledge and views concerning AGI

See Also

Artificial Intelligence (AI)

Artificial Neural Network (ANN)

Human Computer Interaction (HCI)

Human-Centered Design (HCD)

Machine-to-Machine (M2M)

Machine Learning

Cognitive Computing

Predictive Analytics

Data Analytics

Data Analysis

Big Data

References

- [1] Definition: What does Artificial General Intelligence (AGI) Mean?, Towards Data Science

- [2] What is Artificial General Intelligence (AGI)?, ExtremeTech

- [3] Four Approaches to Artificial General Intelligence, Michael Malak

- [4] Artificial General Intelligence - Strategies and Techniques, Pei Wang

- [5] Controversies and Dangers of Artificial General Intelligence, Wikipedia

- [6] The Artificial General Intelligence Society, agi-society.org

Further Reading

- The Path to Artificial General Intelligence Wilder Rodrigues

- What is artificial general intelligence? Nick Heath

- This is when AI’s top researchers think artificial general intelligence will be achieved James Vincent