Data Virtualization

What is Data Virtualization?

Data Virtualization is a software approach that allows organizations to access and manipulate data from multiple sources as if it were a single, unified data source. It enables users to access data in real-time, without having to physically move or replicate the data, thus reducing data redundancy and enhancing data consistency. This approach provides a virtual layer that integrates data from various sources and allows users to interact with it through a unified interface, providing seamless access to the data they need. Data virtualization helps to optimize data management and analysis, which ultimately leads to better decision-making and improved business outcomes.

Data virtualization presents a modern approach to data integration. Unlike ETL solutions, which replicate data, data virtualization leaves the data in source systems, simply exposing an integrated view of all the data to data consumers. As business users drill down into reports, data virtualization fetches the data in real-time from the underlying source systems. Data virtualization proves that connecting to data is far superior to collecting it.

Other Definitions of Data Virtualization Include:

- A single database view/s allowing access to distributed databases and multiple heterogeneous data stores. (DAMA DMBoK2)

- “A technology that delivers information from various data sources, including big data sources such as Hadoop and distributed data stores in real-time and near-real time.” (Boston University)

- Abstraction of IT data resources “that masks the physical nature and boundaries of those resources from resource users.” (Gartner)

- A technical “approach by which data access can be easily centralized, standardized, and secured across the enterprise, no matter the location, design or platform of the data source.” (Indiana University)

- “The process of aggregating data from different sources of information to develop a single, logical, and virtual view of information so that it can be accessed by front-end solutions such as applications, dashboards, and portals without having to know the data’s exact storage location.” (Techopedia)

Capabilities Needed in a Data Virtualization System[1]

Four components are needed to meet urgent business needs with data virtualization

- Agile design and development: You need to be able to introspect available data, discover hidden relationships, model individual views/services, validate views/services, and modify as required. These capabilities automate difficult work, improve time to solution, and increase object reuse.

- High-performance runtime: The application invokes a request, the optimized query executes a single statement, and the result is delivered in proper form. This capability allows for up-to-the-minute data, optimized performance, and less replication.

- Use of caching when appropriate: Caching essential data, the application invokes a request, an optimized query (leveraging cached data) executes, and data is delivered in the proper form. This capability boosts performance, avoids network constraints, and allows 24x7 availability.

- Business directory/catalog to make data easy to find: This capability includes features for search and data categorization, browsing all available data, selecting from a directory of views, and collaborating with IT to improve data quality and usefulness. This capability empowers business users with more data, improves IT/business user effectiveness, and enables data virtualization to be more widely adopted.

The Uses of Data Virtualization[2]

With real-time access to holistic information, businesses across many industries can efficiently execute complex processes.

- Analyze current business performance against previous years.

- Comply with regulations that require the traceability of historical data.

- Search for and discover interrelated data.

- Modernize business applications while replacing legacy systems.

- Migrate from on-premises applications to cloud applications.

- Monetize data by delivering it as a service.

How Data Virtualization Works[3]

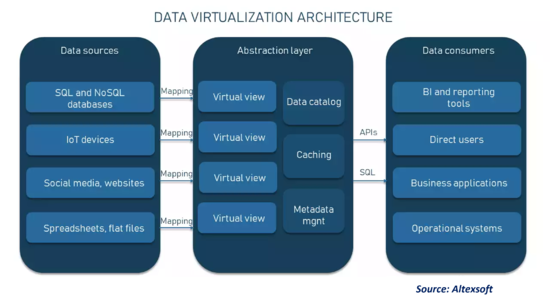

In a nutshell, data virtualization happens via middleware which is nothing but a unified, virtual data access layer built on top of many data sources. This layer presents information, regardless of its type and model, as a single virtual (or logical) view. It all happens on demand and in real-time. Let’s elaborate on the data virtualization architecture to get the complete picture of how things work here. Typically, there are three building blocks comprising the virtualization structure, namely

- Connection layer — a set of connectors to data sources in real time;

- Abstraction layer — services to present, manage, and use logical views of the data; and

- Consumption layer — a range of consuming tools and applications requesting abstract data.

- Connection layer: This layer is responsible for accessing the information scattered across multiple source systems, containing both structured and unstructured data, with the help of connectors and communication protocols. Data virtualization platforms can link to different data sources including

- SQL and NoSQL databases like MySQL, Oracle, and MongoDB;

- CRMs and ERPs;

- cloud data warehouses like Amazon Redshift or Google Big Query;

- data lakes and enterprise data warehouses;

- streaming sources like IoT and IoMT devices;

- SaaS (Software-as-a-Service) applications like Salesforce;

- social media platforms and websites;

- spreadsheets and flat files like CSV, JSON, and XML;

- big data systems like Hadoop, and many more.

When connecting, data virtualization loads metadata (details of the source data) and physical views if available. It maps metadata and semantically similar data assets from different autonomous databases to a common virtual data model or schema of the abstraction layer. Mappings define how the information from each source system should be converted and reformatted for integration needs.

- Abstraction layer: The cornerstone of the whole virtualization framework is the abstraction (sometimes referred to as virtual or semantic) layer that acts as the bridge between all data sources on one side and all business users on the other. This tier itself doesn’t store any data: It only contains logical views and metadata needed to access the sources. With the abstraction layer, end users only see the schematic data models whereas the complexities of the bottom data structures are hidden from them. So, once the data attributes are pulled in, the abstraction layer will allow you to apply joins, business rules, and other data transformations to create logical views on top of the physical views and metadata delivered by the connection layer. These integration processes can be modeled with the help of a drag-and-drop interface or a query language like SQL, depending on the data virtualization tool. Usually, there are various prebuilt templates and components to do all the modeling, matching, converting, and integration jobs. The essential components of the virtual layer are

- Metadata management — to import, document, and maintain metadata attributes such as column names, table structure, tags, etc.

- Dynamic data catalog — to organize data by profiling, tagging, classifying, and mapping it to business definitions so that end-users can easily find what they need.

- Query optimization — to improve query processing performance by caching virtual entities, enabling automatic joins, and supporting push-down querying (pushing down request operation to the source database).

- Data quality control — to ensure that all information is correct by applying data validation logic.

- Data security and governance — to provide different security levels to admins, developers, and consumer groups as well as define clear data governance rules, removing barriers for information sharing.

- Consuming layer: Another tier of the data virtualization architecture provides a single point of access to data kept in the underlying sources. The delivery of abstracted data views happens through various protocols and connectors depending on the type of consumer. They may communicate with the virtual layer via SQL and all sorts of APIs, including access standards like JDBC and ODBC, REST and SOAP APIs, and many others. Most data virtualization software enables access for a wide range of business users, tools, and applications including such popular solutions as Tableau, Cognos, and Power BI.

As mentioned above, such a structure produces self-service capabilities for all consumers. Instead of making queries directly, they interact with the software used in their day-to-day operations, and that software, in turn, interacts with the virtual layer, getting the required data. In this way, consumers don’t need to care about the format of the data or its location as these complexities are masked from them.

Use Cases & Systems Used With Data Virtualization Technology[4]

- DevOps: For teams that need to transform app-driven customer experiences, oftentimes everything is automated except for the data. Data virtualization enables teams to deliver production-quality data to enterprise stakeholders for all phases of application development.

- ERP Upgrades: Over half of all ERP projects run past schedule and budget. The main reason? Standing up and refreshing project environments is slow and complex. Data virtualization can cut complexity, lower TCO, and accelerate projects by delivering virtual data copies to ERP teams more efficiently than legacy processes.

- Cloud Migration: Data virtualization technology can provide a secure and efficient mechanism to replace TB-size datasets from on-premise to the cloud, before spinning up space-efficient data environments needed for testing and cutover rehearsal.

- Analytics and Reporting: Virtual data copies can provide a sandbox for destructive query and report design and facilitate on-demand, data access across sources for BI projects that require data integration (MDM, M&A, global financial close, etc.)

- Backup and Production Support: In the event of a production issue, the ability to provide complete virtual data environments can help teams identify the root cause and validate that any change does not cause unanticipated regressions.

Data Virtualization Drivers[5]

Data virtualization allows for federated and real-time data access, integration, and sharing – without the need for replication or additional data silos. Here are the key business drivers for data virtualization:

- Cost: Data virtualization is a cost-effective approach to data integration, which can even replace ETL when Transformation (the “T” in “ETL”) requirements are light (or non-existent).

- Data sharing: Data virtualization is useful for integrated data access and sharing, especially in light of regulatory constraints on the physical movement of data. It also allows for cross-platform data analysis, such as combining historical data from a data warehouse with data from an external third-party data source to respond to a particular query.

- Experimentation: Less technical roles, like domain-based citizen integrators, can participate in federated data access, to obtain better business outcomes. An integrated dataset quickly provisioned as a “sandbox”, can provide citizen integrators with the ability to do experimental integration and to get access to data for ad-hoc queries that arise.

- Flexibility: Data virtualization lends flexibility to enterprise data integration architectures, by allowing users to virtualize and integrate different data models and technologies – without physically moving data into a repository – including unstructured and diverse types of data (e.g., IoT and time series data).

- Reusability: With data virtualization, enterprises benefit from the reusability of integration artifacts across a wide range of business needs, including logical data warehousing, virtualized operational data stores, and master data management (via MDM tools).

Data Virtualization Design Considerations<trf>Data Virtualization Design Considerations</ref>

Data Virtualization platforms deliver many benefits over traditional data solutions. However, there are certain constraints that need to be considered when designing your solution:

- Data virtualization technology works by accessing source data in real time via production systems. This is in contrast to a data warehouse or master data management solution where data is often stored in pre-aggregated/sorted storage and therefore typically provides faster response times.

- Data virtualization is not capable of providing a historical analysis of data. A data warehouse or analytical database is typically required which isn’t the original concept of data virtualization.

- Data cleansing and/or data transformation can still be a complex task in the virtual layer.

- Changes to the virtual data model can sometimes be associated with increased effort because, for a change to be fully applied, it has to be accepted by all-consuming applications and users.

- The original requirement of data virtualization was to retrieve data using a single query language to provide a speedy query response and to quickly assemble different data models or views of the data to meet specific needs. However, in reality, this goal has still not been realized in every product.

Data Virtualization Vs. Data Warehousing[6]

Some enterprise landscapes are filled with disparate data sources including multiple data warehouses, data marts, and/or data lakes, even though a Data Warehouse, if implemented correctly, should be unique and a single source of truth. Data virtualization can efficiently bridge data across data warehouses, data marts, and data lakes without having to create a whole new integrated physical data platform. Existing data infrastructure can continue performing its core functions while the data virtualization layer just leverages the data from those sources. This aspect of data virtualization makes it complementary to all existing data sources and increases the availability and usage of enterprise data.[citation needed]

Data virtualization may also be considered as an alternative to ETL and data warehousing but for performance considerations, it's not really recommended for a very large data warehouse. Data virtualization is inherently aimed at producing quick and timely insights from multiple sources without having to embark on a major data project with extensive ETL and data storage. However, data virtualization may be extended and adapted to serve data warehousing requirements also. This will require an understanding of the data storage and history requirements along with planning and design to incorporate the right type of data virtualization, integration, storage strategies, and infrastructure/performance optimizations (e.g., streaming, in-memory, hybrid storage)

Benefits and Drawbacks of Data Virtualization[7]

Data Virtualization is versatile and applicable in several domains. The followings are some benefits and drawbacks of implementing data visualization as a solution.

Benefits of Data Virtualization

- The virtual clones use minimal storage in comparison to the source data.

- Snapshotting the source data is a very fast process that can be done in seconds.

- Connectivity with various types of data sources is possible with data virtualization. Data virtualization can be used with structured sources such as relational database management systems, semi-structured sources such as web services, and unstructured data sources such as file documents.

- Simplifies data management, and reduces system workload by creating virtual clones.

- Usually only requires a single-pass data cleansing operation. Integrating external data cleaning and data quality solutions can also be supported using APIs.

- Data Virtualization is highly adept at reading and transforming unstructured or semi-structured data.

- Depending on the source and type of data, data virtualization solutions have built-in mechanisms for accessing data. This provides transparency for users who are generating analytics or reports from these data sources.

- Since users only have access to virtual clones, the source data is secure, and no unintentional changes can be made to it.

- Data virtualization allows the user access to near real-time data, which means any downstream analytics done with the data are able to illustrate information accurately at the time of generation.

Drawbacks of Data Virtualization

- Badly designed data virtualization platforms cannot cope with very large or unanticipated queries.

- Setting up a data virtualization platform can be very difficult, and the initial investment costs can be high.

- Searching for appropriate data sources for analytics purposes, for example, can be very time-consuming.

- Data virtualization isn’t suitable for keeping track of historical data; for such purposes, a Data Warehouse is more suitable.

- Direct access to systems for reporting and analytics via a data virtualization platform may cause too much disruption and lead to performance deterioration.

See Also

References

- ↑ Required Data Virtualization Components for Business Needs

- ↑ What are the Uses of Data Virtualization?

- ↑ How data virtualization works: Main architecture layers

- ↑ Common Use Cases & Systems Used With Data Virtualization Technology

- ↑ Data Virtualization Drivers

- ↑ Data virtualization and data warehousing

- ↑ What are the Benefits and Drawbacks of Data Virtualization?