Difference between revisions of "IT Operations Analytics (ITOA)"

Revision as of 16:13, 19 July 2019

IT operations analytics (ITOA) involves collecting IT data from different sources, examining that data in a broader context, and identifying problems before they occur. With the right ITOA solution, you can detect patterns early and predict issues before they arise. You can extract insight from key operational data types, such as log files, performance metrics, events, and trouble tickets, so you can:

- Proactively avoid outages.

- Achieve faster mean time to repair (MTTR).

- Realize cost savings through greater operational efficiency.[1]
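As a rough illustration of the MTTR metric mentioned above, the following sketch averages the time between an incident being opened and being resolved. The ticket timestamps and the `mean_time_to_repair` helper are hypothetical and are not taken from any particular ITOA product.

```python
from datetime import datetime, timedelta

# Hypothetical trouble-ticket records: (opened, resolved) timestamps.
incidents = [
    (datetime(2019, 7, 1, 2, 0), datetime(2019, 7, 1, 3, 30)),     # 90 minutes
    (datetime(2019, 7, 8, 14, 15), datetime(2019, 7, 8, 14, 45)),  # 30 minutes
    (datetime(2019, 7, 15, 9, 0), datetime(2019, 7, 15, 10, 0)),   # 60 minutes
]


def mean_time_to_repair(records):
    """Return the average repair duration as a timedelta."""
    if not records:
        raise ValueError("at least one incident is required")
    # Sum the individual repair durations, starting from a zero timedelta.
    total = sum((resolved - opened for opened, resolved in records), timedelta())
    return total / len(records)


mttr = mean_time_to_repair(incidents)
print(mttr)  # 1:00:00
```

Tracking this figure over time is one simple way to check whether an analytics effort is actually shortening repairs.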

Definition of IT Operations Analytics (ITOA)[2]

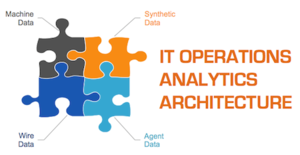

IT Operations Analytics (ITOA) is an approach that allows you to eradicate traditional data silos using Big Data principles. By analyzing all your data from multiple sources, including log, agent, and wire data, you'll be able to support proactive and data-driven operations with clear, contextualized operational intelligence.

ITOA Uses Big Data Principles

Nearly every Big Data initiative has the objective of transforming an organization's access to data for better and more agile business insights and actions. However, this can only be achieved through the extraction, indexing, storage, and analysis of many different data sources, coupled with the flexibility to add more data sources as they become available or as opportunities arise. To provide the greatest flexibility and avoid vendor lock-in, more organizations are adopting open-source technologies like Elasticsearch, MongoDB, Spark, Cassandra, and Hadoop as their common data store. This same approach is at the heart of ITOA.

A Big Data practice provides the flexibility to combine and correlate different data sets and their sources to derive unexpected and new insights. The same objective applies to IT Operations Analytics.
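To make the idea of combining and correlating data sets concrete, here is a minimal sketch that joins log events with metric samples from the same host within a short time window. The record layouts, field names, and the 60-second window are assumptions chosen for illustration; a real deployment would query a shared data store rather than in-memory lists.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Hypothetical records from two separate sources.
log_events = [
    {"host": "web-1", "time": datetime(2019, 7, 19, 16, 0, 5), "message": "HTTP 500"},
    {"host": "web-2", "time": datetime(2019, 7, 19, 16, 3, 0), "message": "HTTP 500"},
]
cpu_samples = [
    {"host": "web-1", "time": datetime(2019, 7, 19, 16, 0, 0), "cpu_percent": 97.0},
    {"host": "web-1", "time": datetime(2019, 7, 19, 16, 10, 0), "cpu_percent": 35.0},
    {"host": "web-2", "time": datetime(2019, 7, 19, 16, 2, 30), "cpu_percent": 22.0},
]


def correlate(events, samples, window=timedelta(seconds=60)):
    """Attach to each log event the CPU samples from the same host within `window`."""
    by_host = defaultdict(list)
    for sample in samples:
        by_host[sample["host"]].append(sample)

    correlated = []
    for event in events:
        nearby = [
            s for s in by_host[event["host"]]
            if abs(s["time"] - event["time"]) <= window
        ]
        correlated.append({**event, "nearby_cpu": [s["cpu_percent"] for s in nearby]})
    return correlated


for row in correlate(log_events, cpu_samples):
    print(row["host"], row["message"], row["nearby_cpu"])
```

In this made-up data, the error on web-1 coincides with a CPU spike while the error on web-2 does not, which is the kind of distinction that is hard to see when logs and metrics live in separate tools.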

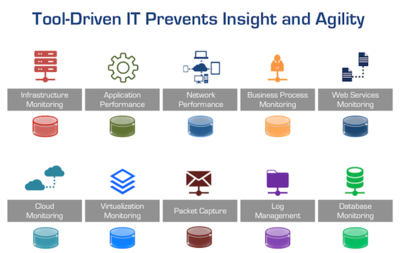

The Shift from Tools-Driven to Data-Driven IT

The last couple of decades have seen an accumulation of disjointed tools, resulting in islands of data that prevent you from getting a complete picture of your environment. This is the antithesis of Big Data. If the CIO wants their organization to be data-driven, with the ability to provide better performance, availability, and security analysis while making more informed investment decisions, they must design a data-driven monitoring practice. This requires a shift in thinking from today's tool-centric approach to a data-driven model similar to a Big Data initiative.

Tool-centric approaches create data silos, tool bloat, and frequent cross-team dysfunction.

- If the CISO wants better security insight, monitoring, and surveillance, they must think in terms of continuous pervasive monitoring and correlated data sources, not in terms of analyzing the data silos of log management, anomaly detection, packet capture systems, or malware monitoring systems.

- If the VP of Application Development wants better cross-team collaboration and faster, more reliable, and more predictable application upgrades and rollouts for both on-premises and cloud-based workloads, they must have a continuous data-driven monitoring architecture and practice. The ITOA monitoring architecture must span the entire application delivery chain, not just the application stack. Because workloads are so interdependent, an organization without this data will be flying blind, resulting in project delays, capacity issues, cross-team dysfunction, and increased costs.

- If the VP of Operations wants to cut their mean time to resolution (MTTR) in half, dramatically reduce downtime, and improve end-user experience while instituting a continuous improvement effort, they must have the ability to unify operational data sets and analyze across them.

- The CIO, security, application, network, and operations teams can achieve these objectives by drawing from the exact same data sets that are the foundation of ITOA. This effort should not be difficult or costly, or take years to implement. In fact, this data-driven approach to IT is being accomplished today; we're just codifying the design principles and practices we've observed and learned from our own customers, who are the inspiration behind it.

IT Operations Analytics (ITOA) Architecture[3]

Fundamentally, there are four primary sources of intelligence and insight about your applications and infrastructure. To get a complete picture, you need some way of capturing each of these sources of IT visibility:

- Machine data (logs, WMI, SNMP, etc.) – This is information gathered by the system itself and helps IT teams identify overburdened machines, plan capacity, and perform forensic analysis of past events. New distributed log-file analysis solutions such as Splunk, Loggly, Log Insight, and Sumo Logic enable IT organizations to address a broader set of tasks with machine data, including answering business-related questions.

- Agent data (code-level instrumentation) – Code diagnostic tools including byte-code instrumentation and call-stack sampling have traditionally been the purview of Development and QA teams, helping to identify hotspots or errors in the software code. These tools are especially popular for custom-developed Java and .NET web applications. New SaaS vendors such as New Relic and AppDynamics have dramatically simplified the deployment of agent-based products.

- Synthetic data (service checks and synthetic transactions) – This data enables IT teams to test common transactions from locations around the globe or to determine if there is a failure in their network infrastructure. Although synthetic transactions are no replacement for real-user data, they can help IT teams discover errors or outages at 2 a.m., when users have not yet logged in. Tools that fit into this category include Nimsoft, Pingdom, Gomez, and Keynote.

- Wire data (L2-L7 communications, including full bi-directional payloads) – The information available off the wire, which has traditionally included HTTP traffic analysis and packet capture, has been used for measuring, mapping, and forensics. However, products from vendors such as Riverbed and NetScout barely scratch the surface of what's possible with this data source. For one thing, they do not analyze the full bi-directional payload and so cannot programmatically extract, measure, or visualize anything in the transaction payload. NetFlow and other flow records, although technically machine data generated by network devices, could be considered wire data because they provide information about which systems are talking to other systems, similar to a telephone bill.

Every IT organization needs visibility into these four sources of data. Each data source serves an important purpose, although those purposes are evolving as new technologies expand what's possible. In the realm of wire data, ExtraHop unlocks the tremendous potential of this data source by making it available in real time and in a way that makes sense for all IT teams.
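The "telephone bill" view of flow records described in the wire data item can be sketched as a simple aggregation of who talked to whom and how much. The tuple layout and addresses below are hypothetical and far simpler than real NetFlow records, which carry many more fields.

```python
from collections import Counter

# Hypothetical flow records: (source, destination, bytes transferred).
flows = [
    ("10.0.0.5", "10.0.1.20", 1200),
    ("10.0.0.5", "10.0.1.20", 800),
    ("10.0.0.7", "10.0.1.20", 500),
    ("10.0.0.5", "10.0.2.9", 300),
]


def summarize_conversations(records):
    """Return total bytes per (source, destination) pair, largest first."""
    totals = Counter()
    for src, dst, byte_count in records:
        totals[(src, dst)] += byte_count
    return totals.most_common()


for (src, dst), total in summarize_conversations(flows):
    print(f"{src} -> {dst}: {total} bytes")
```

A summary like this shows which systems communicate but nothing about what they said, which is the limitation the text attributes to flow data compared with full payload analysis.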

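- [1] Defining IT Operations Analytics (ITOA). https://www.ibm.com/cloud-computing/xx-en/products/hybrid-it-management/it-operations-analytics/

- [2] Eric Giesa. Definition: What Is IT Operations Analytics (ITOA)? https://www.extrahop.com/company/blog/2016/it-operations-analytics-itoa-big-data/

- [3] Tyson Supasatit. The Four Primary Sources of Data for IT Operations Analytics (ITOA). https://www.extrahop.com/company/blog/2013/four-data-sources-for-it-operations-analytics/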