Difference between revisions of "MLOps"

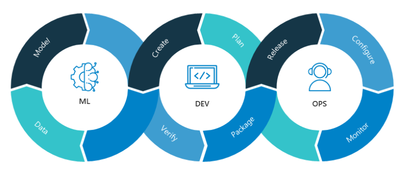

Latest revision as of 16:54, 6 February 2021

MLOps (Machine Learning Operations) is a set of practices that provide determinism, scalability, agility, and governance in the model development and deployment pipeline. This new paradigm focuses on four key areas within model training, tuning, and deployment (inference): machine learning must be reproducible, it must be collaborative, it must be scalable, and it must be continuous.[1]

MLOps is modeled on the existing discipline of DevOps, the modern practice of efficiently writing, deploying and running enterprise applications. DevOps got its start a decade ago as a way for warring tribes of software developers (the Devs) and IT operations teams (the Ops) to collaborate. MLOps adds data scientists to the team, who curate datasets and build AI models that analyze them. It also includes ML engineers, who run those datasets through the models in disciplined, automated ways. It is a big challenge in raw performance as well as management rigor. Datasets are massive and growing, and they can change in real time. AI models require careful tracking through cycles of experiments, tuning and retraining. So, MLOps needs a powerful AI infrastructure that can scale as companies grow.[2]

MLOps combines machine learning, applications development and IT operations

source: Neal Analytics

The Guiding Principles of MLOps[3]

- Collaborative: Machine learning should be collaborative.

When approached from the perspective of a model, or even of ML code, machine learning cannot be developed truly collaboratively, because most of what makes the model is hidden. MLOps encourages teams to make everything that goes into producing a machine learning model visible, from data extraction to model deployment and monitoring. Turning tacit knowledge into code makes machine learning collaborative.

- Reproducible: Machine learning should be reproducible.

Data scientists should be able to audit and reproduce every production model. In software development, version control for code is standard, but machine learning requires more than that. Most importantly, it means versioning data as well as parameters and metadata. Storing all training-related artifacts ensures that models can always be reproduced.
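The idea of versioning data, parameters and metadata together can be sketched as a small run manifest. This is a minimal illustration, not the method of any particular MLOps tool: the function names `fingerprint` and `build_manifest`, the manifest fields and the 12-character `run_id` are all assumptions made for this example.

```python
import hashlib
import json


def fingerprint(payload: bytes) -> str:
    """Return a SHA-256 hex digest that identifies the exact bytes given."""
    return hashlib.sha256(payload).hexdigest()


def build_manifest(data: bytes, params: dict, metadata: dict) -> dict:
    """Record what went into a training run (hypothetical manifest layout).

    The dataset and the parameters are hashed so that any change to either
    one produces a different manifest, and therefore a different run_id.
    """
    # Serialize with sorted keys so that key order does not change the hash.
    canonical_params = json.dumps(params, sort_keys=True).encode("utf-8")
    manifest = {
        "data_sha256": fingerprint(data),
        "params_sha256": fingerprint(canonical_params),
        "params": params,
        "metadata": metadata,
    }
    canonical_manifest = json.dumps(manifest, sort_keys=True).encode("utf-8")
    # Short identifier derived from everything recorded above.
    manifest["run_id"] = fingerprint(canonical_manifest)[:12]
    return manifest


if __name__ == "__main__":
    dataset = b"age,label\n31,1\n45,0\n"
    manifest = build_manifest(
        dataset,
        params={"learning_rate": 0.1, "max_depth": 3},
        metadata={"code_version": "abc123", "author": "example"},
    )
    print(json.dumps(manifest, indent=2, sort_keys=True))
```

Storing such a manifest next to the trained model gives an auditor a way to check whether a later run used the same data and parameters: identical inputs yield an identical `run_id`, and any change yields a different one.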

- Continuous: Machine learning should be continuous.

A machine learning model is temporary. The lifecycle of a trained model depends entirely on the use case and how dynamic the underlying data is. Building a fully automatic, self-healing system may have diminishing returns depending on the use case, but machine learning should be thought of as a continuous process, and as such, retraining a model should be as close to effortless as possible.
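One way to make retraining close to effortless is to reduce the decision to a single, explicit rule that a scheduler can evaluate. The sketch below is only an illustration under stated assumptions: the function `should_retrain`, its two triggers (model age and volume of new data) and the threshold values in the usage example are hypothetical and are not taken from the source.

```python
from datetime import datetime, timedelta


def should_retrain(
    trained_at: datetime,
    now: datetime,
    new_rows: int,
    max_age: timedelta,
    max_new_rows: int,
) -> bool:
    """Decide whether a model is due for retraining (hypothetical policy).

    Retraining is triggered when the model is older than max_age or when
    at least max_new_rows rows have arrived since it was trained.
    """
    too_old = (now - trained_at) >= max_age
    enough_new_data = new_rows >= max_new_rows
    return too_old or enough_new_data


if __name__ == "__main__":
    trained = datetime(2021, 1, 1)
    today = datetime(2021, 2, 6)
    due = should_retrain(
        trained_at=trained,
        now=today,
        new_rows=1_200,
        max_age=timedelta(days=30),
        max_new_rows=10_000,
    )
    print("retrain" if due else "keep current model")
```

How aggressive the thresholds should be depends on how dynamic the underlying data is: a use case with slowly changing data can tolerate a long `max_age`, while fast-moving data argues for a smaller one.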

- Tested: Machine learning should be tested & monitored.

Testing and monitoring are part of engineering practices, and machine learning should be no different. In the machine learning context, performance means not only technical performance (such as latency) but, more importantly, predictive performance. MLOps best practices encourage making expected behavior visible and setting standards that models should adhere to, rather than relying on gut feeling.
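The standards described above can be written down as an explicit check that covers both predictive and technical performance. The following is a minimal sketch, assuming accuracy as the predictive metric and a single latency figure in milliseconds; the names `ModelStandards`, `accuracy` and `check_model` and the threshold values are hypothetical choices for this example.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class ModelStandards:
    """Thresholds a model must meet before release (illustrative values)."""

    min_accuracy: float
    max_latency_ms: float


def accuracy(predictions: Sequence[int], labels: Sequence[int]) -> float:
    """Fraction of predictions that match the labels."""
    if len(predictions) != len(labels) or len(labels) == 0:
        raise ValueError("predictions and labels must be non-empty and equal in length")
    correct = sum(1 for p, y in zip(predictions, labels) if p == y)
    return correct / len(labels)


def check_model(
    predictions: Sequence[int],
    labels: Sequence[int],
    latency_ms: float,
    standards: ModelStandards,
) -> List[str]:
    """Return a list of violated standards; an empty list means the model passes."""
    failures = []
    acc = accuracy(predictions, labels)
    if acc < standards.min_accuracy:
        failures.append(
            f"accuracy {acc:.3f} is below the minimum {standards.min_accuracy:.3f}"
        )
    if latency_ms > standards.max_latency_ms:
        failures.append(
            f"latency {latency_ms:.1f} ms exceeds the maximum {standards.max_latency_ms:.1f} ms"
        )
    return failures


if __name__ == "__main__":
    standards = ModelStandards(min_accuracy=0.70, max_latency_ms=50.0)
    problems = check_model([1, 0, 1, 1], [1, 0, 0, 1], latency_ms=20.0, standards=standards)
    print(problems or "model meets all standards")
```

Running the same check in a test suite before deployment and on live traffic afterwards keeps the expected behavior visible in one place, instead of leaving it to individual judgment.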

History of MLOps[4]

The challenges of the ongoing use of machine learning in applications were highlighted in a 2015 paper titled "Hidden Technical Debt in Machine Learning Systems".

The predicted growth in machine learning includes an estimated doubling of ML pilots and implementations from 2017 to 2018, and again from 2018 to 2020. Spending on machine learning is estimated to reach $57.6 billion by 2021, a compound annual growth rate (CAGR) of 50.1%.

Reports show that a majority (up to 88%) of corporate AI initiatives are struggling to move beyond test stages[citation needed]. However, those organizations that actually put AI and machine learning into production saw profit margin increases of 3-15%.

In 2018, following a presentation from Google about ML productionization, MLOps and approaches to it began to gain traction among AI/ML experts, companies, and technology journalists as a solution that can address the complexity and growth of machine learning in businesses.

In October 2020, ModelOp, Inc. launched ModelOp.io, an online hub for MLOps and ModelOps resources. Alongside the launch of this website, ModelOp released a Request for Proposal (RFP) template. Resulting from interviews with industry experts and analysts, this RFP template was designed to address the functional requirements of ModelOps and MLOps solutions.

See Also

ModelOps

Machine Learning

Machine-to-Machine (M2M)

Artificial Intelligence (AI)

Artificial General Intelligence (AGI)

Artificial Neural Network (ANN)

References

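- [1] Definition - What does MLOps Mean? Paperspace Blog. https://blog.paperspace.com/what-is-mlops/

- [2] What is MLOps? Nvidia blog. https://blogs.nvidia.com/blog/2020/09/03/what-is-mlops/

- [3] The Guiding Principles of MLOps. Valohai

- [4] History of MLOps. Wikipedia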