Test Driven Development (TDD)

Test-driven development (TDD) is a software development process that relies on the repetition of a very short development cycle: first the developer writes an (initially failing) automated test case that defines a desired improvement or new function, then produces the minimum amount of code to pass that test, and finally refactors the new code to acceptable standards.[1]

Test Driven Development is about writing the test first, before adding new functionality to the system. This seems backwards at first, but doing this:

- Defines success up front.

- Helps break our design down into little pieces, and

- Leaves us with a nice suite of unit tests proving our stuff works.

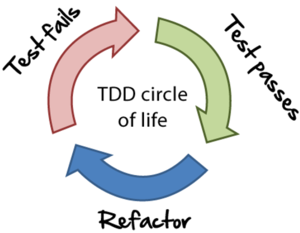

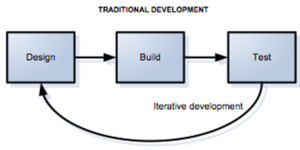

Agile developers work in this circle of life when adding new code. Write the test first. Make it pass. Then refactor. (See Figure 1.)[2]

Figure 1. source: Agile in a Nutshell

Origins of Test Driven Development (TDD) [3]

While the idea of having test elaboration precede programming is not original to the Agile community, TDD constitutes a breakthrough insofar as it combines that idea with that of "developer testing", providing developer testing with renewed respectability.

- 1976: publication of "Software Reliability" by Glenford Myers, which states as an "axiom" that "a developer should never test their own code" (Dark Ages of Developer Testing)

- 1990: testing discipline dominated by "black box" techniques, in particular in the form of "capture and replay" testing tools

- 1991: independent creation of a testing framework at Taligent with striking similarities to SUnit

- 1994: Kent Beck writes the SUnit testing framework for Smalltalk

- 1998: article on Extreme Programming mentions that "we usually write the test first"

- 1998 to 2002: "Test First" is elaborated into "Test Driven", in particular on the C2.com Wiki

- 2000: Mock Objects are among the novel techniques developed during that period

- 2003: publication of "Test Driven Development: By Example" by Kent Beck

- By 2006, TDD is a relatively mature discipline which has started encouraging further innovations derived from it, such as ATDD or BDD

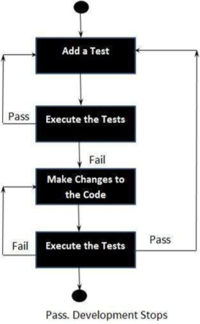

Test-Driven Development Process (Figure 2.)[4]

- Add a Test

- Run all tests and see if the new one fails

- Write some code

- Run tests and Refactor code

- Repeat

Figure 2. source: TutorialsPoint
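
As a minimal sketch of one pass through this cycle, consider a leap-year check in Python. The function name `is_leap_year` and the test class are hypothetical and chosen only for illustration; they do not come from the cited sources. The tests were written first and failed while the function did not yet exist, and the function body is just enough code to make them pass.

```python
import unittest


def is_leap_year(year: int) -> bool:
    """Hypothetical function under test, written only after the tests below existed."""
    # "Write some code": just enough logic to turn the failing tests green.
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


class LeapYearTest(unittest.TestCase):
    # "Add a test": each test was added before the behavior it describes.
    # "Run all tests": without is_leap_year in place, each of these fails first.
    def test_year_divisible_by_4_is_leap(self):
        self.assertTrue(is_leap_year(2024))

    def test_century_year_is_not_leap(self):
        self.assertFalse(is_leap_year(1900))

    def test_year_divisible_by_400_is_leap(self):
        self.assertTrue(is_leap_year(2000))

    def test_ordinary_year_is_not_leap(self):
        self.assertFalse(is_leap_year(2023))
```

Saved in a file, the tests can be run with `python -m unittest`. Any later refactoring of `is_leap_year` is then checked by rerunning the same four tests.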

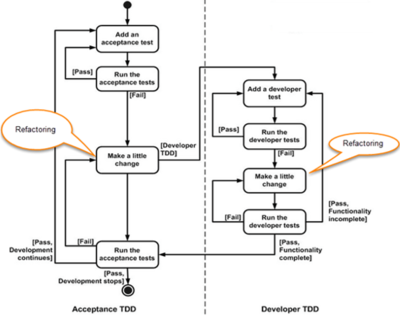

Levels of Test Driven Development (TDD) (Figure 3.)[5]

There are two levels of TDD:

- Acceptance TDD (ATDD): With ATDD you write a single acceptance test. This test fulfills the requirement of the specification or satisfies the behavior of the system. After that, you write just enough production/functionality code to fulfill that acceptance test. The acceptance test focuses on the overall behavior of the system. ATDD is sometimes also called Behavior Driven Development (BDD).

- Developer TDD: With developer TDD you write a single developer test, i.e. a unit test, and then just enough production code to fulfill that test. The unit test focuses on one small piece of functionality of the system. Developer TDD is often simply called TDD.

The main goal of ATDD and TDD is to specify detailed, executable requirements for your solution on a just-in-time (JIT) basis. JIT means taking into consideration only those requirements that are needed in the system, which increases efficiency.

Figure 3. source: Guru99
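
The difference between the two levels can be sketched with a small Python example. Everything here is hypothetical and assumed for illustration: the `Cart` class, the `apply_discount` function, and the `SAVE10` code with its 10% rule. The developer test exercises one small function on its own, while the acceptance test is phrased as a requirement and drives the behavior a customer would see.

```python
from dataclasses import dataclass, field


def apply_discount(total_cents: int, percent: int) -> int:
    """Small unit of logic: the target of a developer (unit) test."""
    return total_cents - (total_cents * percent) // 100


@dataclass
class Cart:
    """Hypothetical entry point of the system: the target of an acceptance test."""
    items: list = field(default_factory=list)
    discount_percent: int = 0

    def add(self, name: str, price_cents: int) -> None:
        self.items.append((name, price_cents))

    def redeem(self, code: str) -> None:
        # Assumed business rule for this sketch: one known code, 10% off.
        if code == "SAVE10":
            self.discount_percent = 10

    def checkout_total(self) -> int:
        subtotal = sum(price for _, price in self.items)
        return apply_discount(subtotal, self.discount_percent)


def test_unit_apply_discount():
    # Developer TDD: one small function, no collaborators.
    assert apply_discount(2000, 10) == 1800
    assert apply_discount(2000, 0) == 2000


def test_acceptance_customer_with_code_pays_discounted_total():
    # ATDD: stated as a requirement and driven through public behavior.
    cart = Cart()
    cart.add("book", 1500)
    cart.add("pen", 500)
    cart.redeem("SAVE10")
    assert cart.checkout_total() == 1800
```

In practice the acceptance test would usually be written first and stay red while several unit-level cycles build up the pieces it needs.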

Characteristics of a Good Unit Test [6]

A good unit test has the following characteristics.

- Runs fast, runs fast, runs fast. If the tests are slow, they will not be run often.

- Separates or simulates environmental dependencies such as databases, file systems, networks, queues, and so on. Tests that exercise these will not run fast, and a failure does not give meaningful feedback about what the problem actually is.

- Is very limited in scope. If the test fails, it's obvious where to look for the problem. Use few Assert calls so that the offending code is obvious. It's important to only test one thing in a single test.

- Runs and passes in isolation. If the tests require special environmental setup or fail unexpectedly, then they are not good unit tests. Change them for simplicity and reliability. Tests should run and pass on any machine. The "works on my box" excuse doesn't work.

- Often uses stubs and mock objects. If the code being tested typically calls out to a database or file system, these dependencies must be simulated, or mocked. These dependencies will ordinarily be abstracted away by using interfaces.

- Clearly reveals its intention. Another developer can look at the test and understand what is expected of the production code.
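
Several of these characteristics can be seen together in a short Python sketch that uses `unittest.mock.Mock` from the standard library. The `WelcomeService` class and its `mailer` dependency are hypothetical names assumed for illustration. Because the dependency is passed in, the test can replace the network-bound mail gateway with a mock, so it runs fast, runs in isolation, and checks one thing.

```python
from unittest.mock import Mock


class WelcomeService:
    """Hypothetical production class that depends on a mail gateway."""

    def __init__(self, mailer):
        # The dependency is injected, so a test can pass a stand-in.
        self.mailer = mailer

    def register(self, email: str) -> bool:
        if "@" not in email:
            return False
        self.mailer.send(to=email, subject="Welcome")
        return True


def test_register_sends_one_welcome_mail():
    mailer = Mock()  # simulates the network-bound dependency
    service = WelcomeService(mailer)

    assert service.register("ada@example.com") is True

    mailer.send.assert_called_once_with(to="ada@example.com", subject="Welcome")


def test_register_rejects_invalid_address_without_sending():
    mailer = Mock()
    service = WelcomeService(mailer)

    assert service.register("not-an-address") is False

    mailer.send.assert_not_called()
```

The test names state the expected behavior, so another developer can read them as a description of what `register` is supposed to do.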

Test Driven Development (TDD): Best Practices [7]

- Test structure: Effective layout of a test case ensures all required actions are completed, improves the readability of the test case, and smooths the flow of execution. Consistent structure helps in building a self-documenting test case. A commonly applied structure for test cases has (1) setup, (2) execution, (3) validation, and (4) cleanup.

  - Setup: Put the Unit Under Test (UUT) or the overall test system in the state needed to run the test.

  - Execution: Trigger/drive the UUT to perform the target behavior and capture all output, such as return values and output parameters. This step is usually very simple.

  - Validation: Ensure the results of the test are correct. These results may include explicit outputs captured during execution or state changes in the UUT.

  - Cleanup: Restore the UUT or the overall test system to the pre-test state. This restoration permits another test to execute immediately after this one.

- Individual best practices: One should:

  - Separate common set-up and teardown logic into test support services utilized by the appropriate test cases.

  - Keep each test oracle focused on only the results necessary to validate its test.

  - Design time-related tests to allow tolerance for execution in non-real-time operating systems. The common practice of allowing a 5-10 percent margin for late execution reduces the potential number of false negatives in test execution.

  - Treat your test code with the same respect as your production code. It also must work correctly for both positive and negative cases, last a long time, and be readable and maintainable.

  - Get together with your team and review your tests and test practices to share effective techniques and catch bad habits. It may be helpful to review this section during your discussion.

- Practices to avoid, or "anti-patterns":

  - Having test cases depend on system state manipulated from previously executed test cases (i.e., you should always start a unit test from a known and pre-configured state).

  - Dependencies between test cases. A test suite where test cases are dependent upon each other is brittle and complex. Execution order should not be presumed. Basic refactoring of the initial test cases or structure of the UUT causes a spiral of increasingly pervasive impacts in associated tests.

  - Interdependent tests. Interdependent tests can cause cascading false negatives. A failure in an early test case breaks a later test case even if no actual fault exists in the UUT, increasing defect analysis and debug efforts.

  - Testing precise execution behavior timing or performance.

  - Building "all-knowing oracles". An oracle that inspects more than necessary is more expensive and brittle over time. This very common error is dangerous because it causes a subtle but pervasive time sink across the complex project.

  - Testing implementation details.

  - Slow running tests.
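
The setup, execution, validation, and cleanup structure described above maps onto Python's `unittest` as sketched below. The `SETTINGS` dictionary and the `format_price` function are hypothetical and stand in for any unit that reads shared state. `setUp` puts the system in a known state, each test makes a single call and checks only its result, and `tearDown` restores the original state so that no test depends on another.

```python
import unittest

# Hypothetical shared state that the unit under test reads.
SETTINGS = {"currency": "USD"}


def format_price(cents: int) -> str:
    """Hypothetical unit under test: formats an amount in the configured currency."""
    symbol = {"USD": "$", "EUR": "€"}[SETTINGS["currency"]]
    return f"{symbol}{cents // 100}.{cents % 100:02d}"


class FormatPriceTest(unittest.TestCase):
    def setUp(self):
        # (1) Setup: remember the original state, then configure what the test needs.
        self._saved = dict(SETTINGS)
        SETTINGS["currency"] = "EUR"

    def tearDown(self):
        # (4) Cleanup: restore the pre-test state for whichever test runs next.
        SETTINGS.clear()
        SETTINGS.update(self._saved)

    def test_formats_euro_amount(self):
        # (2) Execution: a single call to the unit under test.
        result = format_price(1250)
        # (3) Validation: one focused assertion on the captured output.
        self.assertEqual(result, "€12.50")

    def test_pads_single_digit_cents(self):
        result = format_price(1205)
        self.assertEqual(result, "€12.05")
```

Keeping the shared setup and teardown in `setUp` and `tearDown` follows the advice to separate that logic from the individual test cases, and it means the two tests pass in either order.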

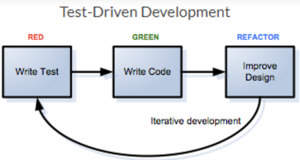

Test Driven Development (TDD) Vs. Traditional Development (Figure 4A and 4B) [8]

In TDD, test cases are written before the code itself; at that point, they cannot pass (‘red’). Code is written specifically to pass a given test case. When the written code successfully passes the test (‘green’), the passing code is refactored into a more elegant module – without introducing any new functional elements. Known as ‘red-green-refactor,’ this process is the mantra of TDD. If implemented correctly, it can be carried out five times in an hour. Iterations are continuously integrated into the overall application build, ensuring the end product has been successfully tested at every step in its development.

Figure 4A source: Base36

The TDD methodology exists in contrast to traditional development modes, which concentrate testing at the end of the development process:

Figure 4B source: Base36

Clearly, traditional development methods lack the continuously integrated granular test cases that correlate to specific pieces of logic characteristic of the TDD model. This is a big stumbling block – it means that with traditionally developed software, there is no effective way to test how a given change will affect the greater system.

Benefits of Test-Driven Development [9]

- Acceptance Criteria: When writing some new code, you usually have a list of features that are required, or acceptance criteria that need to be met. You can use either of these as a means to know what you need to test and then, once you've got that list in the form of test code, you can rest safely in the knowledge that you haven't missed any work.

- Focus: You're more productive while coding, and TDD helps keep that productivity high by narrowing your focus. You'll write one failing test, and focus solely on that to get it passing. It forces you to think about smaller chunks of functionality at a time rather than the application as a whole, and you can then incrementally build on a passing test, rather than trying to tackle the bigger picture from the get-go, which will probably result in more bugs, and therefore a longer development time.

- Interfaces: Because you're writing a test for a single piece of functionality, writing a test first means you have to think about the public interface that other code in your application needs to integrate with. You don't think about the private methods or inner workings of what you're about to work on. From the perspective of the test, you're only writing method calls to test the public methods. This means that code will read well and make more sense.

- Tidier Code: Continuing on from the point above, your tests are only interfacing with public methods, so you have a much better idea of what can be made private, meaning you don't accidentally expose methods that don't need to be public. If you weren't TDD'ing, and you made a method public, you'd then possibly have to support that in the future, meaning you've created extra work for yourself over a method that was only intended to be used internally in a class.

- Dependencies: Will your new code have any dependencies? When writing your tests, you'll be able to mock these out without really worrying about what they are doing behind the scenes, which lets you focus on the logic within the class you're writing. An additional benefit is that the dependencies you mock would potentially be faster when running the tests, and not bring additional dependencies to your test suite, in the form of filesystems, networks, databases etc.

- Safer Refactoring: Once you've got a test passing, it's then safe to refactor it, secure in the knowledge that the test cases will have your back. If you're having to work with legacy code, or code that someone else has written, and no tests have been written, you can still practice TDD. You needn't have authored the original code in order for you to TDD. Rather than thinking you can only TDD code that you have written, think of it more as you can only TDD any code you are about to write. So if you inherit someone else's untested code, before you start work, write a test that covers as much as you can. That puts you in a better position to refactor, or even to add new functionality to that code, whilst being confident that you won't break anything.

- Fewer Bugs: TDD results in more tests, which can often result in longer test run times. However, with better code coverage, you save time down the line that would be spent fixing bugs that have popped up and need time to figure out. This is not to say that you will be able to think of every test case, but if a bug does come up, you can still write a test first before attempting to fix the problem, to ensure that the bug won't come up again. This also helps define what the bug actually is, as you always need reproducible steps.

- Increasing Returns: The cost to TDD is higher at first, when compared to not writing any tests, though projects that don't have tests written first usually end up costing more. This stems from them not having decent test code coverage, or any at all, making them more susceptible to bugs and issues, which means more time is spent in the long run fixing those. More time equals more money, which makes the project more expensive overall. Not only does TDD save time on fixing bugs, it also means that the cost to change functionality is less, because the tests act as a safety net that ensures your changes won't break existing functionality.

- Living Documentation: Tests can serve as documentation to a developer. If you're unsure of how a class or library works, go and have a read through the tests. With TDD, tests usually get written for different scenarios, one of which is probably how you want to use the class. So you can see the expected inputs a method requires and what you can expect as an outcome, all based on the assertions made in the test.
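
The "Fewer Bugs" point, writing a test before fixing a reported bug, can be sketched as follows. The `average` function is hypothetical, and the requirement that an empty list yields `0.0` is an assumption made for this example. The first test was written to reproduce the crash and failed with `ZeroDivisionError` until the guard clause was added; it now stays in the suite to keep the bug from returning.

```python
def average(values):
    """Hypothetical function; its first version raised ZeroDivisionError on []."""
    if not values:  # the fix, added only after the regression test had failed
        return 0.0
    return sum(values) / len(values)


def test_average_of_empty_list_is_zero():
    # Written first to reproduce the reported bug; it now pins the fix in place.
    assert average([]) == 0.0


def test_average_of_values():
    # Existing behavior must stay intact after the fix.
    assert average([2, 4, 6]) == 4.0
```

The two tests also act as documentation: a reader can see from the assertions what inputs `average` accepts and what it returns.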

Cons of Test-Driven Development [10]

In the absence of a lot of statistical evidence, it’s tough to say TDD definitely delivers. There’s no such thing as a one-size-fits-all solution in software development. Now, let’s take a look at some of the potential disadvantages:

- It necessitates a lot of time and effort up front, which can make development feel slow to begin with.

- Focusing on the simplest design now and not thinking ahead can mean major refactoring requirements.

- It’s difficult to write good tests that cover the essentials and avoid the superfluous.

- It takes time and effort to maintain the test suite – it must be reconfigured for maximum value.

- If the design is changing rapidly, you’ll need to keep changing your tests. You could end up wasting a lot of time writing tests for features that are quickly dropped.

Things to Avoid While Writing Test Cases [11]

- Don’t write test cases that depend on system state generated by the execution of a previous test case.

- Test cases should not depend on each other. When test cases in a suite depend on one another, their order of execution is presumed rather than analyzed.

- Interdependent test cases can cause cascading failures. For example, a test case that fails at an early stage can cause the remaining test cases to fail.

- Testing precise execution timing or performance.

- Testing the implementation details.

- Writing test cases that run slowly.

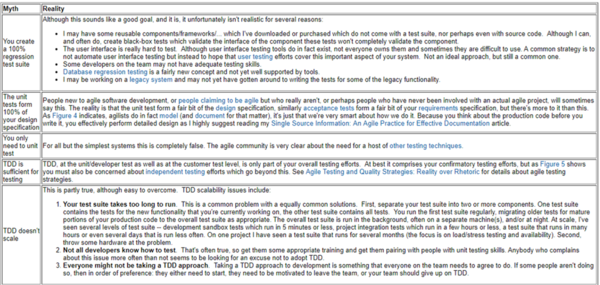

Myths and Misconceptions [12]

There are several common myths and misconceptions about TDD that I would like to clear up if possible. The table below lists these myths and describes the reality.

Addressing the myths and misconceptions surrounding TDD

source: Agile Data

References

- [1] Definition of Test Driven Development? - Technology Conversations

- [2] What is Test Driven Development? - Agile in a Nutshell

- [3] Origins of Test Driven Development (TDD) - agilealliance.org

- [4] The Test-Driven Development Process - TutorialsPoint

- [5] What are the Levels of Test Driven Development (TDD)? - Guru99

- [6] What are the Characteristics of a Good Unit Test? - Microsoft

- [7] Best Practices for Test Driven Development (TDD) - Wikipedia

- [8] Test Driven Development (TDD) Vs. Traditional Development - Base36

- [9] What are the benefits of Test-Driven Development? - Made Tech

- [10] Cons of Test-Driven Development - Lean Testing

- [11] Things to Avoid While Writing Test Cases - Kualitatem

- [12] Myths and Misconceptions - Agile Data

Further Reading

- Improving Application Quality Using Test-Driven Development (TDD) - Methods and Tools

- What Really Matters in Test-Driven Development - Visual Studio Magazine

- Why Test-Driven Development Is Like Surgery - 10Clouds

- The Value of Test-Driven Development when Writing Changeable Code - Sticky Minds

- TDD Should Be Fun - James Sinclair