User-Centered Evaluation (UCE)

User-centered evaluation is understood as evaluation conducted with methods suited to the framework of user-centered design described in ISO 13407 (ISO, 1999). Within this framework, evaluation typically focuses on the usability (ISO, 1994) of the system, possibly along with evaluations of users' subjective experiences, including factors such as enjoyment and trust (Brandtzæg, Følstad, & Heim, 2002; Egger, 2002; Jordan, 2001; Ljungblad, Skog, & Holmquist, 2002). User-centered evaluation may also focus on the user experience of a company or service across all channels of communication.[1]

User-Centered Evaluation (UCE) is defined as an empirical evaluation conducted by assessing user performance and user attitudes toward a system and by gathering subjective user feedback on effectiveness and satisfaction, quality of work, support and training costs, or user health and well-being.[2]

The term user-centered evaluation refers to evaluating the utility and value of software to its intended end users. Usability (the software's ease of use) is a necessary condition for that value, but it is not sufficient on its own.

Goals of User-Centered Evaluation (UCE)[3]

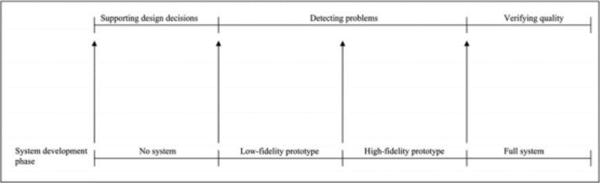

User-centered evaluation (UCE) can serve three goals: supporting decisions, detecting problems, and verifying the quality of a product (De Jong & Schellens, 1997). These functions make UCE a valuable tool for developers of all kinds of systems, because it can help them justify their efforts, improve a system, or decide which version of a system to release. In the end, this may lead to higher adoption of the system, greater ease of use, and a more pleasant user experience.

Figure 1. Source: Lex Van Velsen et al.

Formative User-Centered Evaluation[4]

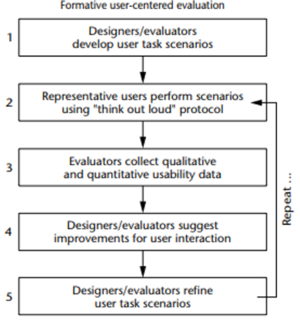

Formative user-centered evaluation is an empirical, observational evaluation method that helps ensure the usability of interactive systems by including users early and continually throughout user interaction development. The method relies heavily on usage context (for example, user task and user motivation) as well as a solid understanding of human-computer interaction (and, in the case of virtual environments (VEs), human-VE interaction). For this reason, a usability specialist generally proctors formative user-centered evaluations.

Formative evaluation aims to iteratively and quantifiably assess and improve a user interaction design. Figure 2 shows the steps of a typical formative evaluation cycle. The cycle begins with the development of user task scenarios, which are specifically designed to exploit and explore all identified task, information, and work flows. These scenarios derive from the results of the user task analysis, and they should provide adequate coverage of the tasks identified during that analysis as well as accurate sequencing of those tasks. Representative users perform these tasks as evaluators collect data. The data are then analyzed to identify user interaction components or features that either support or detract from user task performance. These observations are in turn used to suggest changes to the user interaction design as well as redesign of the formative evaluation scenarios and observations.

In the formative evaluation process, both qualitative and quantitative data are collected from representative users while they perform task scenarios. Developers often have the false impression that usability evaluation has no “real” process and no “real” data. On the contrary, experienced usability evaluators collect large volumes of both qualitative and quantitative data.

Qualitative data typically take the form of critical incidents, which occur while a user performs task scenarios. A critical incident is an event that has a significant effect, either positive or negative, on user task performance or on user satisfaction with the interface; because such events affect performance or satisfaction, they have an impact on usability. Typically, a critical incident is a problem encountered by a user (such as an error, an inability to complete a task scenario, or user confusion) that noticeably affects task flow or task performance.

Quantitative data generally relate to measures such as how long a user takes to perform a task scenario and the number of errors committed while doing so. These data are then compared to appropriate baseline metrics. Quantitative data generally indicate that a problem has occurred; qualitative data indicate where (and sometimes why) it occurred.

Figure 2. Source: Joseph L. Gabbard et al.
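The way formative evaluation compares quantitative data against baseline metrics, and then uses critical incidents to locate the problem, can be illustrated with a short Python sketch. It is not taken from Gabbard et al.: the task names, the baseline values, the choice to compare per-task means, and the names `TaskRun`, `CriticalIncident`, and `flag_tasks` are all hypothetical assumptions made for illustration.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class CriticalIncident:
    """Qualitative data: an event that noticeably affected task performance or satisfaction."""
    description: str
    positive: bool  # True if the event helped the user, False if it hindered them


@dataclass
class TaskRun:
    """Quantitative data from one representative user performing one task scenario."""
    task: str
    seconds: float  # time taken to perform the scenario
    errors: int  # number of errors committed
    incidents: list[CriticalIncident] = field(default_factory=list)


# Hypothetical baseline metrics per task: (maximum mean seconds, maximum mean errors).
BASELINES = {
    "create_report": (120.0, 1.0),
    "share_report": (45.0, 0.5),
}


def flag_tasks(runs: list[TaskRun], baselines: dict[str, tuple[float, float]]) -> dict:
    """Flag tasks whose mean time or mean error count exceeds the baseline,
    and attach the negative critical incidents recorded for those tasks."""
    findings = {}
    for task, (max_seconds, max_errors) in baselines.items():
        task_runs = [run for run in runs if run.task == task]
        if not task_runs:
            continue  # no data collected for this task yet
        mean_seconds = mean(run.seconds for run in task_runs)
        mean_errors = mean(run.errors for run in task_runs)
        if mean_seconds > max_seconds or mean_errors > max_errors:
            # The quantitative data indicate THAT a problem occurred;
            # the critical incidents help locate WHERE (and sometimes why).
            findings[task] = {
                "mean_seconds": mean_seconds,
                "mean_errors": mean_errors,
                "negative_incidents": [
                    incident.description
                    for run in task_runs
                    for incident in run.incidents
                    if not incident.positive
                ],
            }
    return findings


# Hypothetical session data from four scenario runs.
runs = [
    TaskRun("create_report", 95.0, 0),
    TaskRun("create_report", 110.0, 1),
    TaskRun("share_report", 70.0, 2,
            [CriticalIncident("could not find the Share button", positive=False)]),
    TaskRun("share_report", 50.0, 1),
]
print(flag_tasks(runs, BASELINES))
```

With this made-up data, only `share_report` is flagged, and its negative critical incident points to where the problem lies. Comparing means is just one possible choice; an evaluation team might instead compare medians or the share of runs meeting a target, depending on how its baseline metrics are defined.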

See Also

- Usability Testing

- User Experience (UX) Research

- User-Centered Design (UCD)

- Heuristic Evaluation

- Cognitive Walkthrough Method

- User Surveys

- User Persona

- Task Analysis

- A/B Testing

- Affinity Diagramming

References

- What is User-Centered Evaluation (UCE)? Asbjørn Følstad, Odd-Wiking Rahlff

- Definition of User-Centered Evaluation (UCE). This definition is partly based on the definition of human-centered design in ISO 13407, ‘Human-centered design processes for interactive systems’ (ISO, 1999).

- Goals of User-Centered Evaluation (UCE). Lex Van Velsen et al.

- What is Formative User-Centered Evaluation? Joseph L. Gabbard, Deborah Hix, J. Edward Swan II

Further Reading

- User-centered evaluation of interactive data visualization forms for document management systems. Antje Heinicke, Chen Liao, Katrin Walbaum, et al.

- User-Centered Evaluation Methodology for Interactive Visualizations NIST

- User-centered evaluation of adaptive and adaptable systems: a literature review University of Twente