Search Engine Optimization (SEO)

Search Engine Optimization is the process of improving the visibility of a website on organic ("natural" or unpaid) search engine result pages (SERPs) by incorporating search engine-friendly elements into the website. A successful search engine optimization campaign will include carefully selected, relevant keywords that the on-page optimization is designed to make prominent for search engine algorithms. Search engine optimization is broken down into two basic areas: on-page and off-page optimization. On-page optimization refers to the elements that make up a web page, such as HTML code, textual content, and images. Off-page optimization refers predominantly to backlinks (links from other relevant websites pointing to the site being optimized).[1]

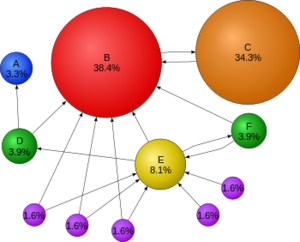

Search engines use complex mathematical algorithms to guess which websites a user seeks. In the diagram above, each bubble represents a website, and programs sometimes called spiders examine which sites link to which other sites, with arrows representing these links. Websites receiving more inbound links, or stronger links, are presumed to be more important and more likely to be what the user is searching for. In this example, since website B is the recipient of numerous inbound links, it ranks more highly in a web search. The links also "carry through": website C, even though it has only one inbound link, receives that link from a highly popular site (B), while site E does not. Note: percentages are rounded.[2]
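The "carry through" effect can be illustrated with a simplified, PageRank-style calculation. This is a sketch, not any search engine's actual ranking algorithm, and the link graph is hypothetical, loosely modeled on the diagram's description rather than copied from it. Each page splits its score evenly among the pages it links to, and a damping factor (0.85 here, an assumed value) models a visitor who sometimes jumps to a random page instead of following a link.

```python
def pagerank(links, damping=0.85, iterations=100):
    """Iteratively score pages; `links` maps each page to the pages it links to."""
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}  # start with equal scores
    for _ in range(iterations):
        # Every page first receives its share of the "random jump" probability.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page passes its score on, split evenly across its outbound links.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outbound links spreads its score across all pages.
                for other in pages:
                    new_rank[other] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical link graph: B attracts many links, C is linked only from B,
# and E is linked only from pages (F, P1, P2) that have no inbound links.
web = {
    "A": ["B"],
    "B": ["C"],
    "C": ["B"],
    "D": ["A", "B"],
    "E": ["B", "D"],
    "F": ["B", "E"],
    "P1": ["E"],
    "P2": ["E"],
}

scores = pagerank(web)
for page, score in sorted(scores.items(), key=lambda item: item[1], reverse=True):
    print(f"{page}: {score:.1%}")
```

With this graph, B comes out on top, and C, whose single inbound link comes from B, outranks E, whose three inbound links all come from pages that nobody links to.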

History of SEO[3]

Webmasters and content providers began optimizing websites for search engines in the mid-1990s, as the first search engines were cataloging the early Web. Initially, all webmasters needed to do was submit the address of a page, or URL, to the various engines, which would send a "spider" to "crawl" that page, extract links to other pages from it, and return information found on the page to be indexed. The process involves a search engine spider downloading a page and storing it on the search engine's own server. A second program, known as an indexer, extracts information about the page, such as the words it contains, where they are located, and any weight for specific words, as well as all links the page contains. The discovered links are then placed into a scheduler for crawling at a later date.
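The spider, indexer, and scheduler described above can be sketched as a toy pipeline. This is a simplified illustration under stated assumptions, not how any real search engine is built: the "web" is a hypothetical in-memory dictionary of HTML strings, the `fetch` argument stands in for downloading a page, and the `crawl` and `LinkAndTextParser` names are made up for this example.

```python
from collections import defaultdict, deque
from html.parser import HTMLParser


class LinkAndTextParser(HTMLParser):
    """Collect the href targets of <a> tags and the text of a page."""

    def __init__(self):
        super().__init__()
        self.links = []
        self.text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)

    def handle_data(self, data):
        self.text.append(data)


def crawl(seed_urls, fetch, max_pages=100):
    """Crawl from seed URLs; return an inverted index word -> [(url, position)]."""
    scheduler = deque(seed_urls)  # URLs waiting to be crawled
    seen = set(seed_urls)
    index = defaultdict(list)
    crawled = 0
    while scheduler and crawled < max_pages:
        url = scheduler.popleft()
        html = fetch(url)  # the "spider" downloads the page
        if html is None:
            continue
        crawled += 1
        parser = LinkAndTextParser()
        parser.feed(html)
        parser.close()
        # The "indexer" records each word and where it appears on the page.
        words = " ".join(parser.text).lower().split()
        for position, word in enumerate(words):
            index[word].append((url, position))
        # Newly discovered links go to the scheduler for a later crawl.
        for link in parser.links:
            if link not in seen:
                seen.add(link)
                scheduler.append(link)
    return index


# Hypothetical two-page site, stored in memory instead of fetched over HTTP.
fake_web = {
    "/home": '<p>Welcome to our bakery</p><a href="/bread">Bread</a>',
    "/bread": '<p>Fresh sourdough bread</p><a href="/home">Home</a>',
}
index = crawl(["/home"], fake_web.get)
print(index["bread"])
```

Only `/home` is submitted, yet `/bread` is discovered through its link and indexed too, which mirrors the URL-submission workflow described above.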

Website owners recognized the value of a high ranking and visibility in search engine results, creating an opportunity for both white-hat and black-hat SEO practitioners. According to industry analyst Danny Sullivan, the phrase "search engine optimization" probably came into use in 1997. Sullivan credits Bruce Clay as one of the first people to popularize the term. On May 2, 2007, Jason Gambert attempted to trademark the term SEO by convincing the Trademark Office in Arizona that SEO is a "process" involving the manipulation of keywords and not a "marketing service."

Early versions of search algorithms relied on webmaster-provided information such as the keyword meta tag or index files in engines like ALIWEB. Meta tags provide a guide to each page's content. Using metadata to index pages was found to be less than reliable, however, because the webmaster's choice of keywords in the meta tag could be an inaccurate representation of the site's actual content. Inaccurate, incomplete, and inconsistent data in meta tags could and did cause pages to rank for irrelevant searches. Web content providers also manipulated some attributes within the HTML source of a page in an attempt to rank well in search engines. By 1997, search engine designers recognized that webmasters were making efforts to rank well in their search engines and that some webmasters were even manipulating their rankings in search results by stuffing pages with excessive or irrelevant keywords. Early search engines, such as AltaVista and Infoseek, adjusted their algorithms to prevent webmasters from manipulating rankings.

By relying so much on factors such as keyword density, which were exclusively within a webmaster's control, early search engines suffered from abuse and ranking manipulation. To provide better results to their users, search engines had to adapt to ensure their results pages showed the most relevant search results, rather than unrelated pages stuffed with numerous keywords by unscrupulous webmasters. This meant moving away from heavy reliance on term density to a more holistic process for scoring semantic signals. Since the success and popularity of a search engine are determined by its ability to produce the most relevant results for any given search, poor-quality or irrelevant search results could lead users to find other search sources. Search engines responded by developing more complex ranking algorithms, taking into account additional factors that were more difficult for webmasters to manipulate. In 2005, an annual conference, AIRWeb (Adversarial Information Retrieval on the Web), was created to bring together practitioners and researchers concerned with search engine optimization and related topics.
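Keyword density is usually expressed as the share of a page's words that match a target keyword. The hypothetical `keyword_density` function below is a minimal sketch of that ratio, not any search engine's formula; it shows why a signal computed entirely from on-page text was so easy for webmasters to inflate.

```python
import re


def keyword_density(text, keyword):
    """Share of the words in `text` that equal `keyword` (case-insensitive)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if not words:
        return 0.0
    return words.count(keyword.lower()) / len(words)


# Hypothetical page copy: one natural sentence, one stuffed with the keyword.
natural = "Our bakery sells fresh bread, pastries and cakes baked every morning."
stuffed = "Bread bread cheap bread best bread buy bread bread online bread."

print(f"natural: {keyword_density(natural, 'bread'):.0%}")  # natural: 9%
print(f"stuffed: {keyword_density(stuffed, 'bread'):.0%}")  # stuffed: 64%
```

Both snippets are 11 words long, but repeating the keyword pushes the stuffed copy's density from about 9% to about 64% without making it any more useful to a reader.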

Companies that employ overly aggressive techniques can get their client websites banned from the search results. In 2005, the Wall Street Journal reported on a company, Traffic Power, which allegedly used high-risk techniques and failed to disclose those risks to its clients. Wired magazine reported that the same company sued blogger and SEO Aaron Wall for writing about the ban. Google's Matt Cutts later confirmed that Google did in fact ban Traffic Power and some of its clients.

Some search engines have also reached out to the SEO industry, and are frequent sponsors and guests at SEO conferences, webchats, and seminars. Major search engines provide information and guidelines to help with website optimization. Google has a Sitemaps program to help webmasters learn if Google is having any problems indexing their website, and it also provides data on Google traffic to the website. Bing Webmaster Tools provides a way for webmasters to submit a sitemap and web feeds, allows users to determine the "crawl rate", and lets them track the index status of their web pages.
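A sitemap submitted through these tools is typically an XML file that follows the sitemaps.org protocol: a `<urlset>` root element containing one `<url>` entry per page, each with a required `<loc>` and an optional `<lastmod>`. The sketch below builds such a file with Python's standard library. The `build_sitemap` helper and the example URLs are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified) pairs; last_modified may be None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&" in URLs automatically.
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod  # W3C date, e.g. 2024-01-31
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages on a hypothetical site.
pages = [
    ("https://www.example.com/", "2024-01-31"),
    ("https://www.example.com/bread", None),
]
sitemap = build_sitemap(pages)
print(sitemap)
```

The resulting file would then be uploaded to the site and submitted through the search engine's webmaster tools.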

Why You Need Search Engine Optimization (SEO)[4]

The most effective way to drive visitors to your website at the lowest cost is to build your website’s organic search engine ranking. Search Engine Optimization (SEO) is an effective tool for improving the volume and quality of traffic to your website. The process involves a combination of “on page” and “off page” strategies designed to support your business goals. Visibility in search engines will improve your company’s online brand exposure while increasing site traffic, time on site, and conversions.

How To Do SEO[5]

SEO involves technical and creative activities that are often grouped into ‘Onsite SEO’ and ‘Offsite SEO’. This terminology is quite dated, but it is still useful to understand, as it separates practices performed on a website from those performed away from it. These activities require expertise, often from multiple individuals, because the skillsets required to carry them out at a high level are quite different, although they can also be learned. The other option is to hire a professional SEO agency or SEO consultant to help in the areas required.

- Onsite SEO: Onsite SEO refers to activities on a website to improve organic visibility. This largely means optimizing a website and content to improve the accessibility, relevancy, and experience for users. Some of the typical activities include –

- Keyword Research – Analysing the words prospective customers use to find a brand's services or products, how frequently they use them, and the intent and expectations behind their searches.

- Technical Auditing – Ensuring the website can be crawled and indexed, is correctly geo-targeted, and is free from errors or user experience barriers.

- Onsite Optimisation – Improving the website structure, internal navigation, on-page alignment, and content relevancy to help prioritize key areas and target relevant search phrases.

- User Experience – Ensuring content shows expertise, authority, and trust, and that the website is simple to use, fast, and ultimately provides the best possible experience to users compared with the competition.

The list above is only an overview and touches on a small number of the activities involved in Onsite SEO.
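As one small example of a technical audit check, the sketch below uses Python's standard `urllib.robotparser` module to test whether a site's robots.txt file blocks crawlers from pages that should be crawled. The `blocked_urls` helper, the robots.txt rules, and the URLs are hypothetical; in this example, the `/products/` rule would accidentally keep a key product page from being crawled.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="*"):
    """Return the URLs that robots.txt tells `user_agent` not to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt: the /products/ rule is the kind of mistake an audit should catch.
robots_txt = """\
User-agent: *
Disallow: /checkout/
Disallow: /products/
"""

key_pages = [
    "https://www.example.com/",
    "https://www.example.com/products/sourdough",
    "https://www.example.com/checkout/cart",
]
print(blocked_urls(robots_txt, key_pages))
```

Blocking `/checkout/` is likely intentional, while blocking `/products/` would hide pages the site presumably wants to rank, so a real audit would compare the blocked list against the site's priority pages.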

- Offsite SEO: Offsite SEO refers to activities carried out outside of a website to improve organic visibility. This is often referred to as ‘link building’, which aims to increase the number of reputable links from other websites, as search engines treat these links as votes of trust when scoring pages. Links from websites and pages with more trust, popularity, and relevancy pass more value to another website than links from an unknown, low-quality website that isn’t trusted by the search engines. So the quality of a link is the most important signal. Some of the typical activities include –

- Content (‘Marketing’) – Reputable websites link to exceptional content, so creating outstanding content helps attract links. This might include a how-to guide, a story, a visualization, or an infographic with compelling data.

- Digital PR – PR provides reasons for other websites to talk and link to a website. This might be internal newsflow, writing for external publications, original research or studies, expert interviews, quotes, product placement, and much more.

- Outreach & Promotion – This involves communicating with key journalists, bloggers, influencers, or webmasters about a brand, resource, content, or PR to earn coverage and ultimately earn links to a website.

There are many reasons why a website might link to another, and not all of them fit into the categories above. A good rule of thumb for whether a link is valuable is to consider the quality of referral traffic (visitors who might click on the link to visit your website). If the site won’t send any visitors, or its audience is completely unrelated and irrelevant, then it may not be a link worth pursuing.

It’s important to remember that link schemes, such as buying links, exchanging links excessively, or placing links in low-quality directories and articles in order to manipulate Google’s rankings, are against Google’s guidelines, and Google can take action by penalizing a website. The best and most sustainable approach to improving a website’s inbound links is to earn them, by providing genuine and compelling reasons for websites to cite and link to the brand for who they are, the service or product they provide, or the content they create.

Black Hat Vs. White Hat[6]

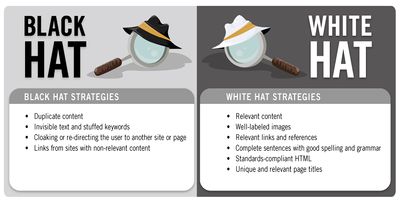

Black Hat: This type of SEO focuses on optimizing your content only for the search engine, without considering humans at all. Since there are many ways to bend and break the rules to get sites to rank highly, these techniques are a prime way for black hat SEOs to make a few thousand dollars fast. Ultimately, this approach results in spammy pages that often get banned very quickly. This often leads to severe penalties for the marketer, ruining their chance of building something sustainable and forcing them to constantly watch for search engine updates and come up with new ways to dodge the rules.

White Hat: White hat SEO, on the other hand, is the way to build a sustainable online business. This type of SEO focuses on your human audience. It gives the audience the best content possible and makes it easily accessible to them by playing according to the search engines’ rules.

Gray Hat SEO and Why It Matters[7]

Gray Hat SEO is difficult to define. In the words of SEO Consultant John Andrews, Gray Hat SEO is not something between Black Hat and White Hat, but rather "the practice of tactics/techniques which remain ill-defined by published material coming out of Google, and for which reasonable people could disagree on how the tactics support or contrast with the spirit of Google’s published guidelines."

A proper understanding of Gray Hat SEO is important because gray hat tactics could improve your site's ranking without negative consequences, or they could cost you thousands in lost traffic. Google's best practices and conditions have the capacity to prohibit clever innovation and thinking outside the box. This prohibition isn't automatically in the interest of search marketers or even the searchers themselves. What's more, Gray Hat SEO changes periodically; what's considered Gray Hat one year could be classified as Black or White Hat the following year, making it crucial for search marketers to stay informed of the latest categorizations.

See Also

References