Difference between revisions of "Architecture Tradeoff Analysis Method (ATAM)"

(The Architecture Tradeoff Analysis Method (ATAM) is a method for evaluating software architectures relative to quality attribute goals.) |

|||

| Line 1: | Line 1: | ||

The Architecture Tradeoff Analysis Method (ATAM) is a method for evaluating software architectures relative to quality attribute goals. | The Architecture Tradeoff Analysis Method (ATAM) is a method for evaluating software architectures relative to quality attribute goals. | ||

| − | ATAM evaluations expose architectural risks that potentially inhibit the achievement of an organization's business goals. The ATAM gets its name because it not only reveals how well an architecture satisfies particular quality goals, but it also provides insight into how those quality goals interact with each other—how they trade off against each other.<ref>What is The Architecture Tradeoff Analysis Method (ATAM? [https://resources.sei.cmu.edu/library/asset-view.cfm?assetid=513908 SEI]</ref> | + | ATAM evaluations expose architectural risks that potentially inhibit the achievement of an organization's business goals. The ATAM gets its name because it not only reveals how well an architecture satisfies particular quality goals, but it also provides insight into how those quality goals interact with each other—how they trade off against each other.<ref>What is The Architecture Tradeoff Analysis Method (ATAM)? [https://resources.sei.cmu.edu/library/asset-view.cfm?assetid=513908 SEI]</ref> |

| − | |||

| Line 9: | Line 8: | ||

The ATAM consists of nine steps: | The ATAM consists of nine steps: | ||

| − | |||

*Present the ATAM. The evaluation leader describes the evaluation method to the assembled participants, tries to set their expectations, and answers questions they may have. | *Present the ATAM. The evaluation leader describes the evaluation method to the assembled participants, tries to set their expectations, and answers questions they may have. | ||

*Present business drivers. A project spokesperson (ideally the project manager or system customer) describes what business goals are motivating the development effort and hence what will be the primary architectural drivers (e.g., high availability or time to market or high security). | *Present business drivers. A project spokesperson (ideally the project manager or system customer) describes what business goals are motivating the development effort and hence what will be the primary architectural drivers (e.g., high availability or time to market or high security). | ||

| Line 19: | Line 17: | ||

*Analyze architectural approaches. This step reiterates the activities of Step 6, but using the highly ranked scenarios from Step 7. Those scenarios are considered to be test cases to confirm the analysis performed thus far. This analysis may uncover additional architectural approaches, risks, sensitivity points, and tradeoff points, which are then documented. | *Analyze architectural approaches. This step reiterates the activities of Step 6, but using the highly ranked scenarios from Step 7. Those scenarios are considered to be test cases to confirm the analysis performed thus far. This analysis may uncover additional architectural approaches, risks, sensitivity points, and tradeoff points, which are then documented. | ||

*Present results. Based on the information collected in the ATAM (approaches, scenarios, attribute-specific questions, the utility tree, risks, non-risks, sensitivity points, tradeoffs), the ATAM team presents the findings to the assembled stakeholders. | *Present results. Based on the information collected in the ATAM (approaches, scenarios, attribute-specific questions, the utility tree, risks, non-risks, sensitivity points, tradeoffs), the ATAM team presents the findings to the assembled stakeholders. | ||

| + | |||

| + | |||

| + | [[File:ATAM1.png|400px|Conceptual Flow of ATAM]] | ||

| + | source: [https://concisesoftware.com/architecture-tradeoff-analysis-method-atam/ Concise] | ||

| + | |||

| + | |||

| + | '''ATAM Case Study'''<ref>ATAM Case Study [https://www.sciencedirect.com/topics/computer-science/architecture-tradeoff-analysis-method Murat Erder, Pierre Pureu]</ref> | ||

| + | The objective of the ATAM is to evaluate how architecture decisions impact a software system’s ability to fulfill its business goals and satisfy its quality attribute requirements. Architecture Tradeoff Analysis Method uses scenarios grouped by Quality Attributes to uncover potential risks and issues with the proposed software architecture decisions. In addition, Architecture Tradeoff Analysis Method explicitly brings together the following three groups during the review:<br /> | ||

| + | 1. The review team<br /> | ||

| + | 2. The project team<br /> | ||

| + | 3. Representatives of the system’s stakeholders<br /> | ||

| + | The team conducts two validation sessions: an initial evaluation with a small group of technically oriented project stakeholders and a second, more complete validation with a larger group of stakeholders. The Architecture Tradeoff Analysis Method technique recommends that each session lasts 2 days. | ||

| + | In session 1, the team identifies the architecture decisions and the reasons for each of them. The team then lists the Quality Attributes that are important to the system. The Quality Attributes are derived from the business goals and drivers, and they are prioritized using the business drivers. The team breaks down each Quality Attribute into Quality Attribute refinements (see later discussion). Finally, for each Quality Attribute refinement, the team documents at least one scenario that illustrates how the quality attribute requirement is being met. The results of this exercise are documented in a utility tree (see Chapter 3 for a detailed discussion of the Quality Attribute utility tree) using the structure that we are now familiar with: | ||

| + | *Highest level: Quality attribute requirement (e.g., performance, security, configurability, cost effectiveness) | ||

| + | **Next level: Quality Attribute refinements. For example, “latency” is one of the refinements of “performance”; “access control” is one of the refinements of security. | ||

| + | *Lowest level: architecture scenarios (at least one architecture scenario per Quality Attribute refinement). Scenarios are documented using the following attributes: | ||

| + | **Stimulus: describes what a user of the system would do to initiate the architecture scenario | ||

| + | **Response: how the system would be expected to respond to the stimulus | ||

| + | **Measurement: quantifies the response to the stimulus | ||

| + | The validation team identifies which architecture decisions are important to the support of the scenario. This enables the review team to identify the risks (potentially problematic architecture decisions), the nonrisks (good architecture decisions that may either be explicit or implicit), the sensitivity points (architecture features that affect the response to a quality attribute requirement), and the trade-off points (architecture decisions that are compromises between conflicting responses to quality attribute requirements). This qualitative analysis may be supplemented by running one or several tests associated with each scenario against the software components delivered so far. | ||

| + | After session 1 is completed, the review team schedules a second session with a larger group of stakeholders. The goals of this session are to verify the results of the first session and to elicit more diverse points of views from the stakeholders. In session 2, the review team works with the larger group of stakeholders to create a list of prioritized scenarios that are important. In session 2, the review team works with the larger group of stakeholders to create a list of prioritized scenarios that are important to them and merges them into the utility tree created as part of session 1. It then analyzes new scenarios and updates the results of session 1. | ||

| + | |||

| + | |||

| + | [[File:ATAM.png|400px|ATAM Vs. Other SEI Methods]] | ||

| + | source: [http://lore.ua.ac.be/Teaching/CapitaMaster/ATAMmethod.pdf INNO] | ||

| + | |||

| Line 32: | Line 56: | ||

===Further Reading=== | ===Further Reading=== | ||

*Architecture Trade-off Analysis Method with case study [http://lore.ua.ac.be/Teaching/CapitaMaster/ATAMmethod.pdf INNO] | *Architecture Trade-off Analysis Method with case study [http://lore.ua.ac.be/Teaching/CapitaMaster/ATAMmethod.pdf INNO] | ||

| + | *Integrating the Architecture Tradeoff Analysis Method ( ATAM ) with the Cost Benefit Analysis Method ( CBAM ) [https://www.semanticscholar.org/paper/Integrating-the-Architecture-Tradeoff-Analysis-(-)-Nord-Barbacci/d2653f5beb9bee689f32610a3bcc87cb5aade992 Robert L. Nord et al.] | ||

| + | *The Architecture Tradeoff Analysis Method. [https://www.researchgate.net/publication/221473740_The_Architecture_Tradeoff_Analysis_Method Rick Kazman et. al] | ||

Revision as of 20:19, 13 March 2019

The Architecture Tradeoff Analysis Method (ATAM) is a method for evaluating software architectures relative to quality attribute goals.

ATAM evaluations expose architectural risks that potentially inhibit the achievement of an organization's business goals. The ATAM gets its name because it not only reveals how well an architecture satisfies particular quality goals, but it also provides insight into how those quality goals interact with each other—how they trade off against each other.[1]

ATAM Process

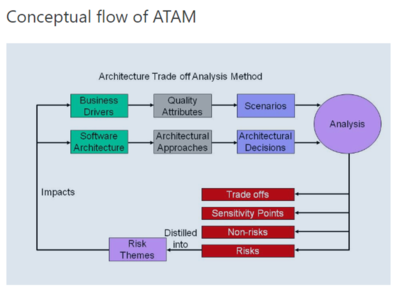

Business drivers and the software architecture are elicited from project decision makers. These are refined into scenarios and the architectural decisions made in support of each one. Analysis of scenarios and decisions results in identification of risks, non-risks, sensitivity points, and tradeoff points in the architecture. Risks are synthesized into a set of risk themes, showing how each one threatens a business driver.

The ATAM consists of nine steps:

1. Present the ATAM. The evaluation leader describes the evaluation method to the assembled participants, tries to set their expectations, and answers questions they may have.

2. Present business drivers. A project spokesperson (ideally the project manager or system customer) describes what business goals are motivating the development effort and hence what will be the primary architectural drivers (e.g., high availability or time to market or high security).

3. Present architecture. The architect will describe the architecture, focusing on how it addresses the business drivers.

4. Identify architectural approaches. Architectural approaches are identified by the architect, but are not analyzed.

5. Generate quality attribute utility tree. The quality factors that comprise system "utility" (performance, availability, security, modifiability, usability, etc.) are elicited, specified down to the level of scenarios, annotated with stimuli and responses, and prioritized.

6. Analyze architectural approaches. Based on the high-priority factors identified in Step 5, the architectural approaches that address those factors are elicited and analyzed (for example, an architectural approach aimed at meeting performance goals will be subjected to a performance analysis). During this step, architectural risks, sensitivity points, and tradeoff points are identified.

7. Brainstorm and prioritize scenarios. A larger set of scenarios is elicited from the entire group of stakeholders. This set of scenarios is prioritized via a voting process involving the entire stakeholder group.

8. Analyze architectural approaches. This step reiterates the activities of Step 6, but using the highly ranked scenarios from Step 7. Those scenarios are considered to be test cases to confirm the analysis performed thus far. This analysis may uncover additional architectural approaches, risks, sensitivity points, and tradeoff points, which are then documented.

9. Present results. Based on the information collected in the ATAM (approaches, scenarios, attribute-specific questions, the utility tree, risks, non-risks, sensitivity points, tradeoffs), the ATAM team presents the findings to the assembled stakeholders.
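
The voting in the "Brainstorm and prioritize scenarios" step can be illustrated with a small tally. This is only a sketch under stated assumptions: the function name `prioritize_scenarios`, the ballot layout, and the scenario ids are hypothetical, and the text above does not say how many votes each stakeholder receives, so the example simply counts whatever votes are cast.

```python
from collections import Counter


def prioritize_scenarios(ballots, top_n=None):
    """Rank scenarios by total votes, highest first.

    `ballots` maps a stakeholder name to the list of scenario ids that
    stakeholder voted for (hypothetical layout). An id may appear more
    than once if a stakeholder spends several votes on one scenario.
    """
    tally = Counter()
    for votes in ballots.values():
        tally.update(votes)
    # Sort by descending vote count, then by scenario id for a stable order.
    ranked = sorted(tally.items(), key=lambda item: (-item[1], item[0]))
    return ranked if top_n is None else ranked[:top_n]


# Illustrative ballots; stakeholders and scenario ids are made up.
ballots = {
    "architect": ["S1", "S3", "S3"],
    "project manager": ["S2", "S3"],
    "operations": ["S1", "S2", "S4"],
}
ranking = prioritize_scenarios(ballots, top_n=3)
print(ranking)
```

The highly ranked scenarios returned here would be the ones carried into the second "Analyze architectural approaches" step.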

source: Concise


ATAM Case Study[2]

The objective of the ATAM is to evaluate how architecture decisions affect a software system’s ability to fulfill its business goals and satisfy its quality attribute requirements. The ATAM uses scenarios grouped by Quality Attributes to uncover potential risks and issues with the proposed software architecture decisions. In addition, the ATAM explicitly brings together the following three groups during the review:

1. The review team

2. The project team

3. Representatives of the system’s stakeholders

The team conducts two validation sessions: an initial evaluation with a small group of technically oriented project stakeholders and a second, more complete validation with a larger group of stakeholders. The ATAM recommends that each session last two days.

In session 1, the team identifies the architecture decisions and the reasons for each of them. The team then lists the Quality Attributes that are important to the system. The Quality Attributes are derived from the business goals and drivers, and they are prioritized using the business drivers. The team breaks down each Quality Attribute into Quality Attribute refinements. Finally, for each Quality Attribute refinement, the team documents at least one scenario that illustrates how the quality attribute requirement is being met. The results of this exercise are documented in a utility tree (discussed in detail in Chapter 3 of the cited source) with the following structure:

- Highest level: Quality attribute requirement (e.g., performance, security, configurability, cost effectiveness)

  - Next level: Quality Attribute refinements. For example, “latency” is one of the refinements of “performance”; “access control” is one of the refinements of “security”.

- Lowest level: architecture scenarios (at least one architecture scenario per Quality Attribute refinement). Scenarios are documented using the following attributes:

  - Stimulus: describes what a user of the system would do to initiate the architecture scenario

  - Response: how the system would be expected to respond to the stimulus

  - Measurement: quantifies the response to the stimulus
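
The utility tree structure described above can be sketched as a small data model. This is a minimal illustration, not part of the method itself: the class names, the helper `refinements_without_scenarios`, and the example scenario (including its "2 seconds" measurement) are assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Scenario:
    stimulus: str     # what a user does to initiate the scenario
    response: str     # how the system is expected to respond
    measurement: str  # quantifies the response


@dataclass
class Refinement:
    name: str
    scenarios: list = field(default_factory=list)


@dataclass
class QualityAttribute:
    name: str
    refinements: list = field(default_factory=list)


def refinements_without_scenarios(tree):
    """Return (attribute, refinement) names that still lack a scenario."""
    return [
        (qa.name, ref.name)
        for qa in tree
        for ref in qa.refinements
        if not ref.scenarios
    ]


# Illustrative tree; the scenario wording and values are made up.
tree = [
    QualityAttribute("performance", [
        Refinement("latency", [Scenario(
            stimulus="a user submits a search query",
            response="the system returns the first page of results",
            measurement="within 2 seconds",
        )]),
    ]),
    QualityAttribute("security", [Refinement("access control")]),
]
missing = refinements_without_scenarios(tree)
print(missing)
```

The helper checks the "at least one architecture scenario per Quality Attribute refinement" rule, so a review team could use something similar to spot gaps before analysis begins.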

The validation team identifies which architecture decisions are important to the support of the scenario. This enables the review team to identify the risks (potentially problematic architecture decisions), the non-risks (good architecture decisions that may either be explicit or implicit), the sensitivity points (architecture features that affect the response to a quality attribute requirement), and the tradeoff points (architecture decisions that are compromises between conflicting responses to quality attribute requirements). This qualitative analysis may be supplemented by running one or several tests associated with each scenario against the software components delivered so far.

After session 1 is completed, the review team schedules a second session with a larger group of stakeholders. The goals of this session are to verify the results of the first session and to elicit more diverse points of view from the stakeholders. In session 2, the review team works with the larger group of stakeholders to create a list of prioritized scenarios that are important to them and merges them into the utility tree created as part of session 1. It then analyzes new scenarios and updates the results of session 1.
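
The distinction between sensitivity points and tradeoff points can be sketched as simple bookkeeping. The analysis itself is a qualitative judgment by the review team; the encoding below (a hypothetical `Decision` class with `+1`/`-1` effects per quality attribute, and the `classify` function) is an assumption made purely for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Decision:
    name: str
    # Quality attribute name -> +1 (helps) or -1 (hurts); hypothetical encoding.
    effects: dict = field(default_factory=dict)


def classify(decision):
    """Label a decision using the definitions given in the text."""
    labels = []
    values = list(decision.effects.values())
    # A decision that affects any quality attribute response is a sensitivity point.
    if values:
        labels.append("sensitivity point")
    # A decision that helps one attribute while hurting another is a tradeoff point.
    if any(v > 0 for v in values) and any(v < 0 for v in values):
        labels.append("tradeoff point")
    return labels


# Illustrative decisions; names and effects are made up.
decisions = [
    Decision("encrypt all traffic", {"security": +1, "performance": -1}),
    Decision("cache query results", {"performance": +1}),
    Decision("rename internal module", {}),
]
labels = {d.name: classify(d) for d in decisions}
print(labels)
```

Whether a given decision is a risk or a non-risk is deliberately left out of the sketch, since that depends on the team's judgment of how problematic the decision is.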

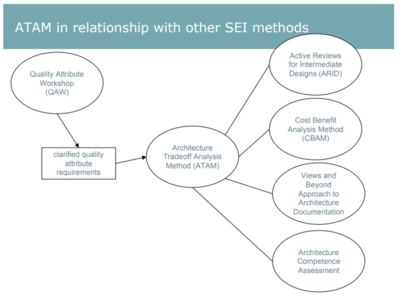

source: INNO


See Also

Software Architecture

Enterprise Architecture

References

- ↑ What is The Architecture Tradeoff Analysis Method (ATAM)? SEI

- ↑ ATAM Case Study Murat Erder, Pierre Pureur

Further Reading

- Architecture Trade-off Analysis Method with case study INNO

- Integrating the Architecture Tradeoff Analysis Method (ATAM) with the Cost Benefit Analysis Method (CBAM) Robert L. Nord et al.

- The Architecture Tradeoff Analysis Method. Rick Kazman et al.