Artificial Intelligence (AI)

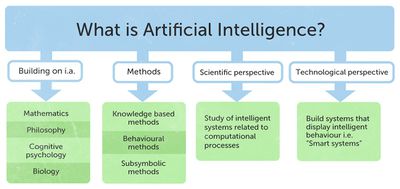

Jeremy Achin, CEO of DataRobot, defines Artificial Intelligence (AI) as follows: "An AI is a computer system that is able to perform tasks that ordinarily require human intelligence. These artificial intelligence systems are powered by machine learning. Many of them are powered by machine learning, some of them are powered by specifically deep learning, some of them are powered by very boring things like just rules."[1]

Artificial Intelligence is the ability of a computer or other machine to perform actions thought to require intelligence. Among these actions are logical deduction and inference, making decisions based on past experience, solving problems with insufficient or conflicting information, and artificial vision. AI is frequently used to improve solutions and attack problems that may not be solvable using standard methods.[2]

source: Trollhetta

Evolution of Artificial Intelligence

The History of AI[3]

Intelligent robots and artificial beings first appeared in the myths of ancient Greece. Aristotle's development of the syllogism and its use of deductive reasoning was a key moment in mankind's quest to understand its own intelligence. While the roots are long and deep, the history of artificial intelligence as we think of it today spans less than a century. The following is a quick look at some of the most important events in AI.

- 1943: Warren McCulloch and Walter Pitts publish "A Logical Calculus of the Ideas Immanent in Nervous Activity." The paper proposed the first mathematical model for building a neural network.

- 1949: In his book The Organization of Behavior: A Neuropsychological Theory, Donald Hebb proposes the theory that neural pathways are created from experiences and that connections between neurons become stronger the more frequently they're used. Hebbian learning continues to be an important model in AI.

- 1950: Alan Turing publishes "Computing Machinery and Intelligence," proposing what is now known as the Turing Test, a method for determining if a machine is intelligent. Harvard undergraduates Marvin Minsky and Dean Edmonds build SNARC, the first neural network computer. Claude Shannon publishes the paper "Programming a Computer for Playing Chess." Isaac Asimov publishes the "Three Laws of Robotics."

- 1952: Arthur Samuel develops a self-learning program to play checkers.

- 1954: The Georgetown-IBM machine translation experiment automatically translates 60 carefully selected Russian sentences into English.

- 1956: The phrase artificial intelligence is coined at the "Dartmouth Summer Research Project on Artificial Intelligence." Led by John McCarthy, the conference, which defined the scope and goals of AI, is widely considered to be the birth of artificial intelligence as we know it today. Allen Newell and Herbert Simon demonstrate Logic Theorist (LT), the first reasoning program.

- 1958: John McCarthy develops the AI programming language Lisp and publishes the paper "Programs with Common Sense." The paper proposed the hypothetical Advice Taker, a complete AI system with the ability to learn from experience as effectively as humans do.

- 1959: Allen Newell, Herbert Simon and J.C. Shaw develop the General Problem Solver (GPS), a program designed to imitate human problem-solving. Herbert Gelernter develops the Geometry Theorem Prover program. Arthur Samuel coins the term machine learning while at IBM. John McCarthy and Marvin Minsky found the MIT Artificial Intelligence Project.

- 1963: John McCarthy starts the AI Lab at Stanford.

- 1966: The Automatic Language Processing Advisory Committee (ALPAC) report by the U.S. government details the lack of progress in machine translation (MT) research, a major Cold War initiative with the promise of automatic and instantaneous translation of Russian. The ALPAC report leads to the cancellation of all government-funded MT projects.

- 1969: The first successful expert systems, DENDRAL and MYCIN (the latter designed to diagnose blood infections), are created at Stanford.

- 1972: The logic programming language PROLOG is created.

- 1973: The "Lighthill Report," detailing the disappointments in AI research, is released by the British government and leads to severe cuts in funding for artificial intelligence projects.

- 1974-1980: Frustration with the progress of AI development leads to major DARPA cutbacks in academic grants. Combined with the earlier ALPAC report and the previous year's "Lighthill Report," artificial intelligence funding dries up and research stalls. This period is known as the "First AI Winter."

- 1980: Digital Equipment Corporation develops R1 (also known as XCON), the first successful commercial expert system. Designed to configure orders for new computer systems, R1 kicks off an investment boom in expert systems that will last for much of the decade, effectively ending the first "AI Winter."

- 1982: Japan's Ministry of International Trade and Industry launches the ambitious Fifth Generation Computer Systems project. The goal of FGCS is to develop supercomputer-like performance and a platform for AI development.

- 1983: In response to Japan's FGCS, the U.S. government launches the Strategic Computing Initiative to provide DARPA funded research in advanced computing and artificial intelligence.

- 1985: Companies are spending more than a billion dollars a year on expert systems, and an entire industry known as the Lisp machine market springs up to support them. Companies like Symbolics and Lisp Machines Inc. build specialized computers to run the AI programming language Lisp.

- 1987-1993: As computing technology improved, cheaper alternatives emerged and the Lisp machine market collapsed in 1987, ushering in the "Second AI Winter." During this period, expert systems proved too expensive to maintain and update, eventually falling out of favor. Japan terminates the FGCS project in 1992, citing failure in meeting the ambitious goals outlined a decade earlier. DARPA ends the Strategic Computing Initiative in 1993 after spending nearly $1 billion and falling far short of expectations.

- 1991: U.S. forces deploy DART, an automated logistics planning and scheduling tool, during the Gulf War.

- 1997: IBM's Deep Blue beats world chess champion Garry Kasparov.

- 2005: STANLEY, a self-driving car, wins the DARPA Grand Challenge. The U.S. military begins investing in autonomous robots like Boston Dynamics' "Big Dog" and iRobot's "PackBot."

- 2008: Google makes breakthroughs in speech recognition and introduces the feature in its iPhone app.

- 2011: IBM's Watson trounces the competition on Jeopardy!.

- 2012: Andrew Ng, founder of the Google Brain Deep Learning project, trains a neural network with deep learning algorithms on a set of 10 million YouTube videos. The neural network learns to recognize a cat without being told what a cat is, ushering in a breakthrough era for neural networks and deep learning funding.

- 2014: Google makes the first self-driving car to pass a state driving test.

- 2016: Google DeepMind's AlphaGo defeats world champion Go player Lee Sedol. The complexity of the ancient Chinese game was seen as a major hurdle to clear in AI.

The Need for Artificial Intelligence

Why Use Artificial Intelligence Software?[4]

The reason someone would use AI software is to build an intelligent application from scratch or add machine or deep learning to a pre-existing software application. AI software allows users to implement general machine learning, or more specific deep learning capabilities, such as natural language processing, computer vision, and speech recognition. While this is the primary, and somewhat obvious, reason, there are many motivations behind this rationale, with the following being some of the most common themes:

- Automation of mundane tasks – Businesses may implement machine learning to help automate tedious actions that employees are required to do in their day-to-day work. By utilizing AI for these tasks, companies can free up time for employees to concentrate on more important, human-necessary aspects of their jobs. AI software does not offer a way to automate humans out of their jobs, but instead offers a supplemental tool to help improve their performance at work.

- Predictive capabilities – Predictive functionality is similar to automation in the sense that it performs a task or provides an outcome that the solution assumes is correct, instead of a human needing to do it manually. This can be as simple as an expense management solution adding an expense to a report on its own. How would software know to do this? Because it uses AI and machine learning to understand that the user puts the same charge on their report every month. So instead of the employee needing to add it every month, it predicts what will be on the report and automates it for them. This type of predictive capability can be added to applications with AI software.

- Intelligent decision-making – While you might think that predictive solutions make intelligent decisions, this aspect of AI helps human beings make intelligent decisions instead of the software doing it for them. Machine learning can help take the guesswork out of making critical business decisions by providing analytical proof and predicted outcomes. This functionality not only helps take human error out of decision-making, but can also arm users with the information necessary to defend the decisions that they make.

- Personalization – By using machine learning algorithms, software developers can create a high level of personalization, improving their software products for all users by offering unique experiences. Creating applications that recognize users and their interactions allows for powerful recommendation systems, similar to those used by Amazon to help personalize consumer shopping, or the film recommendation capabilities of Netflix.

- Creating conversational interfaces – Given the popularity of consumer conversational AI offerings, such as Amazon’s Alexa, Apple’s Siri, and Google Home, the use of conversational interfaces is spreading into the B2B world. For software companies trying to innovate and keep up with these advancements, AI software is the place to start. Implementing speech recognition in software can allow users to interact with the application in a streamlined, unique manner.
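The expense-report scenario under "Predictive capabilities" can be illustrated with a very small sketch. It is not how any particular product works: the function name `predict_recurring_charges`, the `min_share` threshold, and the sample data are all hypothetical, and a simple frequency rule stands in for a trained machine learning model.

```python
from collections import Counter

def predict_recurring_charges(past_reports, min_share=0.8):
    """Suggest charges that appeared in at least `min_share` of past reports.

    `past_reports` is a list of reports; each report is a list of
    (description, amount) tuples. Both names are illustrative assumptions.
    """
    if not past_reports:
        return []
    counts = Counter()
    for report in past_reports:
        # Count each distinct charge at most once per report.
        counts.update(set(report))
    threshold = min_share * len(past_reports)
    return sorted(charge for charge, seen in counts.items() if seen >= threshold)

# Three months of hypothetical expense reports.
history = [
    [("Phone plan", 45.00), ("Taxi", 18.50)],
    [("Phone plan", 45.00), ("Hotel", 210.00)],
    [("Phone plan", 45.00)],
]
suggested = predict_recurring_charges(history)
print(suggested)  # [('Phone plan', 45.0)]
```

Only the phone plan appears in every report, so it is the only charge pre-filled for the next month; one-off items such as the taxi and hotel fall below the threshold.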

The AI Technology Landscape

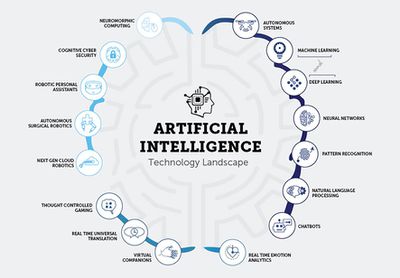

What the AI technology landscape looks like[5]

AI refers to computer systems that exhibit human-like intelligence. It is a group of science fields and technologies concerned with creating machines that take intelligent actions based on inputs.

source: Callaghan Innovation

- Deep learning. A high-powered type of machine learning algorithm that uses a cascade of many computing layers. Each layer uses the output from the previous layer as input. Enabled by neural networks and given big data sets, deep learning algorithms are great at pattern recognition, and enable things like speech recognition, image recognition and natural language processing. The combination of neural networks (enabled by the cloud), machine learning technology, and massive data sets (the internet) has made deep learning one of the most exciting AI sub-fields recently.

- Thought-controlled gaming. The application of AI, wearable technology, and brain-computer interface technology to enable seamless interaction with social gaming environments in real-time, through avatars without the need for joystick-type devices.

- Real-time universal translation. The application of natural language processing to enable two humans (with no common language) to understand each other in real-time.

- Neuromorphic computing. Future generation computing hardware that mimics the function of the human brain in silicon chips.

- Machine Learning. Algorithms that can learn from and make predictions on data. Overlaps with computational and Bayesian statistics. Underpins predictive analytics and data-mining.

- Next gen cloud robotics. Convergence of AI, big data, cloud and the as-a-service model will enable a cloud-based robotic brain that robots can use for high powered intelligent and intuitive collaboration with humans.

- Robotic personal assistants. Cloud-based AI learns from big data to enable human-like social robots that can perform usefully as personal assistants.

- Real-time emotion analytics. The application of AI to analyse brain signals, voice and facial expression to detect human emotions.

- Autonomous systems. Autonomous robots, self-driving vehicles, drones, all enabled by AI.

- Natural language processing. Technologies that enable computer systems to interact seamlessly with human languages. Includes: Written language and speech recognition, sentiment analysis, translation, understanding meaning within text/speech, language generation.

- Cognitive cyber security. Cloud-based AI systems trained on historical cyber threat data, capable of mitigating real-time cyber threats.

- Autonomous surgical robotics. Cloud-based AI platforms can help robotic surgeons to perform precise surgeries by learning from large historical surgical data sets (like video).

- Pattern recognition. A branch of machine learning and deep learning which focuses on recognition of patterns in data.

- Virtual companions. Cloud-connected, virtual reality-based avatars powered by AI engines that can behave and interact just as a human would.

- Chatbots. A software robot that interacts with humans online, receiving and sending conversational text with the aim of emulating the way a human communicates. An example of natural language processing.

- Neural networks. Computing systems that organise the computing elements in a layered way that is loosely modelled on the human brain. Enables deep learning.
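The layered structure described in the "Deep learning" and "Neural networks" entries, where each layer takes the previous layer's output as its input, can be sketched in a few lines. This is a toy forward pass under stated assumptions: the function names, the sigmoid activation, and the hand-picked weights are illustrative only, and no training takes place here.

```python
import math

def dense_layer(inputs, weights, biases):
    """One fully connected layer followed by a sigmoid activation."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        # Weighted sum of the inputs plus the neuron's bias.
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(1.0 / (1.0 + math.exp(-total)))
    return outputs

def forward(inputs, layers):
    """Feed the inputs through each layer in turn."""
    activation = inputs
    for weights, biases in layers:
        # The output of one layer becomes the input of the next.
        activation = dense_layer(activation, weights, biases)
    return activation

# Hypothetical network: 2 inputs -> 3 hidden neurons -> 1 output.
layers = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),
    ([[0.7, -0.5, 0.2]], [0.05]),
]
prediction = forward([1.0, 2.0], layers)
print(prediction)
```

Adding more `(weights, biases)` pairs to `layers` makes the network deeper; real systems learn these numbers from data instead of hard-coding them.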

The Importance of Artificial Intelligence

Why is artificial intelligence important?[6]

- AI automates repetitive learning and discovery through data. But AI is different from hardware-driven, robotic automation. Instead of automating manual tasks, AI performs frequent, high-volume, computerized tasks reliably and without fatigue. For this type of automation, human inquiry is still essential to set up the system and ask the right questions.

- AI adds intelligence to existing products. In most cases, AI will not be sold as an individual application. Rather, products you already use will be improved with AI capabilities, much like Siri was added as a feature to a new generation of Apple products. Automation, conversational platforms, bots and smart machines can be combined with large amounts of data to improve many technologies at home and in the workplace, from security intelligence to investment analysis.

- AI adapts through progressive learning algorithms to let the data do the programming. AI finds structure and regularities in data so that the algorithm acquires a skill: The algorithm becomes a classifier or a predictor. So, just as the algorithm can teach itself how to play chess, it can teach itself what product to recommend next online. And the models adapt when given new data. Back propagation is an AI technique that allows the model to adjust, through training and added data, when the first answer is not quite right.

- AI analyzes more and deeper data using neural networks that have many hidden layers. Building a fraud detection system with five hidden layers was almost impossible a few years ago. All that has changed with incredible computer power and big data. You need lots of data to train deep learning models because they learn directly from the data. The more data you can feed them, the more accurate they become.

- AI achieves incredible accuracy through deep neural networks – which was previously impossible. For example, your interactions with Alexa, Google Search and Google Photos are all based on deep learning – and they keep getting more accurate the more we use them. In the medical field, AI techniques from deep learning, image classification and object recognition can now be used to find cancer on MRIs with the same accuracy as highly trained radiologists.

- AI gets the most out of data. When algorithms are self-learning, the data itself can become intellectual property. The answers are in the data; you just have to apply AI to get them out. Since the role of the data is now more important than ever before, it can create a competitive advantage. If you have the best data in a competitive industry, even if everyone is applying similar techniques, the best data will win.
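The idea that a model adjusts itself "when the first answer is not quite right" can be shown with a minimal sketch. It trains a single linear neuron by gradient descent, which is the one-layer special case of the chain-rule update that backpropagation applies across many layers. The function name, learning rate, epoch count, and data are assumptions chosen for illustration.

```python
def train_linear_neuron(samples, learning_rate=0.05, epochs=2000):
    """Fit prediction = w * x + b by stochastic gradient descent."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for x, target in samples:
            prediction = w * x + b
            error = prediction - target
            # Gradient of 0.5 * error**2 with respect to w is error * x,
            # and with respect to b is error (chain rule).
            w -= learning_rate * error * x
            b -= learning_rate * error
    return w, b

# Hypothetical training data generated from y = 2x + 1.
samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train_linear_neuron(samples)
print(round(w, 3), round(b, 3))
```

Each pass nudges the weight and bias in the direction that shrinks the error, so the parameters settle close to the slope and intercept that produced the data.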

Artificial Intelligence Applications

The Industrial Applications of Artificial Intelligences[7]

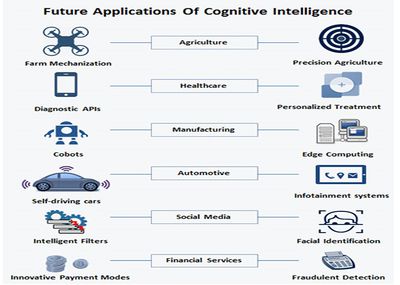

Although AI is a branch of computer science, today there’s no field which is left unaffected by this technology. The aim is to teach machines to think intelligently, just the way humans do. Until now, machines have been doing what they were told to do, but with AI they will be able to think and behave like a human being. The study focuses on observing the thinking and learning patterns of humans, and the outcome is then used to develop intelligent software and systems. Below is a glimpse of some industrial applications of AI.

source: Frost & Sullivan

- Automobiles: It’s a well-established fact that Google’s driverless cars and Tesla’s Autopilot features have already paved the way for the introduction of AI in automobiles. Whether it’s self-parking, collision detection, blind spot monitoring, voice recognition, or navigation, it’s almost like the car is acting as an assistant to the owner and teaching different ways of driving safely. Elon Musk, popularly known as the founder of Tesla Motors, tweeted that a day will soon come when people will be able to ‘Summon’ their car wherever they want, and it will reach them on its own using navigation and by tracking the location of the person. He is even working on introducing a fully automated transportation system that will use levitation for the commute.

- Banking and Finance: Owing to the increase in the amount of financial data, a lot of financial services have resorted to Artificial Intelligence. Robots are much quicker than their human counterparts at analyzing market data to forecast changes in stock trends and manage finances. They can even use algorithms to offer suggestions to clients on simple problems. Similarly, banks are using AI to keep track of their customer base, address customers' needs, and suggest different schemes to them. Often when there is a suspicious transaction on a user's account, the user immediately receives an email to confirm that it’s not an outsider who is carrying out that particular transaction.

- Healthcare: Artificial Intelligence is also performing a lot of important work for the healthcare industry. Doctors use it to assist with diagnosis and treatment procedures. This reduces the need for multiple machines and equipment, which in turn brings down the cost. The Sedasys System by Johnson & Johnson has been approved by the FDA to deliver anesthesia to patients automatically in standard procedures. Apart from this, IBM has introduced Watson, an artificial intelligence application. It has been designed to suggest different kinds of treatments based on the patient’s medical history and has proved to be very effective.

- Manufacturing: Manufacturing is one of the first industries to have used AI from the very beginning. Robotic parts are used in factories to assemble different parts and then pack them without needing any manual help. From raw materials to shipped final products, robotic parts play a prominent role in most facilities. However, artificial intelligence is going to bring further changes, so that more complicated goods, such as automobiles and electronic goods, will be manufactured and assembled with the help of machines.

Artificial Intelligence and Employment

The Impact of Artificial Intelligence on Jobs[8]

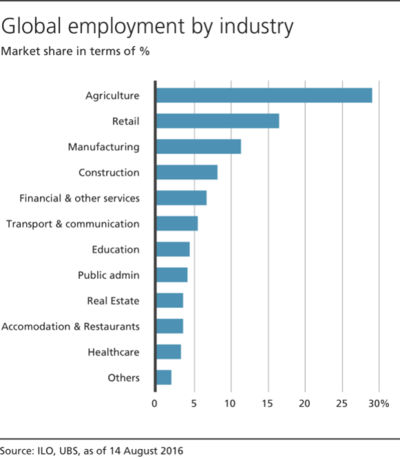

The increasing integration of AI will ultimately yield greater productivity in the near term, and productivity gains of this kind have historically led to cuts in employment.

The concerns are legitimate, but during this period AI will not be nearly developed enough for its widespread adoption to trigger mass layoffs. The technology will still be used in relatively niche applications and will not yet achieve a level of critical mass that would threaten employment on a global scale.

source: UBS

However, global employment will not escape unscathed. By automating tasks that rely on analyses, subtle judgments and problem solving, AI can be a threat to low-skill, predictable and routine jobs in industries like retail and financial services and indirectly through the broader automation of the auto industry and certain other manufacturing industries. While it is difficult to project the exact impact at this stage, assuming 5% of the jobs in these industries are routine in nature, we expect 50-75 million jobs globally, or 2% of the worldwide work force, will be potentially affected due to the advent of AI – a significant number, but one that pales in comparison to the opportunities AI will create.

AI's rise and ensuing surge in productivity will spur a plethora of opportunities for employees to upgrade their skills and focus on creative aspects. With the emergence of other disruptive business models like apps or sharing economies highly likely in a post-AI era, there is increased scope for jobs that require a high level of personalization, creativity or craftsmanship - tasks that will still need a person. These occupations are hard to imagine at this point, hence the job-related anxiety associated with AI's widespread integration; but they will quickly proliferate as new specializations are needed - comparable to the post-Industrial revolution bloom of factory workers.

The Future of Artificial Intelligence

Seven stages in the future evolution of Artificial Intelligence[9]

With hundreds of thousands of developers and data scientists across the planet now working on AI, the pace of development is accelerating, with increasingly eye-catching breakthroughs being announced on a daily basis. This has also led to a lot of confusion about what we can do with AI today and what might be possible at some point in the future.

To provide some clarity on the possible development path, seven distinct stages in the evolution of AI’s capabilities have been identified:

- Stage 1 – Rule Based Systems – These now surround us in everything from business software (RPA) and domestic appliances through to aircraft autopilots. They are the most common manifestations of AI in the world today.

- Stage 2 – Context Awareness and Retention – These algorithms build up a body of information about the specific domain they are being applied in. They are trained on the knowledge and experience of the best humans, and their knowledge base can be updated as new situations and queries arise. The most common manifestations include chatbots – often used in frontline customer enquiry handling – and the “roboadvisors” that are helping with everything from suggesting the right oil for your motorbike through to investment advice.

- Stage 3 – Domain Specific Expertise – These systems can develop expertise in a specific domain that extends beyond the capability of humans because of the sheer volume of information they can access to make each decision. Their use has been seen in applications such as cancer diagnosis. Perhaps the most commonly cited example is Google DeepMind’s AlphaGo. The system was given a set of learning rules and the objective of winning, and then it taught itself how to play Go with human support to nudge it back on course when it made poor decisions. Go reportedly has more moves than there are atoms in the universe – so you cannot teach it in the same way as you might with a chess playing program. In March 2016, AlphaGo defeated the 18-time world Go champion Lee Sedol by four games to one. The following year, AlphaGo Zero was created and given no guidance or human support. Equipped only with its learning rules, it played games against itself and developed its own strategies. After three days it took on AlphaGo and won by 100 games to nil. Such applications are an example of what is possible when machines can acquire human scale intelligence. However, at present they are limited to one domain: AlphaGo Zero would forget what it knows about playing Go if you started to teach it how to spot fraudulent transactions in an accounting audit.

- Stage 4 – Reasoning Machines – These algorithms have a “theory of mind” – some ability to attribute mental states to themselves and others e.g. they have a sense of beliefs, intentions, knowledge, and how their own logic works. Hence, they have the capacity to reason, negotiate, and interact with humans and other machines. Such algorithms are currently at the development stage, but we can expect to see them in commercial applications in the next few years.

- Stage 5 – Self Aware Systems / Artificial General Intelligence (AGI) – This is the goal of many working in the AI field: creating systems with human-like intelligence. No such applications are in evidence today; however, some say we could see them in as little as five years, while others believe we may never truly achieve this level of machine intelligence. There are many examples of AGI in the popular media, ranging from HAL, the ship computer in 2001: A Space Odyssey, to the “Synths” in the television series Humans. For decades now, writers and directors have tried to convey a world where machines can function at a similar level to humans.

- Stage 6 – Artificial SuperIntelligence (ASI) – This is the notion of developing AI algorithms that are capable of outperforming the smartest of humans in every domain. Clearly, it is hard to articulate what the capabilities might be of something that exceeds human intelligence, but we could imagine ASI solving current world problems such as hunger and dangerous climate change. Such systems might also invent new fields of science, redesign economic systems, and evolve wholly new models of governance. Again, expert views vary as to when and whether such a capability might ever be possible, but few think we will see it in the next decade. Films like Her and Ex Machina provide interesting depictions of the possibilities in a world where our technology might outsmart us.

- Stage 7 – Singularity and Transcendence – This is the notion that the exponential development path enabled by ASI could lead to a massive expansion in human capability. We might one day be sufficiently augmented and enhanced such that humans could connect our brains to each other and to a future successor of the current internet. This “hive mind” would allow us to share ideas, solve problems collectively, and even give others access to our dreams as observers or participants. Taking things a stage further, we might also transcend the limits of the human body and connect to other forms of intelligence on the planet – animals, plants, weather systems, and the natural environment. Some proponents of the singularity such as Ray Kurzweil, Google’s Director of Engineering, suggest that we could see the Singularity happen by 2045 as a result of exponential rates of progress across a range of science and technology disciplines. Others argue fervently that it is simply impossible and that we will never be able to capture and digitise human consciousness.
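To ground the earliest stage in the list, Stage 1 rule-based systems can be as simple as a handful of fixed conditions written by a person, with nothing learned from data. The thermostat below is a hypothetical sketch in the spirit of the domestic appliances mentioned under Stage 1; the function name, target temperature, and tolerance are illustrative assumptions.

```python
def thermostat_action(temperature_c, target_c=21.0, tolerance=0.5):
    """Choose an action from hand-written rules; nothing is learned."""
    if temperature_c < target_c - tolerance:
        return "heat"
    if temperature_c > target_c + tolerance:
        return "cool"
    return "idle"

for reading in (18.0, 21.2, 25.0):
    print(reading, thermostat_action(reading))
```

Every behaviour of such a system is fixed in advance by its rules, which is what separates it from the later stages that build up knowledge from data and experience.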

Artificial Intelligence - Risks

The potential harm from Artificial Intelligence[10]

Widespread use of artificial intelligence could have unintended consequences that are dangerous or undesirable. Scientists from the Future of Life Institute, among others, described some short-term research goals to see how AI influences the economy, the laws and ethics that are involved with AI and how to minimize AI security risks. In the long-term, the scientists have proposed to continue optimizing function while minimizing possible security risks that come along with new technologies.

- Existential Risk: Physicist Stephen Hawking, Microsoft founder Bill Gates, and SpaceX founder Elon Musk have expressed concerns about the possibility that AI could evolve to the point that humans could not control it. In Hawking's words: "The development of full artificial intelligence could spell the end of the human race. Once humans develop artificial intelligence, it will take off on its own and redesign itself at an ever-increasing rate. Humans, who are limited by slow biological evolution, couldn't compete and would be superseded." In his book Superintelligence, Nick Bostrom provides an argument that artificial intelligence will pose a threat to humankind. He argues that sufficiently intelligent AI, if it chooses actions based on achieving some goal, will exhibit convergent behavior such as acquiring resources or protecting itself from being shut down. If this AI's goals do not reflect humanity's (one example is an AI told to compute as many digits of pi as possible), it might harm humanity in order to acquire more resources or prevent itself from being shut down, ultimately to better achieve its goal. Concern over risk from artificial intelligence has led to some high-profile donations and investments. A group of prominent tech titans including Peter Thiel, Amazon Web Services and Musk have committed $1 billion to OpenAI, a nonprofit company aimed at championing responsible AI development. The opinion of experts within the field of artificial intelligence is mixed, with sizable fractions both concerned and unconcerned by risk from eventual superhumanly-capable AI. In January 2015, Elon Musk donated ten million dollars to the Future of Life Institute to fund research on understanding AI decision making. The goal of the institute is to "grow wisdom with which we manage" the growing power of technology.
Musk also funds companies developing artificial intelligence, such as Google DeepMind and Vicarious, to "just keep an eye on what's going on with artificial intelligence. I think there is potentially a dangerous outcome there." For this danger to be realized, the hypothetical AI would have to overpower or out-think all of humanity, which a minority of experts argue is a possibility far enough in the future to not be worth researching. Other counterarguments revolve around humans being either intrinsically or convergently valuable from the perspective of an artificial intelligence.

- Devaluation of humanity: Joseph Weizenbaum wrote that AI applications cannot, by definition, successfully simulate genuine human empathy and that the use of AI technology in fields such as customer service or psychotherapy was deeply misguided. Weizenbaum was also bothered that AI researchers (and some philosophers) were willing to view the human mind as nothing more than a computer program (a position now known as computationalism). To Weizenbaum, these points suggest that AI research devalues human life.

- Social justice: One concern is that AI programs may be programmed to be biased against certain groups, such as women and minorities, because most of the developers are wealthy Caucasian men. Support for artificial intelligence is higher among men (with 47% approving) than women (35% approving).

- Decrease in demand for human labor: The relationship between automation and employment is complicated. While automation eliminates old jobs, it also creates new jobs through micro-economic and macro-economic effects. Unlike previous waves of automation, many middle-class jobs may be eliminated by artificial intelligence; The Economist states that "the worry that AI could do to white-collar jobs what steam power did to blue-collar ones during the Industrial Revolution" is "worth taking seriously". Subjective estimates of the risk vary widely; for example, Michael Osborne and Carl Benedikt Frey estimate 47% of U.S. jobs are at "high risk" of potential automation, while an OECD report classifies only 9% of U.S. jobs as "high risk". Jobs at extreme risk range from paralegals to fast food cooks, while job demand is likely to increase for care-related professions ranging from personal healthcare to the clergy. Author Martin Ford and others go further and argue that a large number of jobs are routine, repetitive and (to an AI) predictable; Ford warns that these jobs may be automated in the next couple of decades, and that many of the new jobs may not be "accessible to people with average capability", even with retraining. Economists point out that in the past technology has tended to increase rather than reduce total employment, but acknowledge that "we're in uncharted territory" with AI.

- Autonomous weapons: Currently, 50+ countries are researching battlefield robots, including the United States, China, Russia, and the United Kingdom. Many people concerned about risk from superintelligent AI also want to limit the use of artificial soldiers and drones.

See Also

Artificial General Intelligence (AGI)

Artificial Neural Network (ANN)

Human Computer Interaction (HCI)

Human-Centered Design (HCD)

Machine-to-Machine (M2M)

Machine Learning

Predictive Analytics

Data Analytics

Data Analysis

Big Data

References

- [1] Definition: What does Artificial Intelligence (AI) Mean?

- [2] What is Artificial Intelligence, Trollhetta

- [3] The Historical Overview of Artificial Intelligence, Builtin

- [4] Why Use Artificial Intelligence Software?, G2Crowd

- [5] What does the AI technology landscape look like?, Callaghan Innovation

- [6] Why is artificial intelligence important?, SAS

- [7] The Industrial Applications of Artificial Intelligences, Engineers Garage

- [8] Technological unemployment is unfortunately a byproduct of progress, UBS

- [9] Seven stages in the future evolution of Artificial Intelligence, EM360

- [10] The potential harm from Artificial Intelligence, Wikipedia

Further Reading

- What is artificial intelligence? Brookings.edu

- 10 Powerful Examples Of Artificial Intelligence In Use Today Forbes

- Artificial Intelligence for the Real World HBR

- State of AI in the Enterprise, 2nd Edition Deloitte

- The promise and challenge of the age of artificial intelligence McKinsey

- ‘Artificial Intelligence’ Has Become Meaningless The Atlantic

- Should We Worry About Artificial Intelligence (AI)? Coding Dojo