Data Integration

Data integration is the combination of technical and business processes used to combine data from disparate sources into meaningful and valuable information. A complete data integration solution delivers trusted data from various sources.[1]

Data integration primarily supports the analytical processing of large data sets by aligning, combining and presenting each data set from organizational departments and external remote sources to fulfill integrator objectives. Data integration is generally implemented in data warehouses (DW) through specialized software that hosts large data repositories from internal and external resources. Data is extracted, amalgamated and presented as a unified form. For example, a user’s complete data set may include extracted and combined data from marketing, sales and operations, which is combined to form a complete report. An example of data integration in a smaller paradigm is spreadsheet integration in a Microsoft Word document.[2]

Data Integration creates a unified view that can help inform critical business processes in the future. In a commercial situation, the overall goal of data integration is to connect and combine business information that, when initially collected, may have been placed into separate silos managed by unique stakeholders. Generally, these silos are overseen by business units that have little regular interaction with each other, causing a lack of shared insight that can result in missed opportunities for the company as a whole. Data integration helps to unite them these siloes, empowering the company to take a holistic approach to serving each customer, asset or initiative.Data integration may take place in a physical or virtual data warehouse, utilizing software that hosts large repositories of data from internal and external resources. One approach is to use an extract, transform and load method (ETL) to extract the necessary data given the integration objective, amalgamate the relevant information and then present it as a unified set of content for analysis.[3]

Data integration tools have traditionally been delivered via a set of related markets, with vendors in each market offering a specific style of data integration tool. In recent years, most of the activity has been within the ETL tool market. Markets for replication tools, data federation (EII) and other submarkets each included vendors offering tools optimized for a particular style of data integration, and periphery markets (such as data quality tools, adapters and data modeling tools) also overlapped with the data integration tool space. The result of all this historical fragmentation in the markets is the equally fragmented and complex way in which data integration is accomplished in large enterprises — different teams using different tools, with little consistency, lots of overlap and redundancy, and no common management or leverage of metadata. Technology buyers have been forced to acquire a portfolio of tools from multiple vendors to amass the capabilities necessary to address the full range of their data integration requirements. This situation is now changing, with the separate and distinct data integration tool submarkets converging at the vendor and technology levels. This is being driven by buyer demands as organizations realize they need to think about data integration holistically and have a common set of data integration capabilities they can use across the enterprise. It is also being driven by the actions of vendors, such as those in individual data integration submarkets organically expanding their capabilities into neighboring areas, as well as by acquisition activity that brings vendors from multiple submarkets together. The result is a market for complete data integration tools that address a range of different data integration styles and are based on common design tooling, metadata and runtime architecture.[4]

Data Integration Historical Background[5]

Issues with combining heterogeneous data sources, often referred to as information silos, under a single query interface have existed for some time. In the early 1980s, computer scientists began designing systems for interoperability of heterogeneous databases. The first data integration system driven by structured metadata was designed at the University of Minnesota in 1991, for the Integrated Public Use Microdata Series (IPUMS). IPUMS used a data warehousing approach, which extracts, transforms, and loads data from heterogeneous sources into a single view schema so data from different sources become compatible. By making thousands of population databases interoperable, IPUMS demonstrated the feasibility of large-scale data integration. The data warehouse approach offers a tightly coupled architecture because the data are already physically reconciled in a single queryable repository, so it usually takes little time to resolve queries. The data warehouse approach is less feasible for datasets that are frequently updated, requiring the extract, transform, load (ETL) process to be continuously re-executed for synchronization. Difficulties also arise in constructing data warehouses when one has only a query interface to summary data sources and no access to the full data. This problem frequently emerges when integrating several commercial query services like travel or classified advertisement web applications. As of 2009 the trend in data integration favored loosening the coupling between data and providing a unified query-interface to access real time data over a mediated schema, which allows information to be retrieved directly from original databases. This is consistent with the SOA approach popular in that era. This approach relies on mappings between the mediated schema and the schema of original sources, and transform a query into specialized queries to match the schema of the original databases. Such mappings can be specified in 2 ways : as a mapping from entities in the mediated schema to entities in the original sources (the "Global As View" (GAV) approach), or as a mapping from entities in the original sources to the mediated schema (the "Local As View" (LAV) approach). The latter approach requires more sophisticated inferences to resolve a query on the mediated schema, but makes it easier to add new data sources to a (stable) mediated schema. As of 2010 some of the work in data integration research concerns the semantic integration problem. This problem addresses not the structuring of the architecture of the integration, but how to resolve semantic conflicts between heterogeneous data sources. For example, if two companies merge their databases, certain concepts and definitions in their respective schemas like "earnings" inevitably have different meanings. In one database it may mean profits in dollars (a floating-point number), while in the other it might represent the number of sales (an integer). A common strategy for the resolution of such problems involves the use of ontologies which explicitly define schema terms and thus help to resolve semantic conflicts. This approach represents ontology-based data integration. On the other hand, the problem of combining research results from different bioinformatics repositories requires bench-marking of the similarities, computed from different data sources, on a single criterion such as positive predictive value. This enables the data sources to be directly comparable and can be integrated even when the natures of experiments are distinct. As of 2011 it was determined that current data modeling methods were imparting data isolation into every data architecture in the form of islands of disparate data and information silos. This data isolation is an unintended artifact of the data modeling methodology that results in the development of disparate data models. Disparate data models, when instantiated as databases, form disparate databases. Enhanced data model methodologies have been developed to eliminate the data isolation artifact and to promote the development of integrated data models. One enhanced data modeling method recasts data models by augmenting them with structural metadata in the form of standardized data entities. As a result of recasting multiple data models, the set of recast data models will now share one or more commonality relationships that relate the structural metadata now common to these data models. Commonality relationships are a peer-to-peer type of entity relationships that relate the standardized data entities of multiple data models. Multiple data models that contain the same standard data entity may participate in the same commonality relationship. When integrated data models are instantiated as databases and are properly populated from a common set of master data, then these databases are integrated. Since 2011, data hub approaches have been of greater interest than fully structured (typically relational) Enterprise Data Warehouses. Since 2013, data lake approaches have risen to the level of Data Hubs. These approaches combine unstructured or varied data into one location, but do not necessarily require an (often complex) master relational schema to structure and define all data in the Hub.

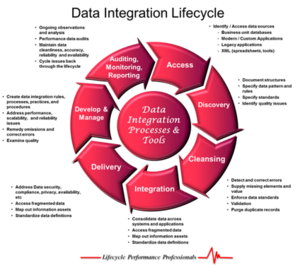

The Data Integration Lifecycle[6]

Data integration is the process of combining data residing at different sources and providing the user with a unified view of the data.

- Access – Data comes from many sources, including legacy application and systems, databases, modern applications, various XML messages and numerous types of documents (spreadsheets, project plans, text documents, etc). Identifying and accessing these sources is the first step to data integration.

- Discovery – This involves bringing all data sources out into the open, and documenting the uses and structures of poorly understood or described sources. This is also the point at which data semantics (patterns or rules that emerge from its structure and use) and quality issue should be noted and flagged for further action.

- Cleansing – Data is cleaned up for accuracy and integrity. Clean-up can involve detecting and correcting errors, supplying missing elements and value, enforcing data standards, validating data and purging duplicate entries.

- Integration – This step involves consolidating data across all systems and applications, accessing their fragmented data, creating an accurate and consistent view of their information assets, and leveraging those assets to drive business decisions and operations. This often means resolving inconsistent utilization and definition for identical terms across different contexts.

- Delivery – Correct, relevant data is made available in proper form, in a timely manner, to all users and applications that need such access. This might mean responding to queries that result in single records or small answer sets to delivering entire data sets for trend analysis or enterprise-wide reporting. This step also addresses needs for data security, availability, privacy and compliance requirements related to access and use.

- Development and Management – This is where XML-based toolsets enable those who manage data; business analysts, architects, developers and managers to work together in creating a comprehensive set of data integration rules, processes, practices and procedures, thereby capturing and implementing all the substantive work done in the five preceding steps. This step also tackles issues related to performance, scalability and reliability needs for key enterprise applications and services.

- Auditing, Monitoring and Reporting – Once its semantics and uses have been captured, omissions remedied, errors corrected, and quality examined and assured, ongoing observation and analysis is required to keep the data clean, correct, reliable and available. This part of the process makes it possible to flag potential issues as they occur and to cycle them back through this lifecycle to make sure they resolved. Auditing also helps to make sure that data remains visible, under control, and able to guide future changes and enhancements.

source: Victor Holam

Types of Data Integration[7]

There are methods of bringing data together into an integrated view and there are techniques for bringing data together physically, for an integration version. One can argue that both are a type of data integration, the difference being whether the data was physically moved and/or manipulated. Below are a few common data integration approaches.

- Data Consolidation: Data consolidation physically brings data together from several separate systems, creating a version of the consolidated data in one data store. Often the goal of data consolidation is to reduce the number of data storage locations. Extract, transform, and load (ETL) technology supports data consolidation.

ETL pulls data from sources, transforms it into an understandable format, and then transfers it to another database or data warehouse. The ETL process cleans, filters, and transforms data, and then applies business rules before data populates the new source.

- Data Propagation: Data propagation is the use of applications to copy data from one location to another. It is event-driven and can be done synchronously or asynchronously. Most synchronous data propagation supports a two-way data exchange between the source and the target. Enterprise application integration (EAI) and enterprise data replication (EDR) technologies support data propagation. EAI integrates application systems for the exchange of messages and transactions. It is often used for real-time business transaction processing. Integration platform as a service (iPaaS) is a modern approach to EAI integration. EDR typically transfers large amounts of data between databases, instead of applications. base triggers and logs are used to capture and disseminate data changes between the source and remote databases.

- Data Virtualization: Virtualization uses an interface to provide a near real-time, unified view of data from disparate sources with different data models. Data can be viewed in one location, but is not stored in that single location. Data virtualization retrieves and interprets data, but does not require uniform formatting or a single point of access.

- Data Federation: Federation is technically a form of data virtualization. It uses a virtual database and creates a common data model for heterogeneous data from different systems. Data is brought together and viewable from a single point of access. Enterprise information integration (EII) is a technology that supports data federation. It uses data abstraction to provide a unified view of data from different sources. That data can then be presented or analyzed in new ways through applications. Virtualization and federation are good workarounds for situations where data consolidation is cost prohibitive or would cause too many security and compliance issues.

- Data Warehousing: Warehousing is included in this list because it is a commonly used term. However, its meaning is more generic than the other methods previously mentioned. Data warehouses are storage repositories for data. However, when the term “data warehousing,” is used, it implies the cleansing, reformatting, and storage of data, which is basically data integration.

Data Integration Techniques[8]

There are several organizational levels on which the integration can be performed. As we go down the level of automated integration increases.

- Manual Integration or Common User Interface - users operate with all the relevant information accessing all the source systems or web page interface. No unified view of the data exists.

- Application Based Integration - requires the particular applications to implement all the integration efforts. This approach is manageable only in case of very limited number of applications.

- Middleware Data Integration - transfers the integration logic from particular applications to a new middleware layer. Although the integration logic is not implemented in the applications anymore, there is still a need for the applications to partially participate in the data integration.

- Uniform Data Access or Virtual Integration - leaves data in the source systems and defines a set of views to provide and access the unified view to the customer across whole enterprise. For example, when a user accesses the customer information, the particular details of the customer are transparently acquired from the respective system. The main benefits of the virtual integration are nearly zero latency of the data updates propagation from the source system to the consolidated view, no need for separate store for the consolidated data. However, the drawbacks include limited possibility of data's history and version management, limitation to apply the method only to 'similar’ data sources (e.g. same type of database) and the fact that the access to the user data generates extra load on the source systems which may not have been designed to accommodate.

- Common Data Storage or Physical Data Integration - usually means creating a new system which keeps a copy of the data from the source systems to store and manage it independently of the original system. The most well know example of this approach is called Data Warehouse (DW). The benefits comprise data version management, combining data from very different sources (mainframes, databases, flat files, etc.). The physical integration, however, requires a separate system to handle the vast volumes of data.

Data Integration Process[9]

People usually oversimplify data integration by assuming it involves only extract, transform and load (ETL) tools. Though critical, an ETL tool is just one piece of a complex puzzle. The data integration framework (DIF) encompasses two categories of processes. The first category is the process to determine your data requirements and solution. The second is the process used to physically gather the data from its sources and transform it into information that businesspeople can use to analyze and make decisions.The more attention paid to the processes, the more robust, maintainable and understandable the data is both to IT and businesspeople.

- Determining Data Requirements (At the end you will know what data to source and store)

- Gathering business requirements

- Determining data and quality needs

- Data profiling or understanding data sources and associated quality both in the source system and across multiple source systems, if applicable

- Performing a data quality assessment against the metrics the business has requested

- Defining the gap between what data is available and its quality versus what the business has requested

- Revising business expectations or project costs and determining the selected data solution

- Modeling the data stores necessary – staging areas, data warehouse, operational data store and data mart(s) – both from a logical perspective (to confirm business requirements) and a physical perspective (to enable implementation).

- Gathering Data

- Data preparation, the most complex of these processes, includes gathering, reformatting, consolidating, transforming, cleansing and storing data both in staging areas and the data warehouse. It’s best to incorporate the data quality or cleansing activities into the initial process workflow. Many people either assume that the quality of their data is fine or that data quality is the transactional system’s problem. The reality is that there are many inconsistencies between source systems that are not apparent to the people who use the individual systems. It is only when data is consolidated that quality comes into question. In addition, business analytics may require dimensions, measures, or metrics that are not critical in data creation (e.g., product category). In these cases, it is important to define the processes to handle these situations in the data preparation processes. The end result of this stage is a data warehouse where information is stored and managed for “downstream” use by businesspeople.

- Data franchising is the process of reconstructing data into information that is usable by businesspeople for reporting and analysis. The data from the data warehouse may be filtered, aggregated, summarized, staged and stored in a data mart where it will be accessed by the businesspeople using business intelligence (BI) tools. These processes may be referred to by the IT staff as denormalization. It simply means the data has been reconstructed to allow the BI tools to better handle and manipulate the data.

- Data and meta data management are processes behind the scenes that pass the data and its definitions (meta data) between the other processes. Data and meta data management are often considered by both the IT staff and vendors to be weak cousins to the data preparation and data franchising processes.

Solving Issues With Data integration[10]

Data integration seeks to solve many of the following issues that come about when you have disparate information stored in different applications across an organization.

- Data Silos: A data silo, much like the grain silo it is named after, is a repository of data that is isolated. Generally, in businesses, this means that the information is under the control of a business unit or department and is not available across the organization. This can also occur when an organization has stored information in software that is incompatible. For example, maybe you have some of your marketing data in Salesforce, other data in Marketo, and still more in a database maintained by your Marketing team. But since these systems don't communicate, the information in each application is siloed, so you might be able to draw conclusions from the Salesforce data and the Marketo data, but you won't be able to bring those information sources together to understand the information in totality.

- Slow Analysis: Business leaders agree that today's decision making is heavily dependent on good information. Yet, even though they rely upon good data, companies are often frustrated by the amount of time it takes to integrate it. If your data is spread across multiple teams, databases, and applications, it can take a long time to gather and process the data so you can analyze it. And if the process takes long enough, the data will be outdated by the time you have a chance to analyze it. Business decisions need to be made in real time, and the way to do that is to have a system in place to integrate data before you need it.

- Deep Dives: When your data is scattered across different sources and applications, it's difficult to have a complete view of it. For example, maybe you have customer data from different devices and apps. Maybe you have data on purchases from your different storefronts and online sites, but you want to correlate that data with your customer information and you want to enrich it with timestamps and geographical information for a deep analysis of your sales data. If your systems aren't integrated, or if the data isn't compatible, you won't be able to correlate this information without considerable time and effort.

Benefits of Data Integration[11]

Even if a company is receiving all the data it needs, that data will most often reside in a number of separate data sources. Information from all of those different sources often needs to be pulled together for analysis, and that can be no small task for a professional trying to create a report or make an informed management decision. Without unified data, a single report typically involves logging into multiple accounts, on multiple sites, accessing data within native apps, copying over the data, reformatting, and cleansing, all before analysis can happen. This is central to the importance of data integration, and it brings us to the major benefits of these efforts:

- Data Integration Improves Collaboration and Unification of Systems: Employees in every department — and sometimes in disparate physical locations — increasingly need access to the company's data for shared and individual projects. IT needs a secure solution for delivering data via self-service access across all lines of business. Additionally, employees in almost every department are generating and improving data that the rest of the business needs. Data integration needs to be collaborative and unified in order to improve collaboration and unification across the organization.

- Data Integration Saves Time: When a company takes measures to integrate its data, it cuts down significantly on the time it takes to analyze that data. The automation of unified views cuts out the need for manually gathering data, and employees no longer need to build from scratch whenever they need to run a report. Additionally, using the right tools, rather than hand-coding the integration, returns even more time (and resources overall) to the dev team. All the time saved on these tasks can be put to other, better uses, with more hours earmarked for analysis and execution to make an organization more productive and competitive.

- Data Integration Reduces Errors (and Rework): There’s a lot to keep up with when it comes to a company’s data resources. To manually gather data, employees must know every location and account that they might need to explore — and have all necessary software installed before they begin — to ensure their data sets will be complete and accurate. If a data repository is added, and that employee is unaware, they will have an incomplete data set. Additionally, without a data integration solution that synchronizes data, reporting must be periodically redone to account for any changes. With automated updates, however, reports can be run easily in real-time, whenever they’re needed.

- Data Integration Delivers More Valuable Data: Data integration efforts actually improve the value of a business’ data over time. As data is integrated into a centralized system, quality issues are identified and necessary improvements are implemented, which ultimately results in more accurate data — the foundation for quality analysis.

Data Integration Challenges[12]

Data Integration is the process of identifying ways to bring data from disparate sources and combining them to have a unified view. Well, that is a challenging task by itself. From a technical standpoint, the technical aspect of this implementation is the first level of challenge. It is never easy to understand data residing in disparate sources and then design a common structure to fit them all at one place. Diving a bit deeper, let’s categorize the challenges and make a list of things to be considered as a challenge for any Data Integration project.

- Design Challenges

- To Have A Good Understanding Of Data: It is very important to have a person (or a team of people) who understand the data assets of the enterprise and also the source systems. I would like to call them Data Champions. The data champions should be able to lead the discussions about the long-term data integration goals in order to make it consistent and successful.

- Understanding Of Objectives And Deliverables: The next big thing is to have a good understanding of business requirements. What is the business purpose behind the data integration initiative? Understanding of the objectives and deliverables for the project is critical to the next steps. What are the source systems? Do the source systems have data to support the business requirements? What are the gaps between data and the requirements? These questions should be answered adequately.

- Analysis Of The Source Systems And Extraction: Having a good understanding of the options of extracting data from the source systems is critical to the overall success. Things like frequency of extracts, extent of data extraction (full extract or incremental), quality of the data in the source systems affect the timeline and overall direction of the project. In addition, it’s important to know about the volume of data extract to be able to plan the integration approach along with a knowledge of source system’s backup schedules, any specific maintenance windows etc. that may impact the data integration process.

- Implementation Challenges

- Tool Selection: The tool selection is also very important to the overall success of any data integration project. A feasibility study should be performed to select the right toolset for the job.

Small enterprises or companies who are just starting their data warehousing initiative are faced with this challenge and sometimes, making that decision isn’t easy considering the number of options available today. The feasibility study helps map out which tools are best suited for the overall data integration objective for the organization. Sometimes, for organizations that have built their data warehouse and a lot of ETL processes using a tool which is no more able to scale or the tool is no more relevant. For example, organizations that have invested years of work in OWB are facing that challenge as Oracle decided to do away with OWB to promote a much better tool, ODI. In these cases, even matured organizations need to do a feasibility analysis to estimate what it takes to upgrade the existing Data Integration infrastructure to the new toolset.

Data Integration in Modern Business

Data integration isn’t a one-size-fits-all solution; the right formula can vary based on numerous business needs.

- Leverage Big Data: Data lakes can be highly complex and massive in volume. Companies like Facebook and Google, for instance, process a non-stop influx of data from billions of users. This level of information consumption is commonly referred to as big data. As more big data enterprises crop up, more data becomes available for businesses to leverage. That means the need for sophisticated data integration efforts becomes central to operations for many organizations.

- Create Data Warehouses: Data integration initiatives — particularly among large businesses — are often used to create data warehouses, which combine multiple data sources into a relational database. Data warehouses allow users to run queries, compile reports, generate analysis, and retrieve data in a consistent format.

- Simplify Business Intelligence (BI): By delivering a unified view of data from numerous sources, data integration simplifies the Business Intelligence (BI) processes of analysis. Organizations can easily view, and quickly comprehend, the available data sets in order to derive actionable information on the current state of the business. With data integration, analysts can compile more information for more accurate evaluation without being overwhelmed by high volumes. Unlike business analytics, BI doesn’t use predictive analysis to make future projections; instead, it focuses on describing the present and past to aid in strategic decision-making. This use of data integration is well-suited to data warehousing, where high-level overview information in an easily consumable format aligns nicely.

- ETL and Data Integration: Extract, Transform, Load, commonly known as ETL, is a process within data integration wherein data is taken from the source system and delivered into the warehouse. This is the ongoing process that data warehousing undertakes to transform multiple data sources into useful, consistent information for business intelligence and analytical efforts.

See Also

Application Integration

Enterprise Application Integration (EAI)

Enterprise Information Integration (EII)

Business Process Integration

Enterprise Integration

Data Mining

Data Management

Data Warehouse

Customer Data Management (CDM)

Big Data

Business Intelligence

Data Analysis

Data Analytics

References

- ↑ Defining Data Integration IBM

- ↑ Explaining Data Integration Techopedia

- ↑ What is Data Integration? Pitney Bowes

- ↑ Understanding Data Integration Gartner

- ↑ Historical Background of Data Integration Wikipedia

- ↑ The Data Integration Lifecycle Victor Holam

- ↑ 5 Types of Data Integration Globalscape

- ↑ Data Integration Techniques DataIntegrationInfo

- ↑ What are the Essential Steps in the Data Integration Process? Athena

- ↑ Solving Issues With Data integration DZone

- ↑ Benefits of Data Integration Talend

- ↑ Data Integration Challenges DI Ninjas

Further Reading

- Seven Styles of Data Integration Information Builders

- Structuring data integration models and data integration architecture Techtarget

- Data Integration: Using ETL, EAI, and EII Tools to Create an Integrated Enterprise (Report Excerpt) BI-Best Practices

- Managing Data in Motion: Data Integration Best Practice Techniques and Technologies April reeve

- Data Integration: A Theoretical Perspective Maurizio Lenzerini

- Data Integration — Problems, Approaches, and Perspectives Patrick Ziegler and Klaus R. Dittrich