Acceptance Testing

Acceptance testing is a type of testing performed by users, customers, or other authorized entities to determine whether an application meets their needs and supports their business processes. It is often regarded as the most important phase of testing because it decides whether the client approves the application. It may cover the functionality, usability, performance, and UI of the application. It is also known as user acceptance testing (UAT), operational acceptance testing (OAT), and end-user testing. It is one of the final stages of the software’s testing cycle and often occurs before a client or customer accepts the new application. Acceptance tests are black-box system tests. Users of the system perform tests that mirror real-world scenarios and verify whether the software meets all specifications.[1]

Acceptance Testing is a level of software testing where a system is tested for acceptability. The purpose of this test is to evaluate the system’s compliance with the business requirements and assess whether it is acceptable for delivery.

source: [https://www.istqb.org/ ISTQB]

The acceptance testing process, which is also known as end-user testing, operational acceptance testing, or field testing, acts as a form of quality control to identify problems and defects while they can still be corrected relatively painlessly. It is one of the final stages of the software’s testing cycle, often occurs before a client or customer accepts the new application, and encourages close collaboration between developers and customers. Acceptance tests are designed to replicate the anticipated real-life use of the product to verify that it is fully functional and complies with the specifications agreed upon between the customer and the manufacturer. Depending on the product, they may involve chemical tests, physical tests, or performance tests, which may be refined if needed. If the actual results match the expected results for each test case, the product passes and is considered adequate. The customer then either accepts or rejects it.[2]

Origins of Acceptance Testing[3]

- 1996: Automated tests identified as a practice of Extreme Programming (XP), without much emphasis on the distinction between unit and acceptance testing, and with no particular notation or tool recommended

- 2002: Ward Cunningham, one of the inventors of Extreme Programming, publishes Fit, a tool for acceptance testing based on a tabular, Excel-like notation

- 2003: Bob Martin combines Fit with Wikis (another invention of Cunningham's), creating FitNesse

- 2003-2006: Fit/FitNesse combo eclipses most other tools and becomes the mainstream model for Agile acceptance testing

Who Performs Acceptance Testing[4]

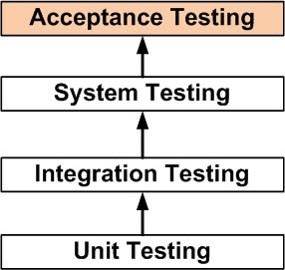

Acceptance Testing is the fourth and last level of software testing performed after System Testing and before making the system available for actual use.

- Internal Acceptance Testing (also known as Alpha Testing) is performed by members of the organization that developed the software who are not directly involved in the project (Development or Testing). Usually, these are members of Product Management, Sales, and/or Customer Support.

- External Acceptance Testing is performed by people who are not employees of the organization that developed the software.

- Customer Acceptance Testing is performed by the customers of the organization that developed the software. They are the ones who asked the organization to develop the software. (This is in the case of the software not being owned by the organization that developed it.)

- User Acceptance Testing (also known as Beta Testing) is performed by the end users of the software. They can be the customers themselves or the customers’ customers.

Key Points for Acceptance Testing[5]

- Test that the entire system works according to specifications and serves its purpose

Acceptance testing ensures that the supplied system meets its requirements and other contract details. It is important to check the relevant business processes in an end-to-end manner in order to ensure that the system fully meets the business needs.

- Independence from the supplier/developer

Objective, non-biased acceptance testing requires a testing party that is independent of the system supplier or developer.

- Consider the end user's needs

The system’s end users play a key role during test planning and while testing the business processes. However, experienced testing professionals are needed to discover issues that are overlooked by normal users.

Types of Acceptance Testing[6]

Acceptance testing is the practice of confirming that a product, service, system, process, practice, or document meets a set of requirements. As the term suggests, acceptance testing is the process of accepting a project release or change request for launch. The following are common types of acceptance testing.

- Non-Functional Testing: Non-Functional testing is a broad term for any test designed to confirm a non-functional requirement. This includes areas such as accessibility, quality, security, operations, and design that aren't considered functional requirements by a particular business unit.

- Operational Testing: Testing conducted by teams who are responsible for support, maintenance, and day-to-day operations of a process, service, or system. Operational testing confirms requirements such as performance, interoperability, backup, recovery, and maintenance procedures.

- Performance Testing: Performance testing confirms that user interfaces and automation remain responsive under anticipated workload, volumes, and stresses.

- Regression Testing: Regression tests confirm that changes didn't break existing functionality or non-functional qualities.

- Smoke Testing: A basic test that a build is successful. Typically performed by developers before announcing that a release is ready for testing. The term extends from initial product development tests that switch on an electronic device for the first time in the hopes that it doesn't begin smoking and catch on fire.

- Security Testing: The practice of testing information technology for security vulnerabilities using known threats and attack patterns.

- User Acceptance Testing: Testing of user interfaces by stakeholders or test analysts representing stakeholders.

Acceptance Testing Process[7]

source: Software Testing Help

- Business Requirements Analysis: Business requirements are analyzed by referring to all the available documents within the project, including:

  - System Requirement Specifications

  - Business Requirements Document

  - Use Cases

  - Workflow diagrams

  - Designed data matrix

- Design Acceptance Test Plan: The following items should be documented in the Acceptance Test Plan.

  - The acceptance testing strategy and approach.

  - Well-defined entry and exit criteria.

  - The scope of acceptance testing, clearly stated and covering only the business requirements.

  - The acceptance test design approach, detailed enough that anyone writing tests can easily understand how they are to be written.

  - The test bed setup and the actual testing schedule and timelines.

  - Details on logging bugs, because testing is conducted by different stakeholders who may not be aware of the procedure followed.

- Design and Review Acceptance Tests

Acceptance tests should be written at a scenario level, stating what has to be done without detailing how to do it. They should be written only for the areas identified as in scope for the business requirements, and every test has to be mapped to the requirement it references.

All the written acceptance tests have to be reviewed to achieve high coverage of the business requirements.

The review also makes sure that no tests outside the stated scope are included, so that testing stays within the scheduled timelines.
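The mapping and review described in this step can be checked mechanically. The following is a minimal sketch, not a prescribed tool: the requirement IDs (`BR-1` and so on), the test names, and the `review_coverage` helper are all hypothetical and chosen only for illustration. It reports requirements that have no acceptance test and tests that reference something outside the agreed scope.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AcceptanceTest:
    name: str          # scenario-level description of what is verified
    requirement: str   # ID of the business requirement the test maps to


def review_coverage(in_scope, tests):
    """Return (requirements without a test, tests outside the agreed scope)."""
    covered = {t.requirement for t in tests}
    uncovered = sorted(set(in_scope) - covered)
    out_of_scope = sorted(t.name for t in tests if t.requirement not in in_scope)
    return uncovered, out_of_scope


# Hypothetical scope and tests, for illustration only.
IN_SCOPE = {"BR-1", "BR-2", "BR-3"}
TESTS = [
    AcceptanceTest("Customer places an order", "BR-1"),
    AcceptanceTest("Customer cancels an order", "BR-2"),
    AcceptanceTest("Admin exports audit log", "BR-9"),
]

uncovered, out_of_scope = review_coverage(IN_SCOPE, TESTS)
print("Requirements without a test:", uncovered)
print("Tests outside scope:", out_of_scope)
```

In this made-up data set, `BR-3` has no test and the audit-log test points at a requirement that is not in scope, so both would be raised during the review.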

- Acceptance Test Bed Set up

The test bed should be set up to resemble the production environment. High-level checks are required to confirm the environment’s stability and usability. Share the credentials for the environment only with the stakeholders who are performing this testing.

- Acceptance Test Data Set Up

Production data has to be prepared and populated as test data in the systems. There should also be a document detailing how the data is to be used for testing.

Avoid test data such as TestName1 or TestCity1; use realistic values such as Albert or Mexico instead. This gives a richer, more realistic experience and keeps the testing to the point.

- Acceptance Test Execution

The designed acceptance tests are executed on the environment at this step. Ideally, all the tests pass on the first attempt. Acceptance testing should surface no functional bugs; if any do arise, they should be reported as high priority so they can be fixed.

Fixed bugs must likewise be verified and closed as a high-priority task. The test execution report should be shared daily.

Bugs logged in this phase should be discussed in a bug-triage meeting and undergo root cause analysis. This is the only point where acceptance testing assesses whether all the business requirements are actually met by the product.

- Business Decision

The outcome is a Go/No-Go decision on launching the product in production. A Go decision moves the product ahead to be released to the market. A No-Go decision marks the product as a failure. A few factors behind a No-Go decision:

- Poor Quality of the product.

- Too many open functional bugs.

- Deviation from business requirements.

- Not up to the market standards and needs enhancements to match the current market standards.
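As a rough illustration, some of these factors can be collected into a simple check that supports the decision. This is a sketch under stated assumptions: the `AcceptanceSummary` fields, the `go_no_go` function, and the zero-open-bugs threshold are hypothetical, and the source defines no numeric criteria. Judgments such as overall quality or market fit remain a business call and are modeled here only as a flag supplied by a person.

```python
from dataclasses import dataclass


@dataclass
class AcceptanceSummary:
    open_functional_bugs: int
    unmet_requirements: int        # deviations from business requirements
    meets_market_standards: bool   # a business judgment, not computed here


def go_no_go(summary, max_open_bugs=0):
    """Return ("Go" or "No-Go", reasons). The threshold is an assumed policy."""
    reasons = []
    if summary.open_functional_bugs > max_open_bugs:
        reasons.append("too many open functional bugs")
    if summary.unmet_requirements > 0:
        reasons.append("deviation from business requirements")
    if not summary.meets_market_standards:
        reasons.append("not up to market standards")
    return ("No-Go" if reasons else "Go"), reasons


# Hypothetical results: three open functional bugs, everything else fine.
decision, reasons = go_no_go(AcceptanceSummary(3, 0, True))
print(decision, reasons)
```

Returning the reasons alongside the decision keeps the outcome explainable to the stakeholders who have to sign off on it.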

Acceptance Testing in Extreme Programming[8]

Acceptance testing is a term used in agile software development methodologies, particularly extreme programming, referring to the functional testing of a user story by the software development team during the implementation phase.

The customer specifies scenarios to test when a user story has been correctly implemented. A story can have one or many acceptance tests, whatever it takes to ensure the functionality works. Acceptance tests are black-box system tests. Each acceptance test represents some expected result from the system. Customers are responsible for verifying the correctness of the acceptance tests and reviewing test scores to decide which failed tests are of highest priority. Acceptance tests are also used as regression tests prior to a production release. A user story is not considered complete until it has passed its acceptance tests. This means that new acceptance tests must be created for each iteration or the development team will report zero progress.
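A small sketch may make the relationship between a story and its acceptance tests concrete. Everything here is an assumption made for illustration: the `ShoppingCart` class stands in for a real system, the story wording is invented, and Python's standard `unittest` module is used only because it is widely available, not because Extreme Programming prescribes it. The tests exercise the system only through its public interface, in keeping with the black-box approach.

```python
import unittest


class ShoppingCart:
    """Hypothetical system under test; stands in for the real application."""

    def __init__(self):
        self._items = []

    def add(self, name, price, quantity=1):
        self._items.append((name, price, quantity))

    def total(self):
        return sum(price * quantity for _, price, quantity in self._items)


class CartStoryAcceptanceTest(unittest.TestCase):
    """Story (assumed): a shopper can add items and see the order total."""

    def test_total_reflects_added_items(self):
        cart = ShoppingCart()
        cart.add("notebook", 4, quantity=2)
        cart.add("pen", 1)
        self.assertEqual(cart.total(), 9)

    def test_empty_cart_total_is_zero(self):
        self.assertEqual(ShoppingCart().total(), 0)


def story_is_complete(test_case):
    """A story counts as complete only when all its acceptance tests pass."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(test_case)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()


print("Story complete:", story_is_complete(CartStoryAcceptanceTest))
```

Because the same test class can be rerun before each release, it also serves as a regression test in the sense described above.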

Benefits and Pitfalls of Acceptance Testing[9]

Expected Benefits

Acceptance testing has the following benefits, complementing those which can be obtained from unit tests:

- encouraging closer collaboration between developers on the one hand and customers, users or domain experts on the other, as they entail that business requirements should be expressed

- providing a clear and unambiguous "contract" between customers and developers; a product which passes acceptance tests will be considered adequate (though customers and developers might refine existing tests or suggest new ones as necessary)

- decreasing the chance and severity both of new defects and regressions (defects impairing functionality previously reviewed and declared acceptable)

Common Pitfalls

Expressing acceptance tests in an overly technical manner. Customers and domain experts, the primary audience for acceptance tests, find acceptance tests that contain implementation details difficult to review and understand. To prevent acceptance tests from being overly concerned with technical implementation, involve customers and/or domain experts in the creation and discussion of acceptance tests. Acceptance tests that are unduly focused on technical implementation also run the risk of failing due to minor or cosmetic changes which in reality do not have any impact on the product's behavior. For example, if an acceptance test references the label for a text field, and that label changes, the acceptance test fails even though the actual functioning of the product is not impacted.
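The label example can be sketched in a few lines. This is an illustration under assumptions, not a recommendation from the source: the form model, the `fill_by_label` and `fill_by_id` helpers, and the field names are all hypothetical. It shows a lookup tied to display text failing after a cosmetic rewording, while a lookup tied to a stable identifier keeps working.

```python
def render_signup_form(email_label="Email"):
    """Hypothetical UI model: each field has a stable id and a display label."""
    return [{"id": "email", "label": email_label, "value": ""}]


def fill_by_label(form, label, value):
    """Brittle lookup: breaks whenever the display label is reworded."""
    for field in form:
        if field["label"] == label:
            field["value"] = value
            return True
    return False


def fill_by_id(form, field_id, value):
    """Lookup by stable identifier: unaffected by cosmetic label changes."""
    for field in form:
        if field["id"] == field_id:
            field["value"] = value
            return True
    return False


# The label is reworded from "Email" to "Email address"; behavior is unchanged.
form = render_signup_form(email_label="Email address")
print(fill_by_label(form, "Email", "a@example.com"))  # False: lookup fails
print(fill_by_id(form, "email", "a@example.com"))     # True: lookup succeeds
```

Hiding such lookups behind helpers with business-level names is one possible way to keep the test text itself readable for customers and domain experts.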

References

- [1] Definition - What is Acceptance Testing? - The Economic Times

- [2] Explaining Acceptance Testing - Techopedia

- [3] History of Acceptance Testing - Agile Alliance

- [4] Who performs Acceptance Testing? - Software Fundamentals

- [5] Key Points for Acceptance Testing - Testimate

- [6] What are the Different Types of Acceptance Testing? - Simplicable

- [7] Acceptance Testing Process - Software Testing Help

- [8] Acceptance Testing in Extreme Programming - Wikipedia

- [9] What are the Benefits and Pitfalls of Acceptance Testing - Agile Alliance

Further Reading

- Acceptance Test Engineering Guide - Grigori Melnik, Gerard Meszaros

- Automated Acceptance Testing: A Literature Review and an Industrial Case Study - B. Haugset and G. K. Hanssen

- User Acceptance Testing - A step-by-step guide - Brian Hambling, Pauline van Goethem