ModelOps

ModelOps is a holistic approach for rapidly and iteratively moving models through the analytics life cycle so they are deployed faster and deliver expected business value. ModelOps is based on the application development community's DevOps approach. But where DevOps focuses on application development, ModelOps focuses on getting models from the lab through validation, testing and deployment phases as quickly as possible, while ensuring quality results. It also focuses on ongoing monitoring and retraining of models to ensure peak performance.[1]

According to Gartner, "ModelOps (or AI model operationalization) is focused primarily on the governance and life cycle management of a wide range of operationalized artificial intelligence (AI) and decision models, including machine learning, knowledge graphs, rules, optimization, linguistic and agent-based models. Core capabilities include continuous integration/continuous delivery (CI/CD) integration, model development environments, champion-challenger testing, model versioning, model store and rollback."[2]
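Several of the capabilities named in this definition (model versioning, a model store, rollback, and champion-challenger testing) can be illustrated with a minimal in-memory sketch. Everything in it is hypothetical and chosen only for illustration: the `ModelStore` class, the `champion_challenger` function, the accuracy metric, and the toy threshold models are assumptions, not the API of any ModelOps product.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Sequence, Tuple

# Hypothetical model type: maps a single numeric feature to a class label.
Model = Callable[[float], int]


@dataclass
class ModelStore:
    """Toy in-memory model store with versioning, promotion, and rollback."""
    versions: Dict[int, Model] = field(default_factory=dict)
    history: List[int] = field(default_factory=list)  # promoted versions, oldest first

    def register(self, model: Model) -> int:
        """Store a model under the next version number and return that number."""
        version = len(self.versions) + 1
        self.versions[version] = model
        return version

    def promote(self, version: int) -> None:
        """Make a stored version the champion (the model serving production)."""
        if version not in self.versions:
            raise KeyError(f"unknown model version {version}")
        self.history.append(version)

    @property
    def champion(self) -> Optional[int]:
        """Version number of the current champion, or None if nothing is promoted."""
        return self.history[-1] if self.history else None

    def rollback(self) -> int:
        """Drop the current champion and restore the previously promoted version."""
        if len(self.history) < 2:
            raise RuntimeError("no earlier champion to roll back to")
        self.history.pop()
        return self.history[-1]


def accuracy(model: Model, data: Sequence[Tuple[float, int]]) -> float:
    """Fraction of (feature, label) pairs the model classifies correctly."""
    return sum(model(x) == y for x, y in data) / len(data)


def champion_challenger(store: ModelStore, challenger: int,
                        holdout: Sequence[Tuple[float, int]]) -> bool:
    """Promote the challenger only if it beats the champion on held-out data."""
    champ = store.champion
    if champ is None or (accuracy(store.versions[challenger], holdout)
                         > accuracy(store.versions[champ], holdout)):
        store.promote(challenger)
        return True
    return False


# Usage: two toy threshold classifiers compete on a small holdout set.
holdout = [(0.2, 0), (0.4, 0), (0.6, 1), (0.9, 1)]
store = ModelStore()
v1 = store.register(lambda x: int(x > 0.8))
v2 = store.register(lambda x: int(x > 0.5))
champion_challenger(store, v1, holdout)  # no champion yet, so v1 is promoted
champion_challenger(store, v2, holdout)  # v2 scores higher and replaces v1
```

A production model store would also persist artifacts and metadata and record who promoted what and when; the sketch keeps only the promotion history, which is the minimum needed for rollback.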

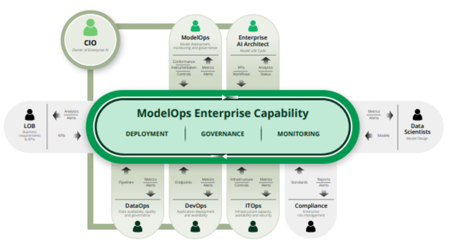

“ModelOps lies at the center of any organization’s enterprise AI strategy.” ModelOps is about creating a shared service that runs across the organization, enabling robust scaling, governance, integration, monitoring and management of various AI models. Adopting a ModelOps strategy should improve the performance, scalability and reliability of AI models. ModelOps aims to eliminate internal friction between teams by sharing accountability and responsibility, and it protects the organization’s interests, both internally and externally.

ModelOps (and its MLOps subset, which focuses on ML models only) is a key capability required for successful AI/ML model operations once models have been developed. It is a discipline distinct from model development. Industry experts and analysts increasingly recognize that model development and model operations are different disciplines, requiring different capabilities, tools and even teams. Gartner states in an article: “Platform independence: AI pipelines span multiple environments from developer notebooks to edge to data center to cloud deployments. A true ModelOps framework allows you to bring standardization and scalability across these disparate environments so that development, training and deployment processes can run consistently and in a platform-agnostic manner.”[3]

History of ModelOps[4]

In a 2018 Gartner survey, 37% of respondents reported that they had deployed AI in some form; however, Gartner pointed out that enterprises were still far from implementing AI, citing deployment challenges. Enterprises were accumulating undeployed, unused, and unrefreshed models, and models were being deployed manually, often at the business-unit level, increasing the risk exposure of the entire enterprise. Independent analyst firm Forrester also covered this topic in a 2018 report on machine learning and predictive analytics vendors: “Data scientists regularly complain that their models are only sometimes or never deployed. A big part of the problem is organizational chaos in understanding how to apply and design models into applications. But another big part of the problem is technology. Models aren’t like software code because they need model management.”

In December 2018, Waldemar Hummer and Vinod Muthusamy of IBM Research AI proposed ModelOps as “a programming model for reusable, platform-independent, and composable AI workflows” at IBM Programming Languages Day. In their presentation, they noted the difference between the application development lifecycle, represented by DevOps, and the AI application lifecycle.

The goal in developing ModelOps was to close the gap between model deployment and model governance, ensuring that all models ran in production under strong governance and in alignment with technical and business key performance indicators (KPIs), while managing risk. In their presentation, Hummer and Muthusamy described a programmatic solution for AI-aware staged deployment and reusable components that would enable model versions to match business apps, and which would include AI model concepts such as model monitoring, drift detection, and active learning. The solution would also address the tension between model performance and business KPIs, application and model logs, and model proxies and evolving policies. Various cloud platforms were part of the proposal.

In June 2019, Hummer, Muthusamy, Thomas Rausch, Parijat Dube, and Kaoutar El Maghraoui presented a paper at the 2019 IEEE International Conference on Cloud Engineering (IC2E). The paper expanded on their 2018 presentation, proposing ModelOps as a cloud-based framework and platform for end-to-end development and lifecycle management of artificial intelligence (AI) applications. In the abstract, they stated that the framework would show how the principles of software lifecycle management can be extended to enable automation, trust, reliability, traceability, quality control, and reproducibility of AI model pipelines.

In March 2020, ModelOp, Inc. published the first comprehensive guide to ModelOps methodology. The objective of this publication was to provide an overview of the capabilities of ModelOps, as well as the technical and organizational requirements for implementing ModelOps practices.

In October 2020, ModelOp launched ModelOp.io, an online hub for ModelOps and MLOps resources. Alongside the launch of this website, ModelOp released a Request for Proposal (RFP) template. Based on interviews with industry experts and analysts, the template was designed to address the functional requirements of ModelOps and MLOps solutions.

Increasing ROI With ModelOps[5]

ModelOps removes a key friction point in the analytics life cycle, which helps ensure that analytic investments deliver business value faster. A ModelOps approach gets analytics out of the lab and into use, enabling organizations to conquer the last mile of analytics.

- Strong Governance: Preserve data lineage and track-back information for governance and audit compliance.

- Faster Deployment: Deploy models in minutes, not months, with close collaboration between data scientists and IT.

- Continuous Monitoring: Deploy models with a monitoring mindset so analysts can monitor and retrain models as they degrade.
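The continuous-monitoring point above can be made concrete with a small drift check. The sketch below compares a feature's distribution at training time with its distribution in production using the population stability index (PSI), one common drift statistic; the source does not prescribe a particular method. The function names, the bin edges, and the 0.25 retraining threshold (a frequently quoted rule of thumb) are all assumptions made for illustration.

```python
import math
from typing import List, Sequence


def bin_fractions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Fraction of values in each bin delimited by the sorted interior edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        idx = sum(v > e for e in edges)  # number of edges below v is the bin index
        counts[idx] += 1
    eps = 1e-6  # floor so that an empty bin does not lead to log(0)
    return [max(c / len(values), eps) for c in counts]


def psi(expected: Sequence[float], actual: Sequence[float],
        edges: Sequence[float]) -> float:
    """Population stability index between a baseline and a production sample."""
    e = bin_fractions(expected, edges)
    a = bin_fractions(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def needs_retraining(expected: Sequence[float], actual: Sequence[float],
                     edges: Sequence[float], threshold: float = 0.25) -> bool:
    """Flag the model for retraining when drift exceeds the assumed threshold."""
    return psi(expected, actual, edges) > threshold


# Usage: the production sample has shifted toward high values.
baseline = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]
production = [0.5, 0.6, 0.7, 0.72, 0.75, 0.8, 0.85, 0.9, 0.95]
edges = [0.33, 0.66]
if needs_retraining(baseline, production, edges):
    print("drift detected: schedule retraining")
```

In practice such a check would run on a schedule against logged production inputs and feed an alerting or retraining pipeline; the threshold should be tuned per model rather than taken from this sketch.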

Many Large Enterprises Struggle to Scale AI[6]

- Number of Models: Each business will need to manage hundreds of models to account for business process variations, personalization, and unique customer segments.

- Technology Complexity: The rapid and ongoing innovation in the data & analytics space leads to complexity unmanageable for even the most expert IT teams.

- Regulatory Compliance: Adhering to strict and ever-increasing model regulatory requirements becomes more difficult as the use of AI expands across industries.

- Organizational Silos: Ineffective collaboration between teams that need to work together can make scaling difficult or impossible.

See Also

References

Further Reading

- "ModelOps: Why Businesses Need to Ensure Better Model Performance?", Vivek Kumar, Analytics Insight

- "ModelOps Is The Key To Enterprise AI", Jun Wu, Forbes

- "ModelOps: MLOps’ next frontier", Kirsten Lloyd, VentureBeat