Software Architecture

Software Architecture Definition

The software architecture of a program or computing system is the structure or structures of the system, which comprise software elements, the externally visible properties of those elements, and the relationships among them. Architecture is concerned with the public side of interfaces; private details of elements—details having to do solely with internal implementation—are not architectural.[1]

The term and concept of software architecture grew out of the research work of Edsger Dijkstra in 1968 and David Parnas in the 1970s. Building a complicated, critical system requires both the interconnected basic building components and the views of the end user, designer, developer, and tester. The design and implementation of the high-level structure of the software form the backbone of software architecture.

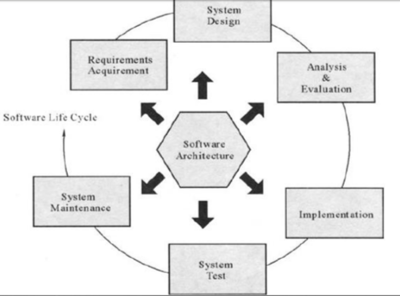

Architecture Centered Life Cycle

Figure 1. source: University of Colorado

Software application architecture is the process of defining a structured solution that meets all of the technical and operational requirements while optimizing common quality attributes such as performance, security, and manageability. It involves a series of decisions based on a wide range of factors, and each of these decisions can have a considerable impact on the quality, performance, maintainability, and overall success of the application. Philippe Kruchten, Grady Booch, Kurt Bittner, and Rich Reitman derived and refined a definition of architecture based on work by Mary Shaw and David Garlan (Shaw and Garlan 1996). Their definition is:

“Software architecture encompasses the set of significant decisions about the organization of a software system, including the selection of the structural elements and their interfaces by which the system is composed; behavior as specified in collaboration among those elements; composition of these structural and behavioral elements into larger subsystems; and an architectural style that guides this organization. Software architecture also involves functionality, usability, resilience, performance, reuse, comprehensibility, economic and technology constraints, tradeoffs, and aesthetic concerns.”[2]

Software architecture is a structured framework for conceptualizing software elements, relationships, and properties. The term also refers to software architecture documentation, which facilitates stakeholder communication while recording early, high-level decisions about design, design components, and pattern reuse across projects. The software architecture process works through the abstraction and separation of these concerns to reduce complexity. An Architecture Description Language (ADL) is used to describe a software architecture, and various organizations have developed different ADLs. Common ADL elements are components, connectors, and configurations.[3]
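The three common ADL elements can be illustrated with a minimal Python sketch. This is not a real ADL: the class names `Component`, `Connector`, and `Configuration`, the `dependencies_of` helper, and the example system are all hypothetical, chosen only to show how a configuration ties components together through connectors.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Component:
    """A unit of computation or storage, identified by name (hypothetical model)."""
    name: str


@dataclass(frozen=True)
class Connector:
    """An interaction path between two components."""
    source: Component
    target: Component
    protocol: str


@dataclass
class Configuration:
    """The topology: which components exist and how they are connected."""
    components: set = field(default_factory=set)
    connectors: list = field(default_factory=list)

    def connect(self, source, target, protocol):
        # Adding a connector also registers both endpoints as components.
        self.components.update({source, target})
        self.connectors.append(Connector(source, target, protocol))

    def dependencies_of(self, component):
        """Names of the components that `component` talks to directly."""
        return sorted(c.target.name for c in self.connectors if c.source == component)


# Illustrative system; the names are assumptions, not taken from the article.
web = Component("web_ui")
orders = Component("order_service")
db = Component("database")

system = Configuration()
system.connect(web, orders, "HTTP")
system.connect(orders, db, "SQL")

print(system.dependencies_of(web))     # ['order_service']
print(system.dependencies_of(orders))  # ['database']
```

Keeping connectors as first-class objects, rather than hiding them inside components, is what lets the configuration be inspected independently of any component's internals.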

The software architecture of a program or computing system is a depiction of the system that aids in the understanding of how the system will behave. It serves as the blueprint for both the system and the project development, defining the work assignments that must be carried out by design and implementation teams. Architecture is the primary carrier of system qualities such as performance, modifiability, and security, none of which can be achieved without a unifying architectural vision. Architecture is an artifact for early analysis to ensure that a design approach will yield an acceptable system. By building effective architecture, you can identify design risks and mitigate them early in the development process.[4]

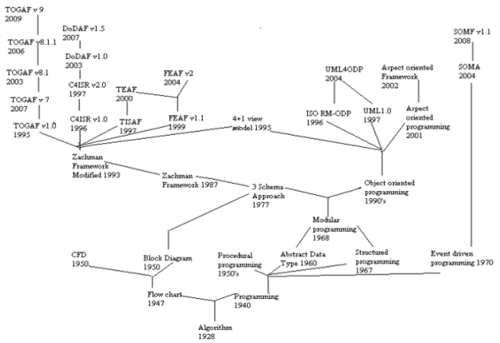

History and Evolution of Software Architecture (See Figure 2.)[5]

The basic principles of software architecture have been applied since the mid-1980s, but the discipline took shape over several earlier stages, beginning in the era of algorithms and borrowing concepts from other fields along the way. In 1928, the algorithm was formulated as a way to solve a problem through a finite sequence of instructions. In 1947, von Neumann developed the ‘Flow Chart’, a visual representation of instruction flow used to plan computer programs, inheriting the idea from the flow process chart (1921) and the multi-flow chart (1944), which were used mostly in electrical engineering. The flow chart left a gap, however, in showing the flow of control, so the ‘Control Flow Diagram’ (CFD) was developed in the late 1950s to describe the control flow of a business process or program. This was still not enough to view complex systems, because a CFD cannot represent a high-level view of the work or give immediate access to particular points. To reveal an entire system by dividing it into blocks, the ‘Block Diagram’ was also developed in the late 1950s. It shows a specific function for each block and the connections between blocks.

The introduction of abstraction gave the field a major boost and led to rapid growth. In the late 1960s, abstract data types made it possible to group data structures that have similar behavior, as well as data types and modules of one or more programming languages that have similar semantics. This in turn led to ‘Modular Programming’, which in 1968 introduced the idea of dividing software into separate parts called modules. Modules separate concerns by drawing logical boundaries between components. In 1977, the ‘Three Schema Approach’, which adopts a layered architecture based on modular programming, was developed. It is used to build information systems using three different views in systems development. An application is broken into tiers, so developers can modify a specific layer instead of rewriting the entire application. Flexible and reusable applications can be developed using this scheme.

Later, building on this three-tier approach, John Zachman introduced a layer of six perspectives in 1987. Known as ‘The Zachman Framework,’ it still plays an important role in the era of ‘Enterprise Architecture’ and influenced the DODAF, TOGAF, TEAF, and FEAF frameworks. In 1993, Zachman released a modified version of the framework with a larger number of views. In 1995, Kruchten developed the 4+1 view model. The U.S. government encouraged researchers to develop frameworks for defense applications, leading to the C4ISR Architecture Framework in 1996. The restructured C4ISR framework ver2.0 was released as ‘The Department of Defense Architecture Framework (DODAF)’ in 2003. ‘The Open Group Architecture Framework (TOGAF)’ was developed by the members of open architecture forums in 1995, and TOGAF Version 9 was released in 2009. To integrate its myriad agencies and functions under a single common enterprise architecture, the Federal Government developed the ‘Federal Enterprise Architecture Framework (FEAF)’ in 1999. The ‘Treasury Enterprise Architecture Framework (TEAF)’, developed to support the business processes of the US Department of the Treasury, was published in July 2000.

The reference model RM-ODP was developed by Andrew Herbert in 1984. It applies the concepts of abstraction, composition, and emergence to distributed processing developments. UML4ODP, which adds a set of UML profiles to ODP, was released in 2004. In 2001, aspect-oriented programming rose to prominence, inheriting the principles of object-oriented programming (OOP), and it led to aspect-oriented software development in late 2002. In 2004, IBM announced ‘Service Oriented Modeling Architecture (SOMA)’, in contrast to distributed processing and modular programming. It was the first publicly announced SOA-related methodology. In addition, Michael Bell released SOMF ver 1.1 to provide tactical and strategic solutions to enterprise problems.

Evolution of Software Architecture

Figure 2. source: IJACSA

Scope of Software Architecture[6]

Opinions vary as to the scope of software architectures:

- Overall, macroscopic system structure; refers to architecture as a higher-level abstraction of a software system that consists of a collection of computational components together with connectors that describe the interaction between these components.

- The important stuff—whatever that is; this refers to the fact that software architects should concern themselves with those decisions that have a high impact on the system and its stakeholders.

- That which is fundamental to understanding a system in its environment.

- Things people perceive as hard to change; since designing the architecture takes place at the beginning of a software system's lifecycle, the architect should focus on decisions that "have to" be right the first time. Following this line of thought, architectural design issues may become non-architectural once their irreversibility can be overcome.

- A set of architectural design decisions; software architecture should not be considered merely a set of models or structures but should include the decisions that lead to these particular structures and the rationale behind them. This insight has led to substantial research into software architecture knowledge management.

- There is no sharp distinction between software architecture on the one hand and design and requirements engineering on the other. They are all part of a "chain of intentionality" from high-level intentions to low-level details.

Software Architecture Patterns[7]

Mark Richards is a Boston-based software architect who’s been thinking for more than 30 years about how data should flow through software. His book, Software Architecture Patterns, focuses on five architectures that are commonly used to organize software systems. The best way to plan new programs is to study them and understand their strengths and weaknesses.

- Layered (n-tier) Architecture: This approach is probably the most common because it is usually built around the database, and many applications in business naturally lend themselves to storing information in tables. Many of the biggest and best software frameworks—like Java EE, Drupal, and Express—were built with this structure in mind, so many of the applications built with them naturally come out in a layered architecture. The code is arranged so the data enters the top layer and works its way down each layer until it reaches the bottom, which is usually a database. Along the way, each layer has a specific task, like checking the data for consistency or reformatting the values to keep them consistent. It’s common for different programmers to work independently on different layers.

- Event-Driven Architecture: Many programs spend most of their time waiting for something to happen. This is especially true for computers that work directly with humans, but it’s also common in areas like networks. Sometimes there’s data that needs processing, and other times there isn’t. The event-driven architecture helps manage this by building a central unit that accepts all data and then delegates it to the separate modules that handle the particular type. This handoff is said to generate an “event,” and it is delegated to the code assigned to that type. Programming a web page with JavaScript involves writing small modules that react to events like mouse clicks or keystrokes. The browser itself orchestrates all of the input and makes sure that only the right code sees the right events. Many different types of events are common in the browser, but the modules interact only with the events that concern them. This is very different from the layered architecture, where all data will typically pass through all layers.

- Microkernel Architecture: Many applications have a core set of operations that are used again and again in different patterns that depend upon the data and the task at hand. The popular development tool Eclipse, for instance, will open files, annotate them, edit them, and start up background processors. The tool is famous for doing all of these jobs with Java code and then, when a button is pushed, compiling the code and running it. In this case, the basic routines for displaying a file and editing it are part of the microkernel. The Java compiler is just an extra part that’s bolted on to support the basic features in the microkernel. Other programmers have extended Eclipse to develop code for other languages with other compilers. Many don’t even use the Java compiler, but all use the same basic routines for editing and annotating files. The extra features that are layered on top are often called plug-ins. Many call this extensible approach a plug-in architecture instead. Richards likes to explain this with an example from the insurance business: “Claims processing is necessarily complex, but the actual steps are not. What makes it complex are all of the rules.” The solution is to push some basic tasks, such as asking for a name or checking on payment, into the microkernel. The different business units can then write plug-ins for the different types of claims by knitting together the rules with calls to the basic functions in the kernel.

- Microservices Architecture: Software can be like a baby elephant: It is cute and fun when it’s little, but once it gets big, it is difficult to steer and resistant to change. The microservice architecture is designed to help developers avoid letting their babies grow up to be unwieldy, monolithic, and inflexible. Instead of building one big program, the goal is to create a number of different tiny programs and then create a new little program every time someone wants to add a new feature. Think of a herd of guinea pigs. “If you go onto your iPad and look at Netflix’s UI, every single thing on that interface comes from a separate service,” says Richards. The list of your favorites, the ratings you give to individual films, and the accounting information are all delivered in separate batches by separate services. It’s as if Netflix is a constellation of dozens of smaller websites that just happens to present itself as one service. This approach is similar to the event-driven and microkernel approaches, but it’s used mainly when the different tasks are easily separated. In many cases, different tasks can require different amounts of processing and may vary in use. The servers delivering Netflix’s content get pushed harder on Friday and Saturday nights, so they must be ready to scale up. The servers that track DVD returns, on the other hand, do the bulk of their work during the week, just after the post office delivers the day’s mail. By implementing these as separate services, the Netflix cloud can scale them up and down independently as demand changes.

- Space-Based Architecture: Many websites are built around a database, and they function well as long as the database is able to keep up with the load. But when usage peaks and the database can’t keep up with the constant challenge of writing a log of the transactions, the entire website fails. The space-based architecture is designed to avoid functional collapse under high load by splitting up the processing and storage between multiple servers. The data is spread out across the nodes, just like the responsibility for servicing calls. Some architects use the more amorphous term “cloud architecture.” The name “space-based” refers to the “tuple space” of the users, which is cut up to partition the work between the nodes. “It’s all in-memory objects,” says Richards. “The space-based architecture supports things that have unpredictable spikes by eliminating the database.” Storing the information in RAM makes many jobs much faster, and spreading out the storage with processing can simplify many basic tasks. But the distributed architecture can make some types of analysis more complex. Computations that must be spread out across the entire data set—like finding an average or doing a statistical analysis—must be split up into sub-jobs, spread out across all of the nodes, and then aggregated when it’s done.
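The layered pattern described above can be sketched in a few lines of Python. The three layer classes, the `submit`/`register`/`save` method names, and the in-memory dict standing in for a database are all assumptions made for illustration; real frameworks structure their layers differently.

```python
class PersistenceLayer:
    """Bottom layer: an in-memory dict stands in for the database (assumption)."""

    def __init__(self):
        self.rows = {}

    def save(self, customer):
        self.rows[customer["email"]] = customer
        return customer


class BusinessLayer:
    """Middle layer: checks the data for consistency before passing it down."""

    def __init__(self, persistence):
        self._persistence = persistence

    def register(self, customer):
        if "@" not in customer["email"]:
            raise ValueError("invalid email")
        return self._persistence.save(customer)


class PresentationLayer:
    """Top layer: reformats raw input, then hands it to the layer below."""

    def __init__(self, business):
        self._business = business

    def submit(self, name, email):
        customer = {"name": name.strip(), "email": email.strip().lower()}
        return self._business.register(customer)


db = PersistenceLayer()
app = PresentationLayer(BusinessLayer(db))
app.submit("  Ada ", "Ada@Example.com ")
print(db.rows)  # {'ada@example.com': {'name': 'Ada', 'email': 'ada@example.com'}}
```

Each layer only knows about the layer directly beneath it, which is what allows different programmers to work on different layers independently.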
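For the event-driven pattern, the central unit that delegates each event to the code registered for its type might look like the following minimal sketch. `EventDispatcher`, `subscribe`, and `dispatch` are hypothetical names, and the event types simply mirror the browser example in the text; this is not how any particular browser or framework implements event handling.

```python
from collections import defaultdict


class EventDispatcher:
    """Central unit that routes each event to the handlers for its type."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, event_type, handler):
        self._handlers[event_type].append(handler)

    def dispatch(self, event_type, payload):
        # Only handlers registered for this event type see the event.
        return [handler(payload) for handler in self._handlers.get(event_type, [])]


dispatcher = EventDispatcher()
dispatcher.subscribe("click", lambda p: f"clicked at {p['x']},{p['y']}")
dispatcher.subscribe("keypress", lambda p: f"key {p['key']}")

print(dispatcher.dispatch("click", {"x": 10, "y": 20}))  # ['clicked at 10,20']
print(dispatcher.dispatch("scroll", {"dy": 3}))          # []
```

Unlike the layered sketch, an event here reaches only the modules that subscribed to it; a `scroll` event with no subscribers is simply dropped.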
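The insurance example given for the microkernel pattern can be sketched as a small core with a plug-in registry. Everything here is hypothetical: the `ClaimsKernel` class, the `policy_is_paid` core task, the `auto_claim_rules` plug-in, and the 5000 threshold are invented for illustration and do not come from Richards's book.

```python
class ClaimsKernel:
    """Core system: shared basic tasks plus a registry of plug-ins."""

    def __init__(self):
        self._plugins = {}

    def register(self, claim_type, plugin):
        self._plugins[claim_type] = plugin

    def policy_is_paid(self, claim):
        # Basic task that lives in the core and is shared by every plug-in.
        return claim.get("premium_paid", False)

    def process(self, claim):
        plugin = self._plugins.get(claim["type"])
        if plugin is None:
            return "rejected: unsupported claim type"
        return plugin(self, claim)


def auto_claim_rules(kernel, claim):
    # Plug-in: rules for one claim type, built from calls into the kernel.
    if not kernel.policy_is_paid(claim):
        return "rejected: premium unpaid"
    # The 5000 limit is an arbitrary illustrative rule.
    return "approved" if claim["amount"] <= 5000 else "needs review"


kernel = ClaimsKernel()
kernel.register("auto", auto_claim_rules)

print(kernel.process({"type": "auto", "amount": 1200, "premium_paid": True}))  # approved
print(kernel.process({"type": "home", "amount": 100}))  # rejected: unsupported claim type
```

A new claim type is supported by registering another plug-in function; the kernel itself does not change.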
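The space-based bullet notes that a computation such as an average must be split into sub-jobs across the nodes and then aggregated. A minimal single-process sketch of that idea follows; the round-robin `partition` function is a stand-in for whatever partitioning a real data grid uses, and all function names are assumptions. Each node returns a partial sum and count, because averaging the per-node averages would give the wrong answer when nodes hold different numbers of records.

```python
def partition(records, node_count):
    """Spread records across nodes round-robin (a stand-in for real partitioning)."""
    nodes = [[] for _ in range(node_count)]
    for i, record in enumerate(records):
        nodes[i % node_count].append(record)
    return nodes


def local_summary(node_data):
    """Sub-job run on one node: a partial sum and count, not a local average."""
    return sum(node_data), len(node_data)


def global_average(nodes):
    """Aggregate the partial results from every node into one average."""
    summaries = [local_summary(data) for data in nodes]
    total = sum(s for s, _ in summaries)
    count = sum(c for _, c in summaries)
    return total / count if count else None


nodes = partition([10, 20, 30, 40, 60], node_count=2)
print(nodes)                  # [[10, 30, 60], [20, 40]]
print(global_average(nodes))  # 32.0
```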

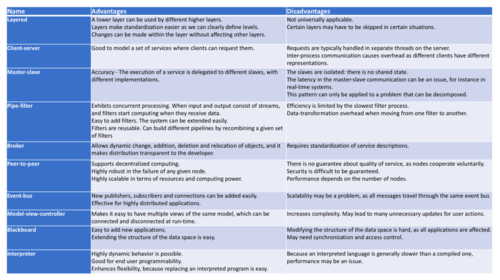

Comparison of Other Common Architectural Patterns (See Figure 3)

The table given below summarizes the pros and cons of each architectural pattern.

Figure 3. source: Towards Data Science

Software Architecture Vs. Software Design[8]

Software architecture exposes the structure of a system while hiding the implementation details. Architecture also focuses on how the elements and components within a system interact with one another. Software design delves deeper into the implementation details of the system. Design concerns include the selection of data structures and algorithms or the implementation details of individual components. Architecture and design concerns often overlap. Rather than use hard and fast rules to distinguish between architecture and design, it makes sense to combine them. In some cases, decisions are clearly more architectural in nature. In other cases, decisions focus heavily on design and how it helps to realize that architecture. An important detail is that architecture is design, but not all design is architectural. In practice, the architect is the one who draws the line between software architecture (architectural design) and detailed design (non-architectural design). There are no rules or guidelines that fit all cases — although there have been attempts to formalize the distinction. Current trends in software architecture assume that the design evolves over time and that a software architect cannot know everything up front to fully architect a system. The design generally evolves during the implementation stages of the system. The software architect continuously learns and tests the design against real-world requirements.

Impediments to Achieving Architectural Success[9]

- Lack of adequate architectural talent and/or experience

- Insufficient time spent on architectural design and analysis

- Failure to identify the quality drivers and design for them

- Failure to properly document and communicate the architecture

- Failure to evaluate the architecture beyond the mandatory government review

- Failure to understand that standards are not a substitute for a software architecture

- Failure to ensure that the architecture directs the implementation

- Failure to evolve the architecture and maintain documentation that is current

- Failure to understand that a software architecture does not come free with COTS or with the C4ISR Framework

See Also

- Software Architecture Analysis Method (SAAM)

- Client Server Architecture

- Architectural Risk

- Architectural Pattern

- Architectural Principles

- Architecture

- Architecture Description Language (ADL)

- Architecture Development Method (ADM)

- Service Oriented Architecture (SOA)

- The Open Group Architecture Framework (TOGAF)

- Architecture Driven Modernization

- Design Pattern

- Adaptive Enterprise Framework (AEF)

- Enterprise Architecture

- Architectural Style

References

- [1] Definition of Software Architecture

- [2] What is Software Architecture?

- [3] Explaining Software Architecture

- [4] Understanding Software Architecture

- [5] History and Evolution of Software Architecture

- [6] Scope of Software Architecture

- [7] The Five Software Architecture Patterns

- [8] What’s the relationship between software architecture and software design?

- [9] Common Impediments to Achieving Architectural Success

Further Reading

- Software Architecture 101: What Makes it Good?

- An Introduction to Software Architecture

- Key Principles of Software Architecture

- The Importance of Software Architecture

- A Study on the Role of Software Architecture in the Evolution and Quality of Software

- Measurable Quality Characteristics of a Software System on Software Architecture Level