Distributed Computing

Distributed computing is a type of computing in which the components and objects that make up an application can be located on different computers connected to a network. For example, a word-processing application might consist of an editor component on one computer, a spell-checker object on a second computer, and a thesaurus on a third computer. In some distributed computing systems, each of the three computers could be running a different operating system. Distributed computing therefore requires a set of standards that specify how objects communicate. Two chief distributed computing standards are CORBA and DCOM.[1]

The ultimate goal of distributed computing is to maximize performance by connecting users and IT resources in a cost-effective, transparent, and reliable manner. It also aims to provide fault tolerance and to keep resources accessible when one of the components fails. The idea of distributing resources within a computer network is not new. It began with data-entry terminals attached to mainframe computers, moved on to minicomputers, and is now possible with personal computers and multi-tier client-server architectures.

A distributed computing architecture consists of a number of client machines with very lightweight software agents installed, together with one or more dedicated distributed computing management servers. The agent running on a client machine usually detects when the machine is idle and notifies the management server that the machine is not in use and is available for a processing job. The agent then requests an application package. When the client machine receives this package from the management server, it runs the application software whenever it has free CPU cycles and sends the result back to the management server. When the user returns and needs the machine again, the management server returns the resources that were being used for these tasks in the user's absence.[2]
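The agent and management-server workflow described above can be sketched as a small single-process simulation. This is only an illustration under stated assumptions: the class names `ManagementServer` and `Agent`, the `tick` method, and the idea of a job as "sum the squares of a list" are all hypothetical, and real systems would communicate over a network rather than through direct method calls.

```python
from collections import deque


class ManagementServer:
    """Hypothetical server that hands out work packages and collects results."""

    def __init__(self, jobs):
        self.pending = deque(jobs)  # (job_id, numbers) pairs waiting for a machine
        self.results = {}

    def request_package(self):
        # Called by an idle agent; returns None when no work is left.
        return self.pending.popleft() if self.pending else None

    def submit_result(self, job_id, value):
        self.results[job_id] = value


class Agent:
    """Hypothetical lightweight agent installed on a client machine."""

    def __init__(self, name, server):
        self.name = name
        self.server = server

    def tick(self, machine_idle):
        # Only take work while the machine's owner is not using it.
        if not machine_idle:
            return None
        package = self.server.request_package()
        if package is None:
            return None
        job_id, numbers = package
        # The "application" here is an assumed toy job: sum of squares.
        self.server.submit_result(job_id, sum(x * x for x in numbers))
        return job_id


def run_demo():
    server = ManagementServer([(0, [1, 2, 3]), (1, [4, 5]), (2, [6])])
    agents = [Agent("pc-a", server), Agent("pc-b", server)]
    # Idle flags per round: pc-a is in use during round 0, both are idle afterwards.
    idle_schedule = [(False, True), (True, True), (True, True)]
    for idle_flags in idle_schedule:
        for agent, idle in zip(agents, idle_flags):
            agent.tick(idle)
    return server.results


print(run_demo())
```

In this sketch a busy machine simply skips its turn, so the server never needs to reclaim anything; a fuller model would also have to handle a user returning in the middle of a job.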

Like other areas of computer science, distributed computing spans a wide range of subjects, from the applied to the very theoretical. On the theory side, distributed computing is a rich source of mathematically interesting problems in which an algorithm is pitted against an adversary representing the unpredictable elements of the system. Analysis of distributed algorithms often has a strong game-theoretic flavor because executions involve a complex interaction between the algorithm’s behavior and the system’s responses. Michael Fischer is one of the pioneering researchers in the theory of distributed computing. His work on using adversary arguments to prove lower bounds and impossibility results has shaped much of the research in the area. He is actively involved in studying security issues in distributed systems, including cryptographic tools and trust management. James Aspnes’ research emphasizes using randomization to solve fundamental problems in distributed computing. Many problems that are difficult or impossible to solve using a deterministic algorithm can be solved if processes can flip coins. Analyzing the resulting algorithms often requires non-trivial techniques from probability theory.[3]
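As a small illustration of how coin flips can help, consider choosing a single leader among identical, anonymous processes. If every process runs the same deterministic code from the same starting state, they all make the same decisions and the symmetry is never broken. The sketch below uses a textbook-style randomized elimination scheme; it is not taken from the cited work. It assumes a synchronous system in which every process sees all flips of a round, and the function name `elect_leader` and the integer indices are hypothetical, used only by the simulator.

```python
import random


def elect_leader(n, rng):
    """Simulate randomized leader election among n anonymous processes.

    Each round, every remaining candidate flips a fair coin. If at least one
    candidate gets heads, the candidates that got tails drop out. The loop
    ends when exactly one candidate remains.
    """
    candidates = list(range(n))  # indices exist only so the simulator can track processes
    rounds = 0
    while len(candidates) > 1:
        rounds += 1
        flips = {p: rng.random() < 0.5 for p in candidates}  # True means heads
        heads = [p for p in candidates if flips[p]]
        if heads:  # if nobody got heads, everyone stays in for another round
            candidates = heads
    return candidates[0], rounds


leader, rounds = elect_leader(8, random.Random(0))
print(f"process {leader} elected after {rounds} round(s)")
```

The number of rounds is itself a random variable, which is why the analysis of such algorithms relies on probability theory: each round roughly halves the candidates in expectation, so the election typically finishes in a number of rounds logarithmic in `n`.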

Parallel and Distributed Computing[4]

Distributed systems are groups of networked computers that work toward a common goal. The terms "concurrent computing", "parallel computing", and "distributed computing" overlap considerably, and no clear distinction exists between them. The same system may be characterized both as "parallel" and "distributed"; the processors in a typical distributed system run concurrently in parallel. Parallel computing may be seen as a particularly tightly coupled form of distributed computing, and distributed computing may be seen as a loosely coupled form of parallel computing. Nevertheless, it is possible to roughly classify concurrent systems as "parallel" or "distributed" using the following criteria:

In parallel computing, all processors may have access to shared memory to exchange information between processors. In distributed computing, each processor has its own private memory (distributed memory). Information is exchanged by passing messages between the processors.
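The two styles of exchanging information can be contrasted in a short sketch. It is only an analogy under stated assumptions: threads inside one Python process stand in for processors, a `queue.Queue` stands in for a communication link, and the function names `shared_memory_sum` and `message_passing_sum` are hypothetical.

```python
import queue
import threading


def shared_memory_sum(chunks):
    """Parallel style: every worker updates one shared memory location."""
    total = {"value": 0}  # memory visible to every worker
    lock = threading.Lock()

    def worker(chunk):
        partial = sum(chunk)
        with lock:  # coordinate access to the shared location
            total["value"] += partial

    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total["value"]


def message_passing_sum(chunks):
    """Distributed style: workers keep private state and send messages."""
    inbox = queue.Queue()  # stands in for a communication link to a coordinator

    def worker(chunk):
        partial = sum(chunk)  # private, local state only
        inbox.put(partial)  # the result is shared only by sending a message

    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    total = sum(inbox.get() for _ in chunks)  # receive one message per worker
    for t in threads:
        t.join()
    return total


data = [[1, 2, 3], [4, 5], [6]]
print(shared_memory_sum(data), message_passing_sum(data))
```

Both functions compute the same result; the difference lies in how the partial sums reach their destination. The first needs a lock because several workers write to the same location, while the second needs no lock because no worker ever touches another worker's data.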

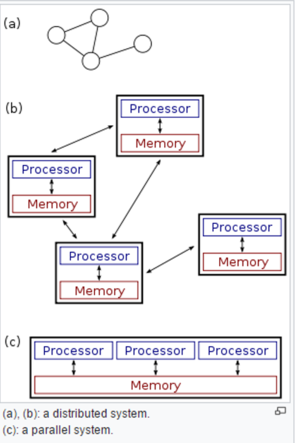

The figure above illustrates the difference between distributed and parallel systems. Figure (a) is a schematic view of a typical distributed system; as usual, the system is represented as a network topology in which each node is a computer and each line connecting the nodes is a communication link. Figure (b) shows the same distributed system in more detail: each computer has its own local memory, and information can be exchanged only by passing messages from one node to another over the available communication links. Figure (c) shows a parallel system in which each processor has direct access to a shared memory. The situation is further complicated by the traditional uses of the terms "parallel algorithm" and "distributed algorithm", which do not quite match the above definitions of parallel and distributed systems. Nevertheless, as a rule of thumb, high-performance parallel computation in a shared-memory multiprocessor uses parallel algorithms, while the coordination of a large-scale distributed system uses distributed algorithms.

See Also

References