End-to-End Principle

Definition of End-to-End Principle[1]

The end-to-end principle is a classic design principle in computer networking. In networks designed according to the principle, application-specific features reside in the communicating end nodes of the network, rather than in intermediary nodes, such as gateways and routers, that exist to establish the network. The end-to-end principle originated in the work by Paul Baran in the 1960s, which addressed the requirement of network reliability when the building blocks are inherently unreliable. It was first articulated explicitly in 1981 by Saltzer, Reed, and Clark.

A basic premise of the principle is that the payoffs from adding features to a simple network quickly diminish, especially in cases in which the end hosts have to implement those functions only for reasons of conformance, i.e. completeness and correctness based on a specification. Furthermore, as implementing any specific function incurs some resource penalties regardless of whether the function is used or not, implementing a specific function in the network distributes these penalties among all clients, regardless of whether they use that function or not.

The canonical example of the end-to-end principle is an arbitrarily reliable file transfer between two end-points in a distributed network of some nontrivial size. The only way two end-points can obtain a completely reliable transfer is by transmitting and acknowledging a checksum for the entire data stream; in such a setting, lesser checksum and acknowledgment (ACK/NACK) protocols are justified only for the purpose of optimizing performance. They are useful to the vast majority of clients, but they are not enough to fulfill the reliability requirement of this particular application. A thorough checksum is therefore best done at the end-points, while the network maintains a relatively low level of complexity and reasonable performance for all clients.

The end-to-end principle is closely related to, and sometimes seen as a direct precursor of, the principle of net neutrality.

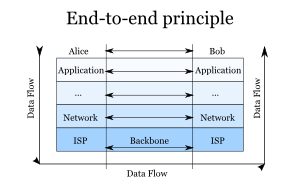

Net neutrality is business terminology for a fundamentally technical concept, the so-called end-to-end principle. The Internet is based on multiple layers of various protocols. The user interacts with the top layer, which passes the data down to the next layer. At the bottom of the stack, there is some physical medium, like a cable or a radio wave (essentially the ISP). On the receiving side, a similar stack of protocols handles the data from bottom to top until it reaches the user on the other end. The layers have no knowledge of the data being passed from above and do not alter it in any way. Each layer communicates directly with the matching layer on the other end. Processing happens at the communicating parties' ends, not in transit, hence the name.

source: Stef Blog

This end-to-end principle decentralizes the intelligence in the network. Telecom companies coming from a classical telephony background are used to having the intelligence of the network under their control, at the "center". On the Internet, however, the intelligence is at the edges, with the clients, while the network itself is mostly dumb. ISPs are just one of the lower layers in this stack. Thus, any kind of content sent through an ISP should be processed regardless of its source, its destination, or the data it contains. That is the end-to-end principle, and it is also what is now being relabeled as network neutrality. It is imperative to oblige infrastructure providers to adhere to the end-to-end principle and handle all data without prejudice.[2]
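The layered stack described in the quoted passage can be sketched as a toy model in Python. This is an illustration under assumptions, not a real protocol stack: the layer names and the bracketed text headers are hypothetical stand-ins for real binary header formats. Each layer adds its own header on the way down and removes only its peer's header on the way up; no layer looks inside the payload it was handed.

```python
from typing import List

# Hypothetical layer names, top of the stack first; real stacks use binary headers.
LAYERS: List[str] = ["APP", "TRANSPORT", "NETWORK", "LINK"]


def send_down(message: bytes) -> bytes:
    """Encapsulate: each layer prepends its header, so LINK ends up outermost."""
    frame = message
    for layer in LAYERS:
        frame = f"[{layer}]".encode() + frame  # payload carried as-is, never modified
    return frame


def receive_up(frame: bytes) -> bytes:
    """Decapsulate: each layer strips only the header added by its peer layer."""
    for layer in reversed(LAYERS):
        header = f"[{layer}]".encode()
        if not frame.startswith(header):
            raise ValueError(f"{layer}: expected header from peer layer")
        frame = frame[len(header):]
    return frame


frame = send_down(b"hello")
print(frame)              # b'[LINK][NETWORK][TRANSPORT][APP]hello'
print(receive_up(frame))  # b'hello'
```

Because `receive_up` strips exactly one header per layer, even a payload that happens to begin with text like `[APP]` comes out unchanged: the lower layers treat it as opaque bytes.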

Concept and Example of End-to-End Principle[3]

Concept

The fundamental notion behind the end-to-end principle is that, for two processes communicating with each other via some communication means, the reliability obtained from that means cannot be expected to be perfectly aligned with the reliability requirements of the processes. In particular, meeting or exceeding the very high reliability requirements of communicating processes separated by networks of nontrivial size is more costly than obtaining the required degree of reliability by positive end-to-end acknowledgments and retransmissions (referred to as PAR, positive acknowledgment with retransmission, or ARQ, automatic repeat request). Put differently, it is far easier to obtain reliability beyond a certain margin through mechanisms in the end hosts of a network than through mechanisms in the intermediary nodes, especially when the latter are beyond the control of, and not accountable to, the former. Positive end-to-end acknowledgments with infinite retries can obtain arbitrarily high reliability from any network that has a nonzero probability of successfully transmitting data from one end to another.
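The last claim is a statement about repeated trials. Assuming each end-to-end attempt (data out, acknowledgment back) succeeds independently with probability p > 0, the chance that n attempts all fail is (1 - p)^n, which shrinks toward zero as n grows. The Python sketch below, with hypothetical function names, computes the reliability after n attempts and the number of attempts needed to reach a target. Independence is a simplifying assumption; real losses are often correlated.

```python
def reliability_after(p: float, attempts: int) -> float:
    """Probability that at least one of `attempts` independent tries succeeds,
    when each try (data plus its ACK) gets through with probability p."""
    return 1.0 - (1.0 - p) ** attempts


def attempts_needed(p: float, target: float) -> int:
    """Smallest number of tries whose combined reliability reaches `target`.
    Any p > 0 eventually gets there; only the number of tries grows."""
    if not (0.0 < p <= 1.0 and 0.0 <= target < 1.0):
        raise ValueError("need 0 < p <= 1 and 0 <= target < 1")
    if 1.0 - p == 1.0:
        raise ValueError("p is too small to represent in floating point")
    n = 1
    while reliability_after(p, n) < target:
        n += 1
    return n


def expected_attempts(p: float) -> float:
    """Mean of the geometric distribution: average tries until the first success."""
    return 1.0 / p


print(attempts_needed(0.5, 0.999))  # 10
print(expected_attempts(0.25))      # 4.0
```

Even with only a coin-flip chance of getting through end to end, ten attempts give better than 99.9% reliability, and the average cost is 1/p transmissions. The arithmetic says nothing about how long those retries take, which is one reason the argument does not carry over to latency.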

The end-to-end principle does not trivially extend to functions beyond end-to-end error control and correction. For example, no straightforward end-to-end arguments can be made for communication parameters such as latency and throughput. In a 2001 paper, Blumenthal and Clark note: "From the beginning, the end-to-end arguments revolved around requirements that could be implemented correctly at the endpoints; if implementation inside the network is the only way to accomplish the requirement, then an end-to-end argument isn't appropriate in the first place."

The basic notion: reliability from unreliable parts

In the 1960s, Paul Baran and Donald Davies, in their pre-ARPANET elaborations of networking, made brief comments about reliability that capture the essence of the later end-to-end principle. To quote from a 1964 Baran paper, "Reliability and raw error rates are secondary. The network must be built with the expectation of heavy damage anyway. Powerful error removal methods exist." Similarly, Davies notes on end-to-end error control, "It is thought that all users of the network will provide themselves with some kind of error control and that without difficulty this could be made to show up a missing packet. Because of this, loss of packets, if it is sufficiently rare, can be tolerated."

The French CYCLADES network was the first to make the hosts responsible for the reliable delivery of data, rather than this being a centralized service of the network itself.

Early trade-offs: experiences in the ARPANET

The ARPANET was the first large-scale general-purpose packet switching network – implementing several of the basic notions previously touched on by Baran and Davies, and demonstrating several important aspects of the end-to-end principle:

- Packet switching pushes some logical functions toward the communication endpoints: If the basic premise of a distributed network is packet switching, then functions such as reordering and duplicate detection inevitably have to be implemented at the logical endpoints of such a network. Consequently, the ARPANET featured two distinct levels of functionality:

  - a lower level concerned with transporting data packets between neighboring network nodes (called IMPs), and

  - a higher level concerned with various end-to-end aspects of data transmission.

  Dave Clark, one of the authors of the end-to-end principle paper, concludes: "The discovery of packets is not a consequence of the end-to-end argument. It is the success of packets that make the end-to-end argument relevant."

- No arbitrarily reliable data transfer without end-to-end acknowledgment and retransmission mechanisms: The ARPANET was designed to provide reliable data transport between any two end points of the network, much like a simple I/O channel between a computer and a nearby peripheral device. To remedy potential failures of packet transmission, normal ARPANET messages were handed from one node to the next with a positive acknowledgment and retransmission scheme; after a successful handover, the sending node discarded its copy, and no source-to-destination retransmission was provided in case of packet loss. However, in spite of significant efforts, the perfect reliability envisaged in the initial ARPANET specification turned out to be impossible to provide, a reality that became increasingly obvious once the ARPANET grew well beyond its initial four-node topology. The ARPANET thus provided a strong case for the inherent limits of network-based hop-by-hop reliability mechanisms in pursuit of true end-to-end reliability.

- Trade-off between reliability, latency, and throughput: The pursuit of perfect reliability may hurt other relevant parameters of a data transmission, most importantly latency and throughput. This is particularly important for applications that value predictable throughput and low latency over reliability, the classic example being interactive real-time voice applications. The ARPANET catered for this use case by providing a raw message service that dispensed with various reliability measures in order to offer faster, lower-latency data transmission to the end hosts.
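The first point, that packet switching pushes reordering and duplicate detection to the endpoints, can be illustrated with a small receive buffer. This is a sketch under assumptions: the class name `ReorderBuffer` is hypothetical, and every packet is assumed to carry a sequence number starting at 0. Because the buffer tracks which numbers have arrived, it can also "show up a missing packet", in Davies's phrase.

```python
from typing import Dict, List


class ReorderBuffer:
    """Endpoint-side state: restores order, discards duplicates, reports gaps."""

    def __init__(self) -> None:
        self.next_seq = 0                    # next sequence number owed to the application
        self.pending: Dict[int, bytes] = {}  # early arrivals held back until their turn
        self.duplicates = 0                  # copies that arrived more than once

    def receive(self, seq: int, payload: bytes) -> List[bytes]:
        """Accept one packet; return the payloads that are now deliverable in order."""
        if seq < self.next_seq or seq in self.pending:
            self.duplicates += 1             # already delivered or already buffered
            return []
        self.pending[seq] = payload
        ready: List[bytes] = []
        while self.next_seq in self.pending:  # release any contiguous run
            ready.append(self.pending.pop(self.next_seq))
            self.next_seq += 1
        return ready

    def missing(self) -> List[int]:
        """Sequence numbers that must exist (a later one arrived) but have not shown up."""
        if not self.pending:
            return []
        return [s for s in range(self.next_seq, max(self.pending)) if s not in self.pending]


buf = ReorderBuffer()
print(buf.receive(1, b"B"))  # []  (held: 0 has not arrived yet)
print(buf.receive(0, b"A"))  # [b'A', b'B']
print(buf.receive(0, b"A"))  # []  (duplicate)
print(buf.receive(3, b"D"))  # []
print(buf.missing())         # [2]
```

None of this state lives in the network: the IMP-like nodes in the middle only move packets, and the higher level at each end decides what order and which copies the application sees.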
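The ARPANET lesson about hop-by-hop reliability can be reduced to a toy model. In the Python sketch below, the function names are hypothetical and a node "crash" is an injected fault rather than a model of real IMP behaviour. Every handover is acknowledged and the upstream node then discards its copy, as in the ARPANET scheme described above, so a node that fails after acknowledging takes the only copy with it. Only a source that keeps its own copy until the destination acknowledges can recover.

```python
from typing import List, Optional, Tuple


def hop_by_hop_transfer(packet: bytes, path_len: int,
                        crash_node: Optional[int]) -> Optional[bytes]:
    """Pass the packet from node 0 (source) to node `path_len` (destination).
    Each handover is ACKed and the sender then discards its copy, so `copy`
    below is the only copy in existence. Returns what the destination gets."""
    copy: Optional[bytes] = packet
    for node in range(1, path_len + 1):
        # `node` receives and ACKs; the previous node has now dropped its copy.
        if node == crash_node:
            copy = None  # failed after ACKing: nobody upstream can resend
            break
    return copy


def end_to_end_transfer(packet: bytes, path_len: int,
                        crash_per_attempt: List[Optional[int]]) -> Tuple[bytes, int]:
    """Same path, but the source keeps its copy until the destination ACKs.
    crash_per_attempt[i] is the node that fails during attempt i (None = no fault).
    Returns the delivered packet and the number of attempts used."""
    for attempt, crash_node in enumerate(crash_per_attempt, start=1):
        received = hop_by_hop_transfer(packet, path_len, crash_node)
        if received is not None:
            return received, attempt  # destination ACK: now safe to discard
        # no end-to-end ACK before the timeout: resend from the retained copy
    raise RuntimeError("every scheduled attempt failed")


print(hop_by_hop_transfer(b"msg", 4, crash_node=2))  # None
print(end_to_end_transfer(b"msg", 4, [2, None]))     # (b'msg', 2)
```

In the hop-by-hop case the source saw a successful handover and has no way of knowing the data was lost; the end-to-end version notices the missing acknowledgment and simply tries again.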

The canonical case: TCP/IP

Internet Protocol (IP) is a connectionless datagram service with no delivery guarantees. On the Internet, IP is used for nearly all communications. End-to-end acknowledgment and retransmission are the responsibility of the connection-oriented Transmission Control Protocol (TCP), which sits on top of IP. The functional split between IP and TCP exemplifies the proper application of the end-to-end principle to transport protocol design.
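The TCP/IP split can be mimicked in miniature: a best-effort datagram channel standing in for IP, and a reliability layer on top that numbers its data, waits for acknowledgments, and retransmits. The sketch below is a stop-and-wait ARQ, far simpler than real TCP (which uses sliding windows, cumulative acknowledgments, and adaptive timeouts); the class and function names, the loss rate, and the seed are all assumptions for illustration. The channel never inspects the data it carries.

```python
import random
from typing import Any, List, Optional, Tuple


class LossyDatagramChannel:
    """Best-effort delivery, like IP: each datagram is independently dropped."""

    def __init__(self, loss_rate: float, seed: int = 0) -> None:
        if not 0.0 <= loss_rate < 1.0:
            raise ValueError("loss_rate must be in [0, 1)")
        self.loss_rate = loss_rate
        self.rng = random.Random(seed)  # seeded so runs are reproducible

    def send(self, datagram: Any) -> Optional[Any]:
        """Return the datagram, or None if the network dropped it."""
        return None if self.rng.random() < self.loss_rate else datagram


def stop_and_wait(chunks: List[bytes], channel: LossyDatagramChannel) -> Tuple[List[bytes], int]:
    """Reliable, in-order, exactly-once delivery over the lossy channel.
    Returns (payloads delivered to the receiving application, data transmissions)."""
    delivered: List[bytes] = []
    expected_seq = 0   # receiver state: next new sequence number
    transmissions = 0  # sender statistics
    for seq, chunk in enumerate(chunks):
        while True:  # keep sending this chunk until its ACK comes back
            transmissions += 1
            arrived = channel.send((seq, chunk))
            if arrived is None:
                continue  # data lost: the sender times out and retransmits
            rseq, payload = arrived
            if rseq == expected_seq:  # new data: hand it up exactly once
                delivered.append(payload)
                expected_seq += 1
            # the receiver ACKs every copy, so a lost ACK is repaired by the resend
            ack = channel.send(rseq)
            if ack == seq:
                break  # end-to-end ACK received: move on to the next chunk
    return delivered, transmissions


data = [f"chunk-{i}".encode() for i in range(20)]
received, sent = stop_and_wait(data, LossyDatagramChannel(loss_rate=0.3, seed=42))
print(received == data, sent >= len(data))  # True True
```

All of the reliability logic lives in `stop_and_wait`, at the ends; the channel stays simple, and an application that prefers low latency over reliability could use it directly.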

Example

An example of the end-to-end principle is that of an arbitrarily reliable file transfer between two endpoints in a distributed network of varying, nontrivial size. The only way two endpoints can obtain a completely reliable transfer is by transmitting and acknowledging a checksum for the entire data stream; in such a setting, lesser checksum and acknowledgment (ACK/NACK) protocols are justified only for the purpose of optimizing performance. They are useful to the vast majority of clients, but they are not enough to fulfill the reliability requirement of this particular application, because errors can also arise where no per-link check can see them, for example in the buffers of an intermediate node or in the end hosts' own storage and memory. A thorough checksum is therefore best done at the endpoints, while the network maintains a relatively low level of complexity and reasonable performance for all clients.
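The file-transfer example can be reproduced with Python's standard `hashlib` and `zlib` modules. The model is an assumption for illustration: one relay sits between the endpoints, every link frame carries a CRC-32 that the next hop verifies, and the relay can flip a bit in its own buffer after verifying a frame and before re-framing it. Every per-hop check then passes, and only the end-to-end SHA-256 digest of the whole stream exposes the damage. The function names are hypothetical.

```python
import hashlib
import zlib
from typing import Optional, Tuple

Frame = Tuple[bytes, int]  # (payload, CRC-32 computed by the sending hop)


def frame(chunk: bytes) -> Frame:
    """Link-level framing: attach a per-hop CRC-32."""
    return chunk, zlib.crc32(chunk)


def check(f: Frame) -> bytes:
    """Per-hop verification done by whichever node receives the frame."""
    chunk, crc = f
    if zlib.crc32(chunk) != crc:
        raise ValueError("link error caught by per-hop CRC")
    return chunk


def relay(f: Frame, corrupt: bool) -> Frame:
    """Verify the incoming frame, then re-frame it for the next link.
    A fault in the relay's memory between the two steps is invisible to CRCs."""
    chunk = check(f)
    if corrupt:
        chunk = bytes([chunk[0] ^ 0x01]) + chunk[1:]  # single bit flip in the buffer
    return frame(chunk)


def transfer(data: bytes, chunk_size: int = 4, corrupt_chunk: Optional[int] = None) -> bytes:
    """Source -> relay -> destination, chunk by chunk; returns the reassembled bytes."""
    received = b""
    for index, start in enumerate(range(0, len(data), chunk_size)):
        f = frame(data[start:start + chunk_size])
        f = relay(f, corrupt=(index == corrupt_chunk))
        received += check(f)  # the destination's per-hop check always passes
    return received


def end_to_end_ok(sender_digest: bytes, received: bytes) -> bool:
    """The end-to-end check: recompute a digest over the whole stream and compare."""
    return hashlib.sha256(received).digest() == sender_digest


original = b"end-to-end arguments in system design"
digest = hashlib.sha256(original).digest()  # sent alongside the data by the source
print(end_to_end_ok(digest, transfer(original)))                   # True
print(end_to_end_ok(digest, transfer(original, corrupt_chunk=2)))  # False
```

On a mismatch the receiving endpoint would ask for the file again. The per-hop CRCs still earn their keep as a performance optimization, catching most link errors early, but they cannot replace the end-to-end check.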

Pros and Cons of End-to-End Principle[4]

Pros of the End-to-End principle

- Don’t impose a performance penalty on applications that don’t need it

  - If we put reliability into Ethernet, IP, etc., then apps that don’t need reliability pay for it

- Complex middle = complex interface. Specifying policy is HARD!

  - ATM (Asynchronous Transfer Mode) failed. This is part of the reason.

- You need it at the endpoints anyway; may as well not do it twice

  - Checksums on big files

- By keeping state at the end-points, not in the network, the only failure you can’t survive is a failure of the end-points

- The middle of the network is (historically) hard to change; keeping functions at the end-points fosters innovation by anyone, without being a “big player”.

Cons of the End-to-End principle

- Loss of efficiency

- The end-to-end principle is sometimes relaxed

- End-points are also hard to change en masse

  - New versions of TCP can’t “just be deployed”

- End-points no longer trust each other to be good actors. Result?

  - Routers now enforce bandwidth fairness (RED, Random Early Detection)

  - Firewalls now impose security restrictions

  - Caches now intercept your requests and satisfy them

    - Akamai and other CDNs (“reverse caching”): good design

    - Transparent proxy caching: breaks things
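As an example of logic that has moved into routers, the core of Random Early Detection (RED), described by Floyd and Jacobson in 1993, fits in a few lines. RED was designed for congestion avoidance: it tracks an exponentially weighted moving average of the queue length and drops (or marks) arriving packets with a probability that rises linearly between two thresholds, which tends to spread drops across flows roughly in proportion to their share of the traffic. The Python sketch below is simplified: it omits RED's adjustment based on the number of packets since the last drop, and the class name, threshold values, and weight are illustrative assumptions rather than recommended settings.

```python
import random
from dataclasses import dataclass


@dataclass
class RedQueue:
    """Simplified RED: EWMA of queue length plus a linear drop-probability ramp."""
    min_th: float = 5.0   # packets; below this average, never drop early (assumed value)
    max_th: float = 15.0  # packets; at or above this average, drop every arrival
    max_p: float = 0.1    # drop probability reached just below max_th
    w_q: float = 0.002    # weight of the newest queue sample in the average
    avg: float = 0.0      # running average queue length

    def update_avg(self, queue_len: int) -> float:
        self.avg = (1.0 - self.w_q) * self.avg + self.w_q * queue_len
        return self.avg

    def drop_probability(self) -> float:
        if self.avg < self.min_th:
            return 0.0
        if self.avg >= self.max_th:
            return 1.0
        return self.max_p * (self.avg - self.min_th) / (self.max_th - self.min_th)

    def on_arrival(self, queue_len: int, rng: random.Random) -> bool:
        """Return True if the arriving packet should be dropped (or ECN-marked)."""
        self.update_avg(queue_len)
        return rng.random() < self.drop_probability()


q = RedQueue(avg=10.0)
print(q.drop_probability())  # 0.05
```

The point for the end-to-end discussion is where this code runs: in the middle of the network, making per-packet decisions that the end-points neither requested nor control.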

See Also

References

Further Reading

- End-to-End Arguments in System Design - J.H. Saltzer, D.P. Reed and D.D. Clark

- The end-to-end principle in distributed systems - Ted Kaminski

- End to End Principle in Internet Architecture as a Core Internet Value - Core Internet Values Org

- Can the End-to-End Principle Survive? - Phil Karn

- End-to-End Arguments in the Internet: Principles, Practices, and Theory - Matthias Bärwolff

- The End of End-to-End? - Technology Review