Predictive Modeling

What is Predictive Modeling?

Predictive modeling is a statistical technique for predicting future behaviors or outcomes from historical data. It can help organizations improve underwriting accuracy, detect fraud, and understand customer behavior. Predictive modeling draws on a variety of techniques, such as machine learning and statistical analysis, to make its predictions. By learning the underlying patterns within data sets, predictive models can provide valuable insights into potential future events or outcomes, which organizations can then use to better manage risk and operations.

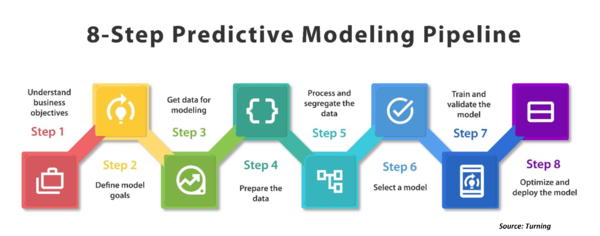

What are the steps of Predictive Modeling?

Step 1: Assess your data: Assessing the data before modeling gives you a better understanding of it, reveals underlying trends or patterns, and helps ensure that the model produces accurate and useful results. An early assessment shows whether there are missing values or outliers that could affect the model's accuracy. It can also identify areas where additional features may need to be included to create an effective predictive model.
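A minimal sketch of such an assessment in plain Python. The records, the column names `age` and `income`, and the rule that flags values more than 1.5 times the interquartile range (IQR) outside the quartiles are illustrative assumptions, not part of the original text; in practice this is usually done with a data-frame library.

```python
import statistics

# Hypothetical records; None marks a missing value.
records = [
    {"age": 34, "income": 52000},
    {"age": None, "income": 61000},
    {"age": 29, "income": None},
    {"age": 41, "income": 58000},
    {"age": 38, "income": 950000},   # suspiciously large income
    {"age": 45, "income": 63000},
    {"age": 31, "income": 55000},
]

def profile(rows, column):
    """Summarize one column: missing count, mean, median, and IQR-based outliers."""
    values = [r[column] for r in rows if r[column] is not None]
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    fence = 1.5 * (q3 - q1)
    return {
        "missing": len(rows) - len(values),
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "outliers": [v for v in values if v < q1 - fence or v > q3 + fence],
    }

for column in ("age", "income"):
    print(column, profile(records, column))
```

Here the summary shows one missing value in each column and flags the 950000 income as an outlier worth investigating before modeling.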

Step 2: Ask the right questions: Asking the right questions is essential to ensure that the model addresses the actual business problem. The questions should define the scope and requirements of the problem and guide every later stage: data collection and preprocessing, data cleaning and exploratory data analysis (EDA), model building, model validation, deployment, and ongoing monitoring. Asking these questions early makes the whole predictive modeling process more likely to succeed.

Step 3: Set off: Step 3 is significant because it turns predicted outcomes into recommendations. In a hospital environment, for example, this allows for tailored treatments and better patient outcomes than traditional approaches. This step also helps assess the accuracy and potential applications of the predictive model, helping healthcare providers make more informed decisions about how best to serve their patients.

Step 4: Develop the model: Developing the model is the step in which the data is analyzed and turned into meaningful predictions. The model provides a framework for interpreting the data and for predicting future outcomes based on current trends. A well-defined model also makes it possible to track, monitor, and validate results over time so that the model can be continually improved.

Step 5: Launch the predictive model: Launching the model puts its predictions to work. In healthcare, for example, a deployed model can help organizations better understand and predict patient outcomes, streamline processes, and optimize resources. By drawing on data such as past patient medical histories, treatments, lab test results, and other relevant information, predictive models can give a more accurate picture of the likely outcome for each individual case. This helps hospitals make faster, better-informed decisions about patient care and resource allocation.

Step 6: Ensure continuity: Continuity helps ensure that the model keeps providing reliable and accurate predictions. When parameters and data definitions are kept consistent over time, the model can identify trends in past events that are useful for predicting future outcomes, and a continuous flow of fresh data keeps the model up to date with current conditions.

Step 7: Clean your data to avoid a misleading model: Data should be cleaned before modeling because incorrect or incomplete information leads to inaccurate predictions. Cleaning the data beforehand helps avoid unexpected results and errors later in the process. Data preparation is often said to account for as much as 80% of the effort in predictive modeling, which makes it a key factor in building successful models.
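A minimal cleaning sketch in plain Python, assuming rows stored as dictionaries. The three fixes shown (dropping exact duplicates, filling missing ages with the median, and normalizing inconsistent category labels) and all field names are illustrative assumptions; which fixes a real dataset needs depends on what the assessment in Step 1 uncovers.

```python
import statistics

# Hypothetical raw rows: a duplicate, missing values, and inconsistent labels.
raw = [
    {"id": 1, "age": 34, "region": "North"},
    {"id": 2, "age": None, "region": "south"},
    {"id": 2, "age": None, "region": "south"},   # exact duplicate
    {"id": 3, "age": 41, "region": " South "},
    {"id": 4, "age": 29, "region": "north"},
]

def clean(rows):
    # 1. Drop exact duplicate rows while preserving order (copies, so raw is untouched).
    seen, unique = set(), []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            unique.append(dict(row))
    # 2. Impute missing ages with the median of the observed ages.
    median_age = statistics.median(r["age"] for r in unique if r["age"] is not None)
    for row in unique:
        if row["age"] is None:
            row["age"] = median_age
        # 3. Normalize category labels: trim whitespace and fix capitalization.
        row["region"] = row["region"].strip().title()
    return unique

cleaned = clean(raw)
```

The median is used for imputation here because, unlike the mean, it is not pulled around by extreme values.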

Step 8: Create new, useful variables to understand your records: Creating new variables (often called feature engineering) typically involves merging tables and extracts and deriving new fields from them. The same derived variables can support reporting and ad-hoc questions, improve existing reports, and help target outcomes such as retention or on-time completion.
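A minimal sketch of creating new variables by joining two tables, assuming a hypothetical customer table and order table. The derived variables (tenure, order count, average order value, days since last order) are illustrative choices, not ones named in the original text.

```python
from datetime import date

# Hypothetical tables: one row per customer, and one row per order.
customers = {
    101: {"signup": date(2023, 1, 15)},
    102: {"signup": date(2023, 6, 1)},
}
orders = [
    {"customer": 101, "day": date(2023, 2, 1), "amount": 40.0},
    {"customer": 101, "day": date(2023, 3, 5), "amount": 60.0},
    {"customer": 102, "day": date(2023, 6, 20), "amount": 25.0},
]

def build_features(customers, orders, as_of):
    """Join orders onto customers and derive new per-customer variables."""
    features = {}
    for cid, info in customers.items():
        mine = [o for o in orders if o["customer"] == cid]
        total = sum(o["amount"] for o in mine)
        last = max((o["day"] for o in mine), default=None)
        features[cid] = {
            "tenure_days": (as_of - info["signup"]).days,
            "order_count": len(mine),
            "avg_order_value": total / len(mine) if mine else 0.0,
            "days_since_last_order": (as_of - last).days if last is not None else None,
        }
    return features

feats = build_features(customers, orders, as_of=date(2023, 7, 1))
```

Computing every variable relative to a fixed `as_of` date keeps the features reproducible and avoids accidentally using information from after the prediction date.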

Step 9: Learn a model: Learning a model means using machine learning algorithms to build and validate a model that predicts outcomes from the data that has been collected and preprocessed. This step is essential because it produces the accurate model that will later be deployed and monitored over time.

Step 10: Make predictions: Making predictions is the last step of the modeling cycle, and it allows predictions to be checked against actual outcomes and corrected. In healthcare, for example, this is where models can be evaluated on whether they improve health care outcomes and can demonstrate that impact. Because predictive modeling is iterative, comparing predictions with what actually happens gives the modeler an opportunity to assess how well the model performed and to make improvements if necessary.
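A minimal sketch of this predict-then-check loop, assuming a hypothetical history of (advertising spend, sales) pairs and a straight-line model fitted by ordinary least squares. The mean absolute error (MAE) computed once actual outcomes arrive is one common way to decide whether the model needs improving; the data and the choice of metric are illustrative assumptions.

```python
# Hypothetical history: (advertising spend, sales) pairs used to fit the model.
history = [(1.0, 3.1), (2.0, 4.9), (3.0, 7.2), (4.0, 8.8)]

def fit_line(points):
    """Ordinary least squares for y = a + b*x."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    b = sum((x - mx) * (y - my) for x, y in points) / sum((x - mx) ** 2 for x, _ in points)
    return my - b * mx, b

a, b = fit_line(history)

# Make predictions for new inputs.
new_inputs = [5.0, 6.0]
predictions = [a + b * x for x in new_inputs]

# Later, when actual outcomes arrive, measure the error as feedback for the next iteration.
actuals = [11.2, 12.7]
mae = sum(abs(p - y) for p, y in zip(predictions, actuals)) / len(actuals)
```

Here the fitted line is y = 1.15 + 1.94x, so the predictions are 10.85 and 12.79 and the MAE is about 0.22.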

Step 11: Validation of Clinical Utility: In clinical settings, a predictive model's clinical utility should be validated to confirm that it is accurate, reliable, and useful in practice. Validation makes it more likely that the model can be applied in clinical settings, and repeating it as new data becomes available helps identify where improvements are needed.

What technologies and tools are used in Predictive Modeling?

- Linear Regression: Linear regression is a statistical and machine learning technique that predicts a continuous dependent variable from one or more independent variables by fitting a linear relationship between them. It can be used to assess trends and generate estimates or forecasts in business and marketing, for example forecasting sales from advertising spend. Predicting how likely a binary event is to occur (for example, whether a passenger survived the Titanic) or forecasting a yes/no customer behavior is usually done with logistic regression, a related technique that models the probability of the outcome.

- Support Vector Machines (SVM): A Support Vector Machine (SVM) is a machine learning model used in predictive modeling. It finds the boundary that separates the classes with the widest possible margin, and the kernel trick lets it draw non-linear boundaries. Maximizing the margin can make SVMs less prone to overfitting than some other classifiers. SVMs are mainly used for classification, and a variant called support vector regression handles regression problems; other techniques used for similar tasks include linear, multiple linear, and polynomial regression, decision trees, and Naive Bayes. SVM models are useful for finding patterns in data that can improve predictive accuracy, for example to reduce fraud risk or to target marketing campaigns.

- Clustering Model: A Clustering Model is a technique used in predictive modeling to group data points together based on shared characteristics. This model has a range of applications, such as grouping customers with similar characteristics for targeted marketing campaigns or identifying patterns and predicting future outcomes by analyzing individual clusters. Additionally, the model can be used to identify unusual data entries that could indicate an impending problem.

- Decision Trees: A decision tree is a predictive modeling tool that predicts a target variable by repeatedly splitting the dataset on its input features, producing a tree of decisions whose leaves are the predicted outcomes. Decision trees are widely used in data mining, which involves extracting information from large datasets, and they can be used alongside techniques such as linear, multiple, and logistic regression to choose the best option from a range of possible decisions. Random Forest builds on decision trees by combining many of them, and decision trees can also be used in combination with other machine learning methods such as neural networks.

- Neural Networks: Neural networks are complex algorithms that learn to recognize patterns in datasets. A basic network has three kinds of layers: an input layer, which passes the data to the hidden layer; one or more hidden layers, which transform the inputs into intermediate features; and an output layer, which combines those features into a prediction. Neural networks can also be combined with other predictive approaches, such as time series or clustering models.

- Gradient Boosted Model: A gradient-boosted model (GBM) is a predictive model built from a series of related decision trees: each new tree is trained to correct the errors left by the trees before it, and the trees' outputs are added together. Because this sequence of trees can express complex patterns in a data set, GBMs are often more accurate than simpler techniques, and they are widely used to create rankings, such as ranking search engine results. Despite their potential benefits for informed business decisions, such models have limitations that need to be taken into consideration when making predictions.

- Random Forest: A random forest is a machine learning method used in predictive modeling to find patterns that a single model may miss. It trains many decision trees, each on a random sample of the data and features, and combines their individual predictions (by majority vote or averaging) into one prediction. Random forests can be used for both classification and regression, are relatively fast to train, and are much less prone to overfitting than a single decision tree, which makes them well suited to large volumes of data. Some implementations can also handle missing values, so they can still make predictions when part of the data is missing.

- ARIMA: ARIMA (AutoRegressive Integrated Moving Average) is a time series model that uses past values and their autocorrelations to predict future values. It is used to understand and forecast patterns in time series data, such as those found in the food industry or the healthcare sector. In healthcare, ARIMA can support clinical risk intervention, predictive analytics, population health management, fee-for-quality models, and other forms of automation. To use it effectively, providers need a standard practice for collecting and integrating data.

- Time Series Model: A time series model analyzes data points collected over time to predict future trends. It can be used to estimate the risk or probability of something happening in the future, such as a spike in customer support requests. It takes into account multiple input parameters, such as the type of data being predicted, the time period to be predicted, and the desired outcome.

- Classification Model: A classification model is a type of predictive model that assigns data points to a group or class. By learning from historical data, it supports decisions that come down to a category, and it is commonly used to answer yes/no questions such as "Will this loan be approved?" or "Will this customer churn soon?" Classification models can also be applied to target marketing campaigns or to predict which visitors to an eCommerce site are likely to convert in a given week. The outlier model is a related technique that flags unusual data points, and it is used for predictive modeling in retail and finance. Time series models, by contrast, use time as an input to predict future trends or events.

- Outliers Model: An outlier model is used in predictive modeling to identify anomalous data, which might indicate a problem with a dataset or with the events it records. For example, it can help detect fraudulent transactions by looking at multiple input parameters, such as amount, location, time, purchase history, and the nature of the purchase. This model can be used for predictive analytics in retail and finance scenarios where historical numerical data is available.

- Forecast Model: Forecast models are used in predictive modeling to estimate numeric values of a metric for new data, based on what was learned from historical data. They work by grouping and analyzing data and can take multiple parameters into account to generate results. Because judgment-based forecasting is prone to human error and personal bias, analysts often use regression or Bayesian models to achieve more accurate predictions, and time series models are popular for identifying patterns in past data that help predict future outcomes. Alongside them, an outlier model is a common tool for detecting fraud and abnormalities in the data. Ultimately, the forecast model is an important part of predictive modeling that helps organizations make better decisions about their operations.
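For the Linear Regression entry in the list above, a minimal sketch of multiple linear regression on a continuous target, written in plain Python by solving the normal equations (X^T X) b = X^T y with Gauss-Jordan elimination. The data are synthetic, generated from y = 2 + 3*x1 - x2, so the fit should recover those coefficients; real projects would normally use a numerical library rather than hand-written elimination.

```python
def fit_linear_regression(X, y):
    """Fit y = b0 + b1*x1 + ... + bk*xk by solving the normal equations."""
    rows = [[1.0] + list(x) for x in X]          # prepend an intercept column
    k = len(rows[0])
    # Build the augmented matrix [X^T X | X^T y].
    A = [[sum(r[i] * r[j] for r in rows) for j in range(k)] +
         [sum(r[i] * t for r, t in zip(rows, y))] for i in range(k)]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * c for a, c in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]

def predict(coef, x):
    """Intercept plus the weighted sum of the features."""
    return coef[0] + sum(c * v for c, v in zip(coef[1:], x))

# Synthetic data generated from y = 2 + 3*x1 - 1*x2.
X = [(1, 2), (2, 1), (3, 4), (4, 3), (5, 5)]
y = [3, 7, 7, 11, 12]
coef = fit_linear_regression(X, y)
```

`coef` comes out as approximately `[2.0, 3.0, -1.0]`, and `predict(coef, (6, 2))` returns about 18.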
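For the Support Vector Machines entry, a minimal sketch of a linear SVM trained by batch sub-gradient descent on the regularized hinge loss, using the 1/(lambda*t) step size popularized by the Pegasos algorithm. This is a simplified stand-in: it uses no kernel, regularizes the bias along with the weights, and the toy data and parameter values (`lam`, `epochs`) are illustrative assumptions.

```python
def train_linear_svm(X, y, lam=0.01, epochs=200):
    """Minimize lam/2 * |w|^2 + mean hinge loss; y holds labels +1 / -1.

    A constant 1 is appended to every input so the last weight acts as a bias.
    """
    data = [(list(x) + [1.0], label) for x, label in zip(X, y)]
    w = [0.0] * len(data[0][0])
    n = len(data)
    for t in range(1, epochs + 1):
        eta = 1.0 / (lam * t)
        # Sum y*x over points that violate the margin condition y * (w . x) >= 1.
        grad = [0.0] * len(w)
        for x, label in data:
            if label * sum(wi * xi for wi, xi in zip(w, x)) < 1:
                grad = [g + label * xi for g, xi in zip(grad, x)]
        # Shrink w (regularization) and step toward the violating points.
        w = [(1 - eta * lam) * wi + eta * g / n for wi, g in zip(w, grad)]
    return w

def svm_predict(w, x):
    score = sum(wi * xi for wi, xi in zip(w, list(x) + [1.0]))
    return 1 if score >= 0 else -1

# Hypothetical, linearly separable toy data.
X = [(2, 2), (3, 3), (2, 3), (3, 2), (-2, -2), (-3, -3), (-2, -3), (-3, -2)]
y = [1, 1, 1, 1, -1, -1, -1, -1]
w = train_linear_svm(X, y)
```

Only the points that fall inside the margin contribute to each update, which is why the final boundary depends on a few "support vectors" rather than on every point.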
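For the Clustering Model entry, a minimal sketch of k-means (Lloyd's algorithm), one common clustering method. The customer data, the choice of k = 2, and the naive initialization with the first k points are illustrative assumptions; libraries typically use smarter initialization and several restarts.

```python
import math

def kmeans(points, k, iterations=20):
    """Alternate between assigning points to the nearest centroid and recomputing centroids."""
    centroids = [list(p) for p in points[:k]]     # naive init: first k points
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: math.dist(p, centroids[c]))
            clusters[nearest].append(p)
        for c, members in enumerate(clusters):
            if members:   # keep the old centroid if a cluster empties out
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return centroids, clusters

# Hypothetical customers: (annual spend in $1000s, store visits per month).
points = [(1.0, 2.0), (8.0, 9.0), (1.5, 1.8), (8.5, 8.0), (1.2, 2.2), (9.0, 8.5)]
centroids, clusters = kmeans(points, k=2)
```

The two clusters separate low-spend, infrequent customers from high-spend, frequent ones; a point that lies far from every centroid is a candidate for the "unusual data entries" the entry mentions.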
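For the Decision Trees entry, a minimal sketch of the core step of tree building: choosing the single split that makes the resulting groups as pure as possible, measured by Gini impurity. A full tree would apply this recursively to each side; the loan data and labels are hypothetical.

```python
def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))

def best_split(rows, labels):
    """Return (feature index, threshold, weighted impurity) of the best split."""
    best = (None, None, gini(labels))   # start from "no split"
    n = len(rows)
    for f in range(len(rows[0])):
        for threshold in sorted({r[f] for r in rows}):
            left = [lab for r, lab in zip(rows, labels) if r[f] <= threshold]
            right = [lab for r, lab in zip(rows, labels) if r[f] > threshold]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if score < best[2]:
                best = (f, threshold, score)
    return best

# Hypothetical loan applications: (income in $1000s, existing debt in $1000s).
rows = [(30, 20), (45, 5), (60, 30), (80, 10), (25, 2), (90, 40)]
labels = ["no", "yes", "yes", "yes", "no", "yes"]
split = best_split(rows, labels)
```

Here the best split is "income <= 30", which separates the two classes perfectly (impurity 0).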
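For the Neural Networks entry, a minimal sketch of a forward pass through a network with 2 inputs, one hidden layer of 3 units, and 1 output. The weights are hand-picked, hypothetical values; in a real model they would be learned from data, typically with backpropagation. The ReLU and sigmoid activations are common choices, not ones specified in the original text.

```python
import math

def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(W x + b) for each unit."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical weights: 2 inputs -> 3 hidden units -> 1 output.
W_hidden = [[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]]
b_hidden = [0.0, 0.1, 0.2]
W_out = [[1.0, -0.5, 0.7]]
b_out = [-0.1]

def forward(x):
    hidden = dense(x, W_hidden, b_hidden, relu)        # intermediate features
    return dense(hidden, W_out, b_out, sigmoid)[0]     # probability-like output

score = forward([1.0, 2.0])
```

For the input `[1.0, 2.0]` the hidden layer produces roughly `[0.1, 2.0, 0.0]` and the output is sigmoid(-1), about 0.27.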
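For the Gradient Boosted Model entry, a minimal sketch of gradient boosting for regression with squared-error loss, where each "tree" is a one-split stump fitted to the current residuals. Using stumps instead of deeper trees, and the values of `n_trees` and `learning_rate`, are simplifying assumptions; ranking variants use different loss functions.

```python
def fit_stump(xs, residuals):
    """Find the threshold on x whose two group means best fit the residuals."""
    best = None
    for t in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        lv, rv = sum(left) / len(left), sum(right) / len(right)
        err = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
        if best is None or err < best[0]:
            best = (err, t, lv, rv)
    _, t, lv, rv = best
    return lambda x: lv if x <= t else rv

def gradient_boost(xs, ys, n_trees=50, learning_rate=0.1):
    """Each new stump fits the residuals (the negative gradient of squared loss)."""
    base = sum(ys) / len(ys)
    trees = []

    def predict(x):
        return base + learning_rate * sum(tree(x) for tree in trees)

    for _ in range(n_trees):
        residuals = [y - predict(x) for x, y in zip(xs, ys)]
        trees.append(fit_stump(xs, residuals))
    return predict

# Hypothetical data with a jump between x = 3 and x = 4.
xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.2, 0.9, 5.0, 5.3, 4.8]
model = gradient_boost(xs, ys)
```

The small learning rate means each stump corrects only part of the remaining error, which tends to generalize better than letting one tree fit everything at once.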
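For the Random Forest entry, a minimal sketch of the idea: many trees, each trained on a bootstrap sample and a randomly chosen feature, combined by majority vote. To keep it short, each "tree" is a single-split stump; real random forests grow deeper trees and sample several candidate features per split. The churn data and the seed are hypothetical.

```python
import random
from collections import Counter

def majority(labels):
    return Counter(labels).most_common(1)[0][0]

def fit_random_stump(rows, labels, rng):
    """A depth-1 tree on one randomly chosen feature."""
    f = rng.randrange(len(rows[0]))
    values = sorted({r[f] for r in rows})
    best = None
    for a, b in zip(values, values[1:]):
        t = (a + b) / 2                       # midpoint between neighbouring values
        left = [lab for r, lab in zip(rows, labels) if r[f] <= t]
        right = [lab for r, lab in zip(rows, labels) if r[f] > t]
        lp, rp = majority(left), majority(right)
        errors = sum(l != lp for l in left) + sum(l != rp for l in right)
        if best is None or errors < best[0]:
            best = (errors, t, lp, rp)
    if best is None:                          # only one distinct value: constant leaf
        label = majority(labels)
        return lambda x: label
    _, t, lp, rp = best
    return lambda x: lp if x[f] <= t else rp

def random_forest(rows, labels, n_trees=25, seed=0):
    rng = random.Random(seed)
    trees = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(rows)) for _ in rows]   # bootstrap: sample with replacement
        trees.append(fit_random_stump([rows[i] for i in idx], [labels[i] for i in idx], rng))
    return lambda x: majority([tree(x) for tree in trees])

# Hypothetical customers: (complaints last month, months inactive); 1 = churned.
X = [(0, 1), (1, 0), (1, 2), (0, 0), (5, 6), (6, 5), (7, 7), (6, 8)]
y = [0, 0, 0, 0, 1, 1, 1, 1]
forest = random_forest(X, y)
```

Because each tree sees a different sample, an individual tree's mistakes tend to be outvoted by the others.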
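For the ARIMA entry, a minimal sketch of an ARIMA(1,1,0) model: difference the series once (the "integrated" part), fit a first-order autoregression to the differences by least squares, then forecast and undo the differencing. It omits the moving-average part, and fitting by least squares rather than maximum likelihood is a simplification; statistical libraries such as statsmodels provide full ARIMA implementations. The demand series is synthetic, built so that each difference equals 1 + 0.5 times the previous one.

```python
def fit_arima_110(series):
    """Difference once, then fit d_t = c + phi * d_{t-1} by least squares."""
    diffs = [b - a for a, b in zip(series, series[1:])]
    x, y = diffs[:-1], diffs[1:]                  # lagged pairs for the AR(1) part
    mx, my = sum(x) / len(x), sum(y) / len(y)
    phi = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    c = my - phi * mx
    return c, phi

def forecast_arima_110(series, c, phi, steps):
    """Iterate the fitted model forward and add each predicted change to the last level."""
    level, diff = series[-1], series[-1] - series[-2]
    out = []
    for _ in range(steps):
        diff = c + phi * diff
        level += diff
        out.append(level)
    return out

# Synthetic monthly demand.
demand = [100, 104, 107, 109.5, 111.75, 113.875, 115.9375]
c, phi = fit_arima_110(demand)
forecast = forecast_arima_110(demand, c, phi, steps=2)
```

The fit recovers c = 1 and phi = 0.5, and the next two forecasts are about 117.97 and 119.98.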
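For the Time Series Model entry, a minimal sketch of simple exponential smoothing, one of the most basic time series forecasting methods: each new observation updates a running "level", and the final level is the forecast for the next period. The ticket counts and the smoothing factor `alpha` are hypothetical; a larger `alpha` reacts faster to spikes.

```python
def exponential_smoothing(series, alpha=0.5):
    """level = alpha * observation + (1 - alpha) * previous level.

    Returns the one-step-ahead forecast, which for this method is the final level.
    """
    level = series[0]
    for value in series[1:]:
        level = alpha * value + (1 - alpha) * level
    return level

# Hypothetical daily customer support tickets, with a spike starting on day 4.
tickets = [120, 130, 125, 160, 170]
next_day = exponential_smoothing(tickets)
```

The forecast for the next day is 156.25: it has moved toward the recent spike but still reflects the quieter earlier days.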
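For the Classification Model entry, a minimal sketch of logistic regression, a common classification model, trained by batch gradient descent on the log-loss. It answers a yes/no question ("will this customer churn?") by thresholding a predicted probability at 0.5. The data (support tickets filed versus churn), the learning rate, the number of epochs, and the threshold are illustrative assumptions.

```python
import math

def train_logistic_regression(X, y, lr=0.3, epochs=3000):
    """Gradient descent on the average log-loss; y holds 0/1 labels."""
    w = [0.0] * (len(X[0]) + 1)          # last weight is the intercept
    n = len(X)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, label in zip(X, y):
            xb = list(x) + [1.0]
            p = 1.0 / (1.0 + math.exp(-sum(wi * xi for wi, xi in zip(w, xb))))
            grad = [g + (p - label) * xi for g, xi in zip(grad, xb)]
        w = [wi - lr * g / n for wi, g in zip(w, grad)]
    return w

def predict_proba(w, x):
    z = sum(wi * xi for wi, xi in zip(w, list(x) + [1.0]))
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical customers: support tickets filed last month -> churned (1) or not (0).
X = [(0,), (1,), (2,), (3,), (4,), (5,), (6,), (7,)]
y = [0, 0, 0, 1, 0, 1, 1, 1]
w = train_logistic_regression(X, y)
will_churn = predict_proba(w, (6,)) >= 0.5
```

The model outputs a probability rather than a bare label, so the 0.5 cut-off can be moved when false alarms and missed churners have different costs.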
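For the Outliers Model entry, a minimal sketch that flags unusual transaction amounts with the modified z-score, which is based on the median and the median absolute deviation (MAD). The 3.5 cut-off is a commonly used rule of thumb; the amounts are hypothetical, and a real fraud model would also use the other parameters the entry lists (location, time, purchase history).

```python
import statistics

def mad_outliers(values, threshold=3.5):
    """Flag values whose modified z-score, 0.6745 * |v - median| / MAD, exceeds the threshold.

    The median and MAD are used instead of the mean and standard deviation
    because they are not dragged around by the outliers themselves.
    """
    med = statistics.median(values)
    mad = statistics.median(abs(v - med) for v in values)
    if mad == 0:
        return []
    return [v for v in values if 0.6745 * abs(v - med) / mad > threshold]

# Hypothetical card transactions for one customer.
amounts = [42.0, 38.5, 40.0, 45.2, 39.9, 41.3, 2500.0, 37.8]
suspicious = mad_outliers(amounts)
```

Only the 2500.0 transaction is flagged; the 45.2 purchase, although the largest of the ordinary ones, scores well below the cut-off.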

How does Machine Learning play a role in Predictive Modeling?

Predictive modeling is a data analytics technique that often relies on machine learning, a branch of artificial intelligence, to identify patterns in data and make predictions about future events. Machine learning techniques can improve a predictive model's accuracy, for example by selecting and adjusting the predictor variables until the model produces the best possible results. The model can then be validated, tested, and used in real-world scenarios to estimate how decisions will affect existing situations. In Precision Medicine, for instance, predictive modeling is used to tailor treatment plans to specific patients.

How can predictive analytics be used to improve decision-making processes?

Predictive analytics uses data and predictive models to estimate future events. It can improve decision-making by providing insight into how customers or users are likely to behave. To do this, data must be collected and organized in a way that makes prediction possible, and predictive analytics professionals should put processes in place to keep improving the models over time. The models should then be integrated into business practices, where they help streamline processes, optimize goals, and measure performance.

What are the different types of predictive modeling?

Predictive modeling is an analytical technique that predicts an event or outcome using mathematical and computational methods. It can be used for a variety of purposes, such as predicting airline traffic volume, or predicting fuel efficiency with a linear regression model of engine speed versus load. There are two main types of predictive models: parametric and non-parametric.

- Parametric models assume a fixed mathematical form (for example, a straight line) with a fixed number of parameters, and estimate those parameters from data about past outcomes in order to predict future outcomes.

- Non-parametric models do not assume a fixed form in advance; nearest-neighbor methods, decision trees, and other pattern-recognition and machine learning algorithms let the structure of the model grow with the data. They still learn from past data, and they usually need more of it than parametric models.

Predictive models are typically validated by comparing their predictions with data that was not used in the training process before they are put into production.
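A minimal sketch contrasting the two model families and the hold-out validation described above. The parametric model is a straight line (two parameters); the non-parametric model is k-nearest-neighbors regression, which keeps the training data and averages the closest points. The data are synthetic (y = x squared), and the held-out points x = 3 and x = 6 are an illustrative choice; which family wins depends on the data, and here the curved shape favors the non-parametric model by construction.

```python
def fit_line(train):
    """Parametric: assumes y = a + b*x, so the whole model is two numbers."""
    n = len(train)
    mx = sum(x for x, _ in train) / n
    my = sum(y for _, y in train) / n
    b = sum((x - mx) * (y - my) for x, y in train) / sum((x - mx) ** 2 for x, _ in train)
    a = my - b * mx
    return lambda x: a + b * x

def fit_knn(train, k=2):
    """Non-parametric: keeps the training data and averages the k nearest neighbours."""
    def predict(x):
        nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
        return sum(y for _, y in nearest) / k
    return predict

def mean_abs_error(model, test):
    return sum(abs(model(x) - y) for x, y in test) / len(test)

# Synthetic curved data; x = 3 and x = 6 are held out and never used for training.
data = [(x, x * x) for x in range(10)]
test = [p for p in data if p[0] in (3, 6)]
train = [p for p in data if p[0] not in (3, 6)]

line_error = mean_abs_error(fit_line(train), test)
knn_error = mean_abs_error(fit_knn(train), test)
```

On the held-out points the straight line is off by 7.5 on average, while k-nearest-neighbors is off by 1.0, which the training data alone would not have revealed.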

What is the difference between predictive modeling and machine learning?

- Predictive analytics encompasses a variety of statistical techniques (including machine learning, predictive modeling, and data mining) to estimate future outcomes. Machine learning is a subfield of computer science that allows computers to learn without being explicitly programmed.

- Predictive modeling uses machine learning or deep learning to make predictions on data.

- Predictive modeling is used to find the "best fit" outcome, while machine learning is used to learn from data and improve predictions over time.

- A predictive model may be as simple as a regular linear regression, while machine learning approaches often use more flexible algorithms such as a gradient-boosted model (GBM).

- Predictive modeling is a more traditional approach to data analysis, while machine learning is a more recent approach that builds models by training on data sets.

- Predictive modeling typically relies on existing models and assumptions, while machine learning allows for models to be developed from scratch.

- Predictive modeling is slower and more complex than machine learning, but it can be more accurate and dependable.

What are the benefits of predictive modeling?

Predictive modeling can provide businesses with a variety of benefits, including a competitive advantage, a better understanding of consumer demand, and the ability to assess and mitigate financial risks. Predictive models can also be used to improve existing products, boost revenue, and reduce the time and expense of predicting outcomes. Additionally, predictive models enable businesses to make better decisions based on large datasets whose insights would otherwise not be available. By adapting to new problems while still respecting privacy and security concerns, predictive modeling can help companies stay ahead of the competition.

What are the limitations of predictive modeling?

A key limitation of predictive modeling is that a model may not generalize to different cases or settings without proper validation, and some such limitations cannot always be overcome. Transfer learning, which reuses a model trained on one task as the starting point for another, is being developed to address this limitation.

What are some common use cases for predictive modeling?

In healthcare, predictive modeling can help clinicians make better decisions about patient care and identify activities that will improve patient satisfaction, resource utilization, and budget control. In other industries, it can be used to plan inventory, assess promotional campaigns, boost revenue, and create better customer experiences. Predictive models are also useful for detecting and protecting against risks and for reducing customer churn. Finally, predictive modeling can be used to predict maintenance risks and reduce costs in many industries.

What are some common challenges involved in predictive modeling?

Common challenges in predictive modeling include understanding the data to be used, securing sufficient resources, and determining whether the predicted results have clinical or business value. Stakeholders also need to approve the initiative before predictive modeling can be implemented effectively.

What are some common best practices for predictive modeling?

One of the best practices for predictive modeling is properly understanding the scope and requirements of the business problem. It is also essential to collect and preprocess data, clean it, perform exploratory data analysis (EDA), build a predictive model, validate it before deployment, and track its performance afterward. Furthermore, it is important to consider which algorithm will be most suitable for your needs by evaluating the strengths of each model available.

See Also

Cluster Analysis

Decision Tree

Forecasting

Neural Network