IT Financial Management (ITFM)

Definition of IT Financial Management (ITFM)[1]

What is IT Financial Management?

IT Financial Management (ITFM) is the oversight of expenditures required to deliver IT products and services. The discipline is based on traditional enterprise financial and accounting best practices, such as mandating documentation of expenses and requiring regular audits and reports. However, IT financial management methods and practices are adapted to address the particular requirements of managing IT assets and resources.

Gartner reports that most organizations prioritize the following ITFM capabilities in their practices:[2]

- Budgeting

- Forecasting

- Variance analysis

- Cost optimization

IT Financial Management (ITFM) - Goal[3]

The goal of Financial Management for IT Services (ITFM) is to optimize the cost of IT Services while taking into account quality and risk factors. The analysis balances cost against quality and risk to create intelligent, metric-based cost optimization strategies. Balancing is required since cost-cutting may not be the best strategy to deliver optimum consumer outputs. ITFM is a discipline based on standard financial and accounting principles but addresses specific principles that are applicable to IT services, such as fixed asset management, capital management, audit, and depreciation.

- For an internal IT organization, the goal is described as: "To provide cost-effective stewardship of the IT assets and resources used in providing IT services"

- For an outsourced IT organization or an IT organization that is run as if it were a separate entity (i.e., with full charging) the goal may be described as: "To be able to account fully for the spend on IT services and to be able to attribute these costs to the services delivered to the organization's customers and to assist management by providing detailed and costed business cases for proposed changes to IT services"

IT financial management helps an IT organization determine the financial value of the IT services it provides to its customers. ITIL refers to this activity as service valuation, whereby each service is valued based on its cost and on the value added by both the IT service provider and the customer's own assets. Service-based IT financial management aligns its basic activities (accounting, charging, and budgeting) with other customer-facing ITIL processes. For example, through IT accounting the IT organization continuously tracks expenses against the services outlined in the service catalog. IT budgeting generally consists of an annual or multiyear effort that measures existing financial commitments and estimates future expenses related to those services. Because most IT organizations need to recover costs or generate a profit, they also implement a charging process in which customers are billed for the IT services they consume. The outputs of accounting, charging, and budgeting help improve service by directing investment toward high-value services through rigorous service investment analysis, business cases, and portfolio management. These outputs can also lower costs: monitoring the expenses of a given service shows whether it could be provided more effectively, an activity ITIL calls service provisioning optimization.[4]
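As a rough illustration of how accounting feeds charging, the sketch below derives a cost-recovery unit rate for each catalog service (tracked service cost divided by total units consumed) and bills each customer for what it consumed. The service names, costs, and consumption records are hypothetical, and real charging models often add markups, fixed fees, or tiered pricing.

```python
from collections import defaultdict

# Hypothetical accounting output: tracked cost per catalog service for one period.
service_costs = {"email": 12_000.0, "storage": 30_000.0}

# Hypothetical consumption records: (customer, service, units consumed).
consumption = [
    ("finance", "email", 100),
    ("sales", "email", 200),
    ("finance", "storage", 500),
    ("sales", "storage", 1_000),
]


def unit_rates(costs, records):
    """Full cost recovery: rate = service cost / total units consumed."""
    units = defaultdict(float)
    for _, service, qty in records:
        units[service] += qty
    return {svc: costs[svc] / units[svc] for svc in costs if units[svc] > 0}


def bill(costs, records):
    """Charge each customer rate * units for every service it consumed."""
    rates = unit_rates(costs, records)
    invoices = defaultdict(float)
    for customer, service, qty in records:
        invoices[customer] += rates[service] * qty
    return dict(invoices)


invoices = bill(service_costs, consumption)
print(invoices)
```

Because each rate is set so that charges add back up to the tracked cost, the invoices in this example recover exactly the 42,000 recorded against the two services; an organization aiming to generate a profit would apply a markup to the rate instead.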

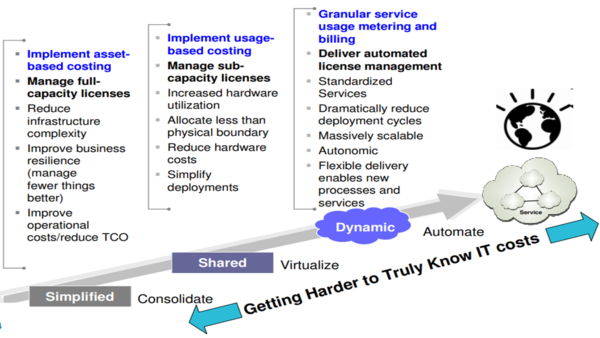

IT Financial Management (ITFM) Roadmap[5]

Below is a representation of how to plan your Financial Management Transformation Roadmap.

IT Financial Management (ITFM) Tools[6]

IT financial management (ITFM) tools are gaining traction among IT leaders as many organizations zero in on identifying and controlling IT costs and on providing better transparency to the broader business. But for every ITFM enthusiast, there are two more IT leaders who don’t fully understand what an ITFM tool is or what it should do for them. ITFM tools enable technology leaders to manage their businesses with the same process-driven accuracy, financial visibility, and discipline as their corporate peers. In other words, ITFM tools provide visibility into precisely what IT is spending. Think of them as financial accounting for IT: a powerful tool for understanding how an organization’s resources are being spent.

“One of the main reasons to adopt IT financial management tools is to gain the cost insight necessary to develop a cost allocation/chargeback strategy that is transparent, and based on the actual costs of running the business,” explains Apptio partner 6fusion. “Instead of charging a department based on their size or the number of computers, IT organizations can show actual spend numbers for each department. This allows the IT department to act more like an internal service provider, just as you would see pricing from an external service provider. The pricing of services is presented in a rational way that is also defensible.”

To arrive at this information, data is pulled directly from accounting general ledgers for software, hardware, and every IT soft cost in between. Data models are then applied to combine those costs into stacks and cost centers for management purposes, and key reports are built and made available to all stakeholders. The result is a new level of visibility that enables better allocation decisions, accelerates investment initiatives, and drives greater efficiency.
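The modeling step that combines general-ledger costs into stacks and cost centers can be sketched as a rule-driven roll-up. This is a minimal sketch, assuming a hypothetical rule table that maps GL account codes to IT cost towers (standing in for the "stacks"); the account codes, tower names, and amounts are illustrative, and commercial ITFM tools use far richer models.

```python
from collections import defaultdict

# Hypothetical GL extract: (account code, cost center, amount).
gl_lines = [
    ("6100", "CC-100", 5_000.0),  # software licenses
    ("6200", "CC-100", 2_500.0),  # hardware maintenance
    ("6100", "CC-200", 1_500.0),
    ("6300", "CC-200", 4_000.0),  # contractor labor
    ("7000", "CC-200", 800.0),    # account with no mapping rule yet
]

# Hypothetical mapping rules: GL account code -> cost tower.
ACCOUNT_TO_TOWER = {"6100": "Software", "6200": "Hardware", "6300": "Labor"}


def roll_up(lines, rules):
    """Sum GL amounts by (cost center, tower); unmapped accounts go to 'Unallocated'."""
    totals = defaultdict(float)
    for account, cost_center, amount in lines:
        tower = rules.get(account, "Unallocated")
        totals[(cost_center, tower)] += amount
    return dict(totals)


report = roll_up(gl_lines, ACCOUNT_TO_TOWER)
for (cc, tower), amount in sorted(report.items()):
    print(cc, tower, amount)
```

Keeping an explicit "Unallocated" bucket, rather than silently dropping unmapped accounts, keeps the management report reconcilable to the GL total.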
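To make the contrast in the 6fusion quote concrete (charging by department size versus by actual spend), the following sketch allocates the same monthly cost pool two ways: by headcount and by measured consumption. The department names, headcounts, compute hours, and cost figure are all hypothetical.

```python
def allocate(total_cost, drivers):
    """Split total_cost across departments in proportion to a driver value."""
    total_driver = sum(drivers.values())
    return {dept: total_cost * qty / total_driver for dept, qty in drivers.items()}


monthly_cost = 100_000.0

# Hypothetical drivers for the same two departments.
headcount = {"marketing": 60, "engineering": 40}
compute_hours = {"marketing": 1_000, "engineering": 9_000}

by_size = allocate(monthly_cost, headcount)       # size-based allocation
by_usage = allocate(monthly_cost, compute_hours)  # consumption-based chargeback

for dept in headcount:
    print(f"{dept:12s} size-based: {by_size[dept]:>10,.0f}  usage-based: {by_usage[dept]:>10,.0f}")
```

Under the size-based split, marketing pays 60,000 while consuming a tenth of the compute; the usage-based split charges it 10,000 instead, which is the kind of defensible, cost-based pricing the quote describes.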

An increasing number of organizations are looking to initiate or improve their IT financial management efforts by purchasing an ITFM tool, which is driving growth in the market. But before you go shopping for an ITFM tool, know why you need one; that will give you a much better basis for selecting the right tool. According to Gartner, there are five key questions to ask, and answer, before you scope out vendors:

- What is the mandate?

- What issue(s) need to be resolved and why?

- Who is the executive sponsor?

- What is driving their interest in better IT financials?

- What is the appetite for ITFM (willingness to commit resources)? Who has a vested interest in better IT financial management?

The answers to these questions will impact the "what" and determine the "how" of ITFM and, in turn, answer the question, "Where do I start?"

ITFM Vs. TBM[7]

According to Forrester, Technology Business Management (TBM) is an expert stage of maturity in which an organization has grown from understanding its IT costs, to managing those costs, and, at the highest levels of maturity, to adhering to processes and models that manage and shape the demand for business technologies. At this high level of maturity, which Forrester refers to as TBM, an organization has created a culture of cost accountability. IT organizations influence user behavior and the demand for technologies by charging back the costs incurred to the businesses that consumed the technology. Activity-based costing and fully integrated process models are used to provide an advanced level of chargeback. One step down the maturity curve, Forrester recognizes IT financial management (ITFM) as the advanced practice of managing IT costs. IT organizations are able to provide transparency around the costs incurred back to the business through basic integrations with financial and operational systems, as well as the company’s ERP solution. Service-based costing and service models are the basis for this maturity level as defined by Forrester. “Better visibility also enables managers to control demand, which has resulted in reduced overall spend.”

Gartner looks at all these stages of maturity as part of the IT financial management umbrella and doesn’t give the individual maturity levels particular names. Gartner attributes similar aspects and characteristics to the practice of ITFM as Forrester does and, through its research, has found that most of its clients prioritize the following ITFM capabilities in their practices: 1) Budgeting, 2) Forecasting, 3) Variance analysis, and 4) Cost optimization.
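Of the four capabilities, variance analysis is the most mechanical. A minimal sketch, assuming hypothetical budget and actual figures per cost category and a hypothetical 10% review threshold: variance is actual minus budget (positive means overspend), and the percentage is taken relative to budget.

```python
# Hypothetical annual budget and year-end actuals by category.
budget = {"Software": 200_000.0, "Hardware": 150_000.0, "Labor": 500_000.0}
actual = {"Software": 230_000.0, "Hardware": 140_000.0, "Labor": 505_000.0}

THRESHOLD = 0.10  # hypothetical: flag variances larger than 10% of budget


def variance_report(budget, actual, threshold):
    """Return (category, variance, variance %, flagged) rows; positive = overspend."""
    rows = []
    for category, planned in budget.items():
        variance = actual.get(category, 0.0) - planned
        # Guard against a zero budget line: any spend against it is unbounded.
        pct = variance / planned if planned else (float("inf") if variance else 0.0)
        rows.append((category, variance, pct, abs(pct) > threshold))
    return rows


for category, variance, pct, flagged in variance_report(budget, actual, THRESHOLD):
    marker = "  <-- review" if flagged else ""
    print(f"{category:10s} {variance:>+10,.0f} {pct:>+7.1%}{marker}")
```

Here only Software (15% over budget) crosses the threshold; the same function can compare a forecast against the budget by passing the forecast in place of actuals.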

Despite the differences in nomenclature, it really all comes down to what the organization is trying to achieve. IT organizations are trying to solve a variety of challenges as they embark on their ITFM journey, regardless of what somebody else calls it. The challenge may be the initial development of a service catalog, more informed budgeting and forecasting based on better insight into true costs and demand, line-item chargeback, optimizing IT investments in support of an entire digital transformation, or anything in between.

It doesn’t really matter what you call it as long as you are getting the service and the solution you need to meet your needs.

Flawed ITFM practices[8]

Over the years, attempts to impose traditional financial management practices onto the IT organisation have impeded true progress towards IT maturity. Here are a few of the most common poor practices.

- Different accounting for different purposes: IT management often finds itself in a no-win situation. All too often, business leaders demand that IT management systems be aligned to their specific accounting needs, leaving IT management without effective execution information. Conversely, using an accounting system specifically designed for IT often results in a lack of management-level information for effective decision making.

- No business accountability for IT investment decisions: For many companies, IT is the business, an inseparable part of core operations. Yet business responsibility for IT outcomes is often lacking. Only in the past decade has the business started to take a real interest in ITFM, deploying teams of finance experts or creating home-grown billing systems. But this does not address the real problem: the lack of business accountability for IT investment decisions.

- Faulty governance of price futures: Cost is made up of quantity and price. While IT managers are able to forecast and measure actual usage of resources, they have little or no influence over price variance caused by inflation, vendor negotiations or changes in currency exchange rates.

The business is mostly or wholly responsible for price variance that occurs after the date of plan approval. Yet, it is not uncommon to hear of IT leaders being criticised or penalised for all of these factors outside of their control. These price risks should be wholly or mostly governed centrally at a corporate level, where functional specialists can govern the entire price risk portfolio.

- Perpetual crisis management: IT management is often under pressure to reduce costs through unrealistic conforming budgets needed for yearly planning and business change cycles. This budget practice encourages a short-term approach. Cuts in maintenance and support are the easy target because there is often no clear link to the eventual downstream impact. With no traceability for decisions at that level, by the time the problems occur, the decision to make the cuts has been forgotten. The cost reduction leader is promoted internally or externally before the impact of the praised cuts percolates up to the business level as lower service levels, and the successor inherits a new crisis.
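The "Faulty governance of price futures" item rests on splitting a cost variance into a quantity part, which IT can forecast and manage, and a price part driven by inflation, vendor negotiations, or exchange rates. A minimal sketch using the common standard-costing convention (quantity variance valued at the planned price, price variance at the actual quantity); the license counts and prices are hypothetical.

```python
def decompose_variance(plan_qty, plan_price, actual_qty, actual_price):
    """Split (actual cost - planned cost) into quantity and price effects.

    quantity variance = (actual_qty - plan_qty) * plan_price    -> usage IT controls
    price variance    = (actual_price - plan_price) * actual_qty -> price risk
    The two components always sum to the total variance.
    """
    quantity_var = (actual_qty - plan_qty) * plan_price
    price_var = (actual_price - plan_price) * actual_qty
    total_var = actual_qty * actual_price - plan_qty * plan_price
    return quantity_var, price_var, total_var


# Hypothetical: 1,000 licenses planned at 100.00 each; 1,050 bought at 110.00
# after an exchange-rate move.
q_var, p_var, total = decompose_variance(1_000, 100.0, 1_050, 110.0)
print(f"quantity: {q_var:,.2f}  price: {p_var:,.2f}  total: {total:,.2f}")
```

In this example IT would answer for the 5,000 of extra volume, while the 10,500 price effect is the kind of variance the text argues should be governed centrally at the corporate level.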

IT Financial Management (ITFM) Best Practices[9]

- Understand your source systems: Your source systems form the backbone of your ITFM practice. It’s critical to understand what data is available, and how to access it, in order to build and maintain proper interfaces between the source systems and your ITFM tool. Once you know what data is available from your source systems, you then just have to figure out how to “connect the dots” to the end goals of your ITFM program. Spend the time to understand your source systems: the better you understand the data found in them and its context, the better you’ll be able to meet your goals.

- Show your data owners some love: Don’t underestimate the value of building and maintaining good working relationships and open lines of communication with the individuals who own each source system. You need to stay abreast of any changes in the data at the source so you can make the corresponding changes in your ITFM tool. You will also want to know whom to contact to change the structure or content of a file exported from your source systems, which often happens as your ITFM program matures. Often, the only interaction data owners have with ITFM is at the beginning, or when problems arise. Spend a little extra time developing those relationships; it will make life much easier down the road.

- Master your data mapping: Your various source systems contain a wide variety of data, which are translated to your ITFM structures via data mapping. For example, your GL might have fields called “Project ID”, “Investment ID”, and “Asset ID” but in your ITFM tool, these data elements all map to one field called “Internal ID”. Really understanding how data is mapped across systems will be helpful when it comes to building more maturity around your ITFM program. Be sure to document your data map – how source systems match up with your ITFM tool. This will make future enhancements much easier and is critical for bringing new team members up to speed.

- Optimize your timespan and refresh cadence for each data source: Different data sources have different refresh rates based on the type of data and system – optimizing the timespan of data for each data interface is critical for building and maintaining your import definitions and processes. Let’s say you have a data interface for time-tracking data. There are several options for the timespan of this data, which affect how you maintain this data (refresh cadence) in your ITFM tool: the timespan could be year-to-date, current month, current week, or it could be just new records since the last interface file was sent. Getting this right when you’re creating the interface is crucial. If time tracking data is imported to your Bill of IT weekly, for example, and only includes hours worked for a given week, then your import definition must be built to append the records to the current month’s time tracking table. By the end of the month, the table will have the entire month of labor hours which allows you to calculate and chargeback your labor costs. If the time tracking interface is imported at the end of each month and includes all the hours worked for the given month, then your import definition should be built to truncate the table and replace it with the contents of the new file. Getting these wrong will lead to lots of confusion and bad data. When you are adding a new source to your system, be sure to understand how it works and how you’ll use the data and choose – and document! – an optimal refresh cadence and timespan for importing new data.

- Cleanse and maintain your data on a regular schedule: The reality is that data trends towards messiness. When your system is based on multiple data sources that you don’t control (as all systems are), eventually some bad data will creep in. For example, you may find multiple entries for a single vendor, like “International Business Machines,” “IBM,” “IBM, Inc.”, and “International Business Machines, Inc.” It is important to normalize your data by picking ONE name for your vendor and regularly reviewing the data in the tool to ensure you don’t have these types of duplicates, which lead to inefficient and ineffective reporting to support your analysis. Many tools allow you to build rules to look for these situations and systematically assign the desired value or, at a minimum, highlight a potential duplicate in a control report to trigger an Admin to manually resolve the issue. Regardless of rules you build, make a practice of periodically reviewing key data tables, like your vendor, application, consumer, and services listings, to catch problems before they snowball.

- Build data validations appropriate to the data’s risk level: Robust data validation can be time consuming and is often skipped. This may work for some data sources but not for others; knowing when to build robust validation will help you maintain the integrity of your system without incurring unneeded costs or delays. Develop a risk assessment based on two factors: the data source’s level of trust, and the amount of transformation required in the data interface (from low-control to high-control interfaces). Data that is imported with no transformation from a highly trusted source (data from a Chart of Accounts that becomes a lookup table, for example) typically requires no validation. Data that is either imported from a low-trust source or requires some transformation needs some validation. At the far end, “high control” data interfaces involve complex import rules related to timespan, cadence, and data transformation, and often require robust validation against other lookup tables. Time tracking data is an example of such high-risk data. Plan to configure processes that include a preliminary staging table with rules for archiving, validation, transformation, version control, and balance verification before the data ultimately progresses to the final target table. A tool might validate such data in several ways: validating cost centers against a Cost Center lookup table, employee IDs against an employee table, project IDs against the Project lookup table, and so on. Consider also requesting a summary of hours for the same timespan as the imported file and checking that the sum of hours in the time tracking file matches this summary cross-check file. If any validation errors exist, create a record and report it on your exception/control reports.

- Finally, understand the context around your data: Clean data isn’t the end; it’s still important to guard against poor analysis by maintaining a solid understanding of your data’s context. When data is not well understood it can easily lead to unhelpful – or worse, misleading – analysis. For example, one team developed a server rate using budget data and calculated a cost of $1,000 per server operating system. However, when it came time to charge back server costs based on utilization, the data they were receiving was actually the number of CPUs for each server. Without realizing it, the team was charging their partners incorrectly because they used one metric to develop the rate and were not receiving the same metric for use in their Bill of IT. Making sure you understand the data you are using will ensure the results of your calculations are meaningful and helpful in driving effective decision-making. There are a lot of things to consider to ensure you maintain clean data in your ITFM tool, but these key steps will help give you the data quality you need, and developing a thorough understanding of your data yields accuracy and usability, which in turn results in credibility and better decision-making.
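The "Master your data mapping" practice recommends documenting how source fields match up with the ITFM tool. One way to keep that map both documented and executable is sketched below. It assumes a GL record normally populates only one of the three ID fields named in the example; the `lineage` output, which records which source field supplied each value, is a hypothetical addition for traceability.

```python
# Documented data map: source GL field -> target ITFM field.
# Field names follow the example in the text; the map itself is illustrative.
GL_TO_ITFM = {
    "Project ID": "Internal ID",
    "Investment ID": "Internal ID",
    "Asset ID": "Internal ID",
}


def map_record(gl_record, data_map):
    """Translate one GL record into ITFM fields.

    Returns (mapped, lineage), where lineage records which source field supplied
    each target value. Raises ValueError if two populated source fields map to
    the same target with different values.
    """
    mapped, lineage = {}, {}
    for source_field, target_field in data_map.items():
        value = gl_record.get(source_field)
        if value in (None, ""):
            continue  # field not populated on this record
        if target_field in mapped and mapped[target_field] != value:
            raise ValueError(f"conflicting values for {target_field!r}")
        mapped.setdefault(target_field, value)
        lineage.setdefault(target_field, source_field)
    return mapped, lineage


print(map_record({"Investment ID": "INV-042", "Amount": 1200}, GL_TO_ITFM))
```

Because the map is plain data, the same dictionary can be exported as the written documentation that new team members consult.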
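The two loading patterns described in the refresh-cadence practice, appending weekly incremental time-tracking files versus truncating and replacing with a full-month file, can be sketched as follows. The in-memory list stands in for the current month's time-tracking table, and the record layout (employee ID, week, hours) is hypothetical.

```python
from enum import Enum


class LoadMode(Enum):
    APPEND = "append"    # file holds only new records (e.g. one week of hours)
    REPLACE = "replace"  # file holds the full timespan (e.g. the whole month)


def load(table, new_rows, mode):
    """Apply an import file to a target table according to its refresh cadence."""
    if mode is LoadMode.APPEND:
        table.extend(new_rows)
    elif mode is LoadMode.REPLACE:
        table.clear()  # truncate, then reload the complete timespan
        table.extend(new_rows)
    return table


# Hypothetical rows: (employee ID, week, hours).
month_table = []
load(month_table, [("E1", 1, 40), ("E2", 1, 38)], LoadMode.APPEND)
load(month_table, [("E1", 2, 40), ("E2", 2, 40)], LoadMode.APPEND)
print(sum(hours for _, _, hours in month_table))  # 158

# Month-end full file: replace rather than append, or hours are double counted.
full_month = [("E1", w, 40) for w in range(1, 5)] + [("E2", w, 39) for w in range(1, 5)]
load(month_table, full_month, LoadMode.REPLACE)
print(sum(hours for _, _, hours in month_table))  # 316
```

Had the month-end file been appended instead, the table would hold 474 hours and every labor charge built on it would be overstated, which is the "confusion and bad data" the practice warns about.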
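A minimal sketch of the rule-based vendor normalization described in the "Cleanse and maintain your data" practice: known aliases map to one canonical name, and anything unrecognized is routed to a review list (the control report) rather than guessed. The alias table and the suffix-stripping regular expression are assumptions for illustration, not the rules of any particular ITFM tool.

```python
import re

# Hypothetical alias table, keyed by a simplified form of the vendor name.
CANONICAL_VENDORS = {
    "international business machines": "IBM",
    "ibm": "IBM",
}

# Trailing company suffixes to ignore when matching, e.g. ", Inc." or " Corp".
SUFFIXES = re.compile(r"[,.]?\s*\b(inc|corp|corporation|ltd|llc)\b\.?$")


def simplify(name):
    """Lower-case, trim, and drop a trailing company suffix."""
    return SUFFIXES.sub("", name.strip().lower()).strip()


def normalize_vendors(raw_names, aliases):
    """Return (raw name -> canonical name mapping, list of names needing review)."""
    mapping, review = {}, []
    for raw in raw_names:
        key = simplify(raw)
        if key in aliases:
            mapping[raw] = aliases[key]
        else:
            review.append(raw)  # goes on the control report for manual resolution
    return mapping, review


names = ["International Business Machines", "IBM", "IBM, Inc.",
         "International Business Machines, Inc.", "Acme Widgets"]
mapping, review = normalize_vendors(names, CANONICAL_VENDORS)
print(mapping)
print(review)  # ['Acme Widgets']
```

Routing unknown names to review, instead of fuzzy-matching them automatically, keeps an administrator in the loop for exactly the cases the rules cannot decide.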
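For a high-control interface such as time tracking, the staging checks listed in the data-validation practice might look like the sketch below: lookup validation for cost centers, employee IDs, and project IDs, plus a balance check of staged hours against the summary cross-check file. The lookup sets, row layout, and control total are hypothetical.

```python
# Hypothetical lookup tables.
COST_CENTERS = {"CC-100", "CC-200"}
EMPLOYEES = {"E1", "E2", "E3"}
PROJECTS = {"P-10", "P-20"}


def validate_batch(staged_rows, control_total_hours):
    """Validate staged time-tracking rows; return (valid_rows, exceptions).

    Each row is a dict with 'employee', 'cost_center', 'project', and 'hours'.
    Row-level exceptions are (row index, [messages]); a batch-level imbalance
    is reported as ("batch", [message]).
    """
    valid, exceptions = [], []
    for i, row in enumerate(staged_rows):
        errors = []
        if row["cost_center"] not in COST_CENTERS:
            errors.append("unknown cost center")
        if row["employee"] not in EMPLOYEES:
            errors.append("unknown employee ID")
        if row["project"] not in PROJECTS:
            errors.append("unknown project ID")
        if errors:
            exceptions.append((i, errors))
        else:
            valid.append(row)

    # Balance check: staged hours must match the summary cross-check file.
    staged_hours = sum(row["hours"] for row in staged_rows)
    if staged_hours != control_total_hours:
        exceptions.append(("batch", [f"hours {staged_hours} != control {control_total_hours}"]))
    return valid, exceptions


staged = [
    {"employee": "E1", "cost_center": "CC-100", "project": "P-10", "hours": 40},
    {"employee": "E9", "cost_center": "CC-100", "project": "P-10", "hours": 8},
    {"employee": "E2", "cost_center": "CC-300", "project": "P-99", "hours": 32},
]
valid, exceptions = validate_batch(staged, control_total_hours=80)
for item in exceptions:
    print(item)
```

A loader built on this would feed `exceptions` to the exception/control report and promote `valid` rows to the target table only when no batch-level imbalance was recorded.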
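The server-rate story in the final practice comes down to a unit-of-measure mismatch: the rate was built per server operating system, but the utilization feed counted CPUs. A minimal sketch, in which only the $1,000 rate comes from the example and the server and CPU counts are hypothetical, attaches a unit to both the rate and the usage so that a mismatch fails loudly instead of producing a wrong charge.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rate:
    amount: float  # cost per unit
    unit: str      # e.g. "server OS"


@dataclass(frozen=True)
class Usage:
    quantity: float
    unit: str


def charge(rate, usage):
    """Price usage at a rate, refusing to mix units of measure."""
    if rate.unit != usage.unit:
        raise ValueError(f"rate is per {rate.unit!r} but usage is in {usage.unit!r}")
    return rate.amount * usage.quantity


server_rate = Rate(1_000.0, "server OS")  # built from budget data

print(charge(server_rate, Usage(3, "server OS")))  # 3000.0

try:
    charge(server_rate, Usage(24, "CPU"))  # what the feed actually delivered
except ValueError as err:
    print("rejected:", err)
```

Without the check, three servers with eight CPUs each would have been billed as 24 × $1,000 = $24,000 instead of $3,000.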


References

- [1] TechTarget: Defining IT Financial Management (ITFM)

- [2] Upland Software: ITFM Capabilities

- [3] Wikipedia: Goal of IT Financial Management (ITFM)

- [4] InformIT: Defining Service-Based IT Financial Management Activities

- [5] IBM: Planning your Financial Management Transformation Roadmap

- [6] Apptio: IT Financial Management (ITFM) Tools

- [7] Upland: What’s the difference between IT Financial Management and Technology Business Management?

- [8] HP: Flawed ITFM practices

- [9] Nicus: IT Financial Management (ITFM) Best Practices