Process

A process is a unique combination of tools, materials, methods, and people engaged in producing a measurable output; for example, a manufacturing line for machine parts. All processes have inherent statistical variability that can be evaluated by statistical methods.[1]

A process is a systematic sequence of steps or actions that results in a deliverable. A process consumes resources (the "input") and produces a deliverable (the "output").

- Each step can be called a stage, phase, or procedure

- Changing the sequence of steps will result in a different deliverable

- Steps can execute in parallel

The two important things in a process are steps and deliverables. A step is itself a series of actions that produces a deliverable, which is then used by the next step in the process. A deliverable is "something" of value: a component part, piece of software, tool, template, etc. The boundaries of a process are therefore arbitrary; where one draws the line can be driven by factors such as time and organizational unit.
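As a rough illustration of steps, deliverables, and the effect of step order, the sketch below models each step as a function whose output feeds the next step. The names `add_two`, `triple`, and `run_process` are hypothetical and chosen only for this example.

```python
def add_two(value):
    """A step: takes the previous deliverable and adds 2."""
    return value + 2


def triple(value):
    """A step: takes the previous deliverable and multiplies it by 3."""
    return value * 3


def run_process(steps, process_input):
    """Feed the input through each step; each step's output is the next step's input."""
    deliverable = process_input
    for step in steps:
        deliverable = step(deliverable)
    return deliverable


# Same steps, same input, different order: the final deliverable differs.
print(run_process([add_two, triple], 1))  # (1 + 2) * 3 = 9
print(run_process([triple, add_two], 1))  # (1 * 3) + 2 = 5
```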

Other Definitions of Process[2]

A process may refer to any of the following:

- A process or running process refers to a set of instructions currently being processed by the computer processor. For example, in Windows, you can see each of the processes running by opening the Processes tab in Task Manager. Windows Processes are Windows Services and background programs you normally don't see running on the computer. A process may be a printer program that runs in the background and monitors the ink levels and other printer settings while the computer is running. A typical computer has dozens of processes running all of the time to help manage the operating system, its hardware, and the software running on the computer.

- Process is also the act of manipulating, altering, or viewing data.

- In general, process refers to a set of predetermined rules in place that must be followed.

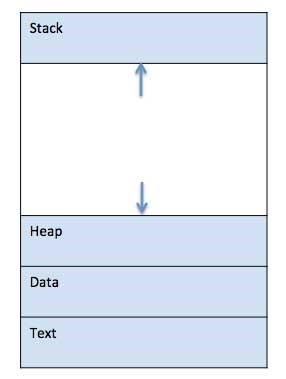

A process is basically a program in execution. The execution of a process must progress in a sequential fashion. A process is defined as an entity that represents the basic unit of work to be implemented in the system. To put it in simple terms, we write our computer programs in a text file and when we execute this program, it becomes a process that performs all the tasks mentioned in the program. When a program is loaded into the memory and becomes a process, it can be divided into four sections ─ stack, heap, text, and data. The following image shows a simplified layout of a process inside the main memory −

Process in Operating Systems[3]

In UNIX and some other operating systems, a process is started when a program is initiated (either by a user entering a shell command or by another program). Like a task, a process is a running program with which a particular set of data is associated so that the process can be kept track of. An application that is being shared by multiple users will generally have one process at some stage of execution for each user. A process can initiate a subprocess, which is called a child process (and the initiating process is sometimes referred to as its parent). A child process starts as a replica of the parent process and shares some of its resources. If the parent terminates first, the child does not necessarily disappear; on UNIX-like systems an orphaned child is typically adopted by another process such as init. Processes can exchange information or synchronize their operation through several methods of interprocess communication (IPC).
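The parent and child relationship and a simple form of IPC can be sketched with Python's standard `subprocess` module. This is a minimal sketch, not the only way to do it: the parent starts a child interpreter, sends it text over a pipe connected to the child's standard input, and reads the reply from a second pipe. `CHILD_CODE` and `ask_child` are hypothetical names used only here.

```python
import subprocess
import sys

# Program run by the child process: upper-case whatever arrives on stdin.
CHILD_CODE = "import sys; sys.stdout.write(sys.stdin.read().upper())"


def ask_child(message):
    """Start a child process, send it a message over a pipe, and return its reply."""
    result = subprocess.run(
        [sys.executable, "-c", CHILD_CODE],  # the child is a second Python interpreter
        input=message,          # written to the child's stdin pipe
        capture_output=True,    # the child's stdout and stderr come back through pipes
        text=True,              # exchange str instead of bytes
        check=True,             # raise if the child exits with a non-zero status
    )
    return result.stdout


print(ask_child("hello from the parent"))
```

Pipes are only one IPC mechanism; sockets, shared memory, and signals are other common choices.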

Since most operating systems have many background tasks running, your computer is likely to have many more processes running than actual programs. A process can be anything from a small background task, such as a spell-checker or system events handler, to a full-blown application like Internet Explorer or Microsoft Word. For example, you may only have three programs running, but there may be twenty active processes. You can view active processes in Windows by opening the Task Manager (press Ctrl-Alt-Delete and click Task Manager). On a Mac, you can see active processes by opening Activity Monitor (in the Applications → Utilities folder).

The term "process" can also be used as a verb, which means to perform a series of operations on a set of data. For example, your computer's CPU processes information sent to it by various programs. All processes are composed of one or more threads.[4]

Process and Threads[5]

Processes and threads have one goal: getting a computer to do more than one thing at a time. To do that, the processor (or processors) must switch smoothly among several tasks, which requires application programs designed to share the computer's resources.

That is why programmers need to split what programs do into processes and threads.

Every program running on a computer uses at least one process. That process consists of an address space (the part of the computer's memory where the program is running) and a flow of control (a way to know which part of the program the processor is running at any instant). In other words, a process is a place to work and a way to keep track of what a program is doing. When several programs are running at the same time, each has its own address space and flow of control.

To serve multiple users, a process may need to fork, or make a copy of itself, to create a child process. Like its parent process, the child process has its own address space and flow of control. In many setups, terminating a parent process also ends the child processes it has launched, for example when the whole group of processes receives the same termination signal, although this is not guaranteed on every system.
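The forking described above can be sketched with `os.fork`, which is available only on POSIX systems such as Linux and macOS (an assumption of this example; it will not run on Windows). The child changes a variable in its own copy of the address space and reports the value through a pipe, while the parent's copy stays untouched. The names `counter` and `fork_and_modify` are hypothetical.

```python
import os

counter = 0  # lives in the address space of whichever process reads it


def fork_and_modify():
    """Fork a child that changes `counter`; return (child's value, parent's value)."""
    global counter
    read_fd, write_fd = os.pipe()
    pid = os.fork()
    if pid == 0:
        # Child: it works on its own copy of the parent's memory.
        try:
            os.close(read_fd)
            counter += 100
            os.write(write_fd, str(counter).encode())
        finally:
            os._exit(0)  # end the child here so it never runs the parent's code
    # Parent: read the child's report, then wait for the child to finish.
    os.close(write_fd)
    child_value = int(os.read(read_fd, 32).decode())
    os.close(read_fd)
    os.waitpid(pid, 0)
    return child_value, counter


child_value, parent_value = fork_and_modify()
print(child_value, parent_value)  # the child saw 100; the parent still sees 0
```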

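Switching the CPU from one process to another is called a context switch, and it takes time: the operating system must save the state of one process and load the state of the next, including its address space. That time adds up when many programs are running at once or when many users each require several processes running at the same time. The more processes running, the greater the percentage of time the CPU and operating system will spend doing expensive context switches. With enough processes to run, a server might eventually spend almost all of its time switching among processes and do very little real work.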

To avoid that problem, programmers can use threads. A thread is like a child process, except that all the threads associated with a given process share the same address space. For example, when there are many users for the same program, a programmer can write the application so that a new thread is created for each user. Each thread has its own flow of control, but it shares the same address space and most data with all other threads running in the same process. As far as each user can tell, the program appears to be running just for them.

The advantage? It takes much less CPU time to switch among threads than between processes because there's no need to switch address spaces. In addition, because they share address space, threads in a process can communicate more easily with one another.

If the program is running on a computer with multiple processors, a single-threaded process can run on only one CPU at a time, while a threaded program can divide its threads among all available processors. So moving a threaded program to a multiprocessor server can make it run faster.

The downside? Programs using threads are harder to write and debug. Not all programming libraries are designed for use with threads, and not all legacy applications work well alongside threaded code. Some programming tools also make it harder to design and test threaded code.
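The thread-per-user design described above might look roughly like the following sketch, which uses Python's standard `threading` module. All threads append to one shared list because they live in the same address space, and a lock guards that list, which hints at why threaded code needs extra care. The names `serve_users` and `handle` are hypothetical.

```python
import threading


def serve_users(user_names):
    """Run one thread per user; every thread writes into the same shared list."""
    greetings = []            # shared data: visible to every thread in this process
    lock = threading.Lock()   # protects the shared list from simultaneous updates

    def handle(name):
        # Each thread has its own flow of control but uses the shared list.
        with lock:
            greetings.append(f"hello, {name}")

    threads = [threading.Thread(target=handle, args=(name,)) for name in user_names]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()         # wait until every thread has finished
    return sorted(greetings)  # sorted, because thread completion order is not fixed


print(serve_users(["ana", "bo", "cy"]))
```

Note that in the standard CPython interpreter, threads running Python code may not execute on several processors at once, so this sketch shows memory sharing rather than a speedup.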

Process State[6]

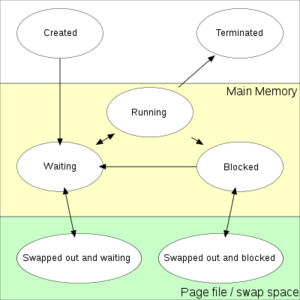

An operating system kernel that allows multitasking needs processes to have certain states. Names for these states are not standardized, but they have similar functionality. The following typical process states are possible on computer systems of all kinds. In most of these states, processes are "stored" in the main memory. The figure below shows the various process states, displayed in a state diagram, with arrows indicating possible transitions between states - as can be seen, some processes are stored in main memory (yellow), and some are stored in secondary memory (green).

- Created: When a process is first created, it occupies the "created" or "new" state. In this state, the process awaits admission to the "ready" state. Admission will be approved or delayed by a long-term, or admission, scheduler. In most desktop computer systems, this admission is approved automatically. In real-time operating systems, however, admission may be delayed, because admitting too many processes to the "ready" state may lead to oversaturation of and contention for the system's resources, leaving the system unable to meet process deadlines.

- Ready: A "ready" or "waiting" process has been loaded into main memory and is awaiting execution on a CPU (to be context switched onto the CPU by the dispatcher, or short-term scheduler). There may be many "ready" processes at any one point of the system's execution. For example, in a one-processor system, only one process can be executed at any one time, and all other "concurrently executing" processes wait for their turn. Although modern computers appear to run many programs or processes at the same time, each CPU core can execute only one process at any instant, so processes that are ready for the CPU are kept in a queue called the ready queue or run queue. Processes that are waiting for an event to occur, such as loading information from a hard drive or waiting on an internet connection, are not in the ready queue.

- Running: A process moves into the running state when it is chosen for execution. The process's instructions are executed by one of the CPUs (or cores) of the system. There is at most one running process per CPU or core. A process can run in either of the two modes, namely kernel mode or user mode.

    - Kernel mode:

        - Processes in kernel mode can access both kernel and user addresses.

        - Kernel mode allows unrestricted access to hardware, including the execution of privileged instructions.

        - Various instructions (such as I/O instructions and halt instructions) are privileged and can be executed only in kernel mode.

        - A system call from a user program leads to a switch to kernel mode.

    - User mode:

        - Processes in user mode can access their own instructions and data but not kernel instructions and data (or those of other processes).

        - When the computer system is executing on behalf of a user application, the system is in user mode. However, when a user application requests a service from the operating system (via a system call), the system must transition from user to kernel mode to fulfill the request.

        - User mode avoids various catastrophic failures:

            - There is an isolated virtual address space for each process in user mode.

            - User mode ensures isolated execution of each process so that it does not affect other processes.

            - No direct access to any hardware device is allowed.

- Blocked: A process transitions to a blocked state when it cannot carry on without an external change in state or event occurring. For example, a process may block on a call to an I/O device such as a printer if the printer is not available. Processes also commonly block when they require user input or require access to a critical section that must be executed atomically. Such critical sections are protected using a synchronization object such as a semaphore or mutex.

- Terminated: A process may be terminated, either from the "running" state by completing its execution or by explicitly being killed. In either of these cases, the process moves to the "terminated" state. The underlying program is no longer executing, but the process remains in the process table as a zombie process until its parent process calls the wait system call to read its exit status, at which point the process is removed from the process table, finally ending the process's lifetime. If the parent fails to call wait, the zombie continues to consume a process table entry (concretely, the process identifier or PID), which causes a resource leak.
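How the dispatcher moves processes between the ready queue, the CPU, and termination can be sketched with a small simulation. The scheduling policy used here, round-robin with a fixed time slice, is an assumption made for the example and is not prescribed by the text above; `round_robin`, `burst_times`, and `quantum` are hypothetical names.

```python
from collections import deque


def round_robin(burst_times, quantum):
    """Simulate one CPU serving a ready queue; return the order in which processes terminate.

    burst_times maps a process name to the CPU time it still needs.
    """
    ready = deque(burst_times.items())  # the ready queue, in arrival order
    finished = []
    while ready:
        name, remaining = ready.popleft()     # dispatcher: ready -> running
        remaining -= min(quantum, remaining)  # run for at most one time slice
        if remaining == 0:
            finished.append(name)             # running -> terminated
        else:
            ready.append((name, remaining))   # running -> ready (preempted)
    return finished


print(round_robin({"A": 5, "B": 2, "C": 4}, quantum=2))  # ['B', 'C', 'A']
```

The simulation leaves out the blocked state and context-switch cost to stay short.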

Additional Process States

Two additional states are available for processes in systems that support virtual memory. In both of these states, processes are "stored" on secondary memory (typically a hard disk).

- Swapped out and waiting: (Also called suspended and waiting.) In systems that support virtual memory, a process may be swapped out, that is, removed from main memory and placed on external storage by the scheduler. From here the process may be swapped back into the waiting state.

- Swapped out and blocked: (Also called suspended and blocked.) Processes that are blocked may also be swapped out. In this event, the process is both swapped out and blocked, and may be swapped back in again under the same circumstances as a swapped out and waiting process (although in this case, the process will move to the blocked state, and may still be waiting for a resource to become available).
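The states and transitions described in this section can be summarized as a small lookup table. The sketch below encodes only the transitions stated in the text, so the arrows in the original figure may differ, and it simplifies killing to happen from the running state. `State`, `TRANSITIONS`, and `follows_rules` are hypothetical names.

```python
from enum import Enum


class State(Enum):
    CREATED = "created"
    READY = "ready"  # also called "waiting" in the text
    RUNNING = "running"
    BLOCKED = "blocked"
    TERMINATED = "terminated"
    SWAPPED_READY = "swapped out and waiting"
    SWAPPED_BLOCKED = "swapped out and blocked"


# Allowed next states for each state, as read from the descriptions above.
TRANSITIONS = {
    State.CREATED: {State.READY},
    State.READY: {State.RUNNING, State.SWAPPED_READY},
    State.RUNNING: {State.READY, State.BLOCKED, State.TERMINATED},
    State.BLOCKED: {State.READY, State.SWAPPED_BLOCKED},
    State.SWAPPED_READY: {State.READY},
    State.SWAPPED_BLOCKED: {State.BLOCKED},
    State.TERMINATED: set(),
}


def follows_rules(path):
    """Return True if every consecutive pair of states in `path` is an allowed transition."""
    return all(nxt in TRANSITIONS[cur] for cur, nxt in zip(path, path[1:]))


lifetime = [State.CREATED, State.READY, State.RUNNING, State.BLOCKED,
            State.READY, State.RUNNING, State.TERMINATED]
print(follows_rules(lifetime))                         # True
print(follows_rules([State.CREATED, State.RUNNING]))   # False: must pass through READY
```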

See Also

- Business Process

- IT Strategy Process

- Process Analysis

- Process Analytics

- Process Architecture

- Process Capability

- Process Classification Framework (PCF)

- Process Control

- Process Flow

- Process Improvement

- Process Mining

- Process Model

- Process Optimization

- Process Performance Measurement

References
