Data Management

What is Data Management?

Data Management is a comprehensive collection of practices, concepts, procedures, processes, and accompanying systems that allow an organization to gain control of its data resources. As an overall practice, Data Management covers the entire lifecycle of a data asset, from its original creation to its final retirement, including how it progresses and changes as it moves through the internal (and external) data streams of an enterprise. The Data Management Body of Knowledge (DMBOK) defines Data Management (or Data Resource Management) as “The development and execution of architectures, policies, practices and procedures that properly manage the full data lifecycle needs of an enterprise.” It also describes it as “The planning, execution, and oversight of policies, practices, and projects that acquire, control, protect, deliver, and enhance the value of data and information assets.”[1]

Data management tasks include the creation of data governance policies, analysis, and architecture; database management system (DBMS) integration; data security; and data source identification, segregation, and storage. Data management encompasses a variety of techniques that facilitate and ensure data control and flow from creation through processing and use to deletion. It is implemented through a cohesive infrastructure of technological resources and a governing framework that defines the administrative processes used throughout the data lifecycle. The field is broad enough that "data management" serves as an umbrella term for an entire segment of IT.[2]

Data management is concerned with the end-to-end lifecycle of data, from creation to retirement, and the controlled progression of data to and from each stage within that lifecycle. Data management minimizes the risks and costs of regulatory non-compliance, legal complications, and security breaches. It also provides access to accurate data when and where it is needed, without ambiguity or conflict, which helps avoid miscommunication. Any kind of business data is subject to data management principles and procedures, but they are particularly useful for resolving conflicts among data from duplicate sources. Organizations that use cloud-based applications in particular can find it hard to keep data consistent across systems. Data management practices can prevent ambiguity and make sure that data conforms to organizational best practices for access, storage, backup, and retirement, among other things. A common approach is Master Data Management (MDM), which maintains a master data file. This file provides a common definition of an asset and all its data properties, in an effort to eliminate ambiguous or competing data policies and give the organization comprehensive stewardship over its data.[3]
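To make the master-data idea more concrete, here is a minimal Python sketch of consolidating duplicate customer records from several systems into one master ("golden") record. It assumes records can be matched on a shared `customer_id` and applies a simple survivorship rule: the most recently updated non-empty value wins. The `SourceRecord` schema, the field names, and the rule itself are illustrative assumptions, not features of any particular MDM product.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List, Optional


@dataclass
class SourceRecord:
    """One customer record as held by a single source system (hypothetical schema)."""
    source: str
    customer_id: str       # business key assumed to be shared across systems
    name: Optional[str]
    email: Optional[str]
    updated: date          # when this system last changed the record


def build_golden_records(records: List[SourceRecord]) -> Dict[str, dict]:
    """Merge records that share a customer_id into one master record each.

    Survivorship rule (assumed): for every attribute, keep the most
    recently updated non-empty value across all sources.
    """
    grouped: Dict[str, List[SourceRecord]] = {}
    for rec in records:
        grouped.setdefault(rec.customer_id, []).append(rec)

    golden: Dict[str, dict] = {}
    for cid, recs in grouped.items():
        newest_first = sorted(recs, key=lambda r: r.updated, reverse=True)
        master: dict = {"customer_id": cid}
        for attr in ("name", "email"):
            # The first non-empty value in newest-first order wins.
            master[attr] = next(
                (getattr(r, attr) for r in newest_first if getattr(r, attr)), None
            )
        master["sources"] = sorted({r.source for r in recs})
        golden[cid] = master
    return golden


# Example: the CRM and billing systems disagree about customer C1.
records = [
    SourceRecord("crm", "C1", "Ada Lovelace", None, date(2023, 1, 5)),
    SourceRecord("billing", "C1", "A. Lovelace", "ada@example.com", date(2024, 3, 1)),
    SourceRecord("crm", "C2", "Grace Hopper", "grace@example.com", date(2022, 7, 9)),
]
golden = build_golden_records(records)
```

Here the billing record supplies both values for C1 because it is newer. A real MDM rule set might instead trust particular systems for particular attributes.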

Evolution of Data Management[4]

Beginning in the 1960s, the Association of Data Processing Service Organizations (ADAPSO) became one of a handful of groups that promoted best practices for data management, especially in professional training and data quality assurance metrics. Over time, "information" became more popular than "data" as a term for the objectives of corporate computing, as seen in the renaming of ADAPSO as the Information Technology Association of America (ITAA) and of the National Microfilm Association as the Association for Information and Image Management (AIIM), but the practices of data management continued to evolve.

In the 1970s, the relational database management system emerged at the center of data management efforts. Based on relational logic, the relational database provided better means of ensuring consistent data processing and of reducing or managing duplicated data, traits that were key for transactional applications. With the rise of the relational database, relational data modeling, schema creation, deduplication, and related techniques became larger parts of common data management practice.

The 1980s saw the creation of the Data Management Association International (DAMA International), chartered to improve data-related education. "Data" arose again as a leading descriptive term when IT professionals began to build data warehouses that used relational techniques for offline data analytics, giving business managers a better view of their organizations' key trends for decision-making. Data warehousing, which improved an organization's view of its operations, called for different treatments of modeling, schema, and change management.

Principles of Data Management[5]

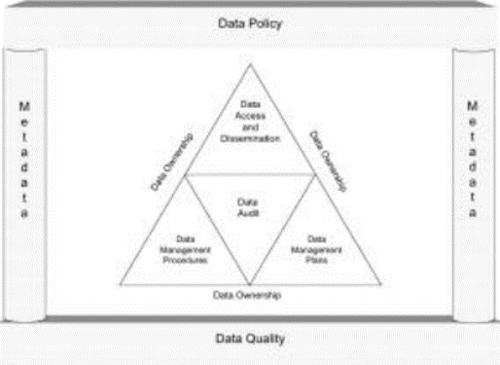

The key principles of Data Management are illustrated in Figure 1 and described below.

Key principles of Data Management

Figure 1. source: IGGI

- Avoid re-collecting data: The largest potential for waste in Data Management is reacquiring an existing dataset. This has been done frequently by public and private sector organizations and must be avoided.

- Data lifecycle control: Good Data Management requires that the whole life cycle of datasets be managed carefully. This includes:

- Business justification, to ensure that thought has been given to why new data are required rather than existing data amended or used in new ways, how data can be specified for maximum use including the potential to meet other possible requirements, and why the costs of handling, storing and maintaining these data are acceptable and recoverable.

- Data specification and modeling, processing, database maintenance, and security, to ensure that data will be fit for purpose and held securely in their own databases.

- Ongoing data audit, to monitor the use and continued effectiveness of the data.

- Archiving and final destruction, to ensure that data are archived and maintained effectively until they are no longer needed or are uneconomical to retain.

- Data policy: The fundamental step for any organization wishing to implement good Data Management procedures is to define a Data Policy. The document may have different names in different public bodies but in each, it should be a set of broad, high-level principles that form the guiding framework within which Data Management can operate.

- Data ownership: One key aspect of good Data Management is the clear identification of the owner of the data. Normally this is the organization or group of organizations that originally commissioned the data acquisition or compilation and retains managerial and financial control of the data. The Data Owner has legal rights over the dataset, including its intellectual property rights (IPR) and copyright. Data ownership implies the right to exploit the data and, if continued maintenance becomes unnecessary or uneconomical, the right to destroy them, subject to the provisions of the Public Records and Freedom of Information Acts. Ownership can relate to a data item, a dataset, or a value-added dataset, and IPR can be owned at different levels. For example, a merged or value-added dataset can be owned by one organization even though other organizations own the constituent data. If legal ownership is unclear, there is a risk that the data will be wrongly exploited, used without payment of royalties to the owner, neglected, or lost.

- Metadata: All datasets must have appropriate metadata compiled for them. At the simplest level, metadata are “data about data”: a summary of the characteristics of a dataset. A good metadata record enables the user of a dataset or other information resource to understand its content, its potential value, and its limitations.

- Data quality: Good Data Management also ensures that datasets are capable of meeting current needs successfully and are suitable for further exploitation. The ability to integrate data with other datasets is likely to add value, encourage ongoing use of the data and recover the costs of collecting the data. The creation, maintenance, and development of quality data require a clear and well-specified management regime.

- Data Steward: All datasets need to be managed by a named individual, referred to here as the Data Steward (also known as the dataset manager or data custodian). A Data Steward should be given formal responsibility for the stewardship of each major dataset and should be accountable for the management and care of the data holdings assigned to them, in line with the defined data policy.

- Data Management Plan: The Data Steward is responsible for the development of a Data Management Plan for each dataset under their responsibility. The objective of the Data Management Plan is to ensure:

- That the dataset is fit for the purpose for which it is required.

- That the long-term management of the dataset is considered for potential re-use.

- Data Management procedures: Individual datasets may require the compilation of specific Data Management procedures. These may be needed where specific datasets require detailed operational procedures to ensure their quality; examples of this include scientific and statistical datasets.

- Data access and dissemination: Although this aspect will depend on the business and financial policy of the organization, the following guidelines should be followed:

- Public access to data should be provided in line with the Freedom of Information Act, the Data Protection Act, and the Human Rights Act.

- IPR and Copyright of datasets owned by public bodies must be protected, as data should be regarded as an asset.

- IPR and Copyright of third-party data must be respected.

- The potential for commercial reuse and exploitation of the dataset should be considered.

- The right to use or provide access to data can be passed to a third party, subject to agreed pricing and dissemination policies.

- Data audit: Data Management audits are recommended to ensure that the management environment for given datasets is being maintained. Their purpose is to provide assurance to the Data Management Champion that the resources expended are being used appropriately. Audits of major datasets should be commissioned to ascertain the level of compliance with data policies and the Data Management plans and procedures that have been prepared.
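The lifecycle, ownership, stewardship, metadata, and audit principles above can be pictured as a simple dataset register. The Python sketch below is one possible shape under stated assumptions: the stage names, the allowed transitions (including reactivating an archived dataset), and the `DatasetEntry` fields are hypothetical simplifications of the list above. The recorded history is the kind of trail a data audit could review.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Tuple


class Stage(Enum):
    """Simplified lifecycle stages (names are illustrative)."""
    JUSTIFIED = "justified"   # business case accepted
    ACTIVE = "active"         # specified, collected, maintained, in use
    ARCHIVED = "archived"     # retained, no longer actively maintained
    DESTROYED = "destroyed"   # final disposal


# Permitted stage changes; any other move is rejected (assumed policy).
ALLOWED = {
    Stage.JUSTIFIED: {Stage.ACTIVE},
    Stage.ACTIVE: {Stage.ARCHIVED},
    Stage.ARCHIVED: {Stage.ACTIVE, Stage.DESTROYED},
    Stage.DESTROYED: set(),
}


@dataclass
class DatasetEntry:
    """One dataset in the register: metadata plus clear accountability."""
    name: str
    owner: str          # organization holding the legal rights (Data Owner)
    steward: str        # named individual accountable for the data (Data Steward)
    description: str    # minimal metadata: content, value, limitations
    stage: Stage = Stage.JUSTIFIED
    history: List[Tuple[Stage, Stage, str]] = field(default_factory=list)

    def move_to(self, new_stage: Stage, reason: str) -> None:
        """Change lifecycle stage and record the reason for later audits."""
        if new_stage not in ALLOWED[self.stage]:
            raise ValueError(
                f"cannot move from {self.stage.value} to {new_stage.value}"
            )
        self.history.append((self.stage, new_stage, reason))
        self.stage = new_stage


# Hypothetical register entry.
entry = DatasetEntry(
    name="road_network",
    owner="Example Highways Agency",
    steward="J. Smith",
    description="Road centrelines (hypothetical example)",
)
entry.move_to(Stage.ACTIVE, "business case approved")
entry.move_to(Stage.ARCHIVED, "superseded by a newer survey")
```

Rejecting undeclared transitions (for example, destroying a dataset that was never archived) is one way to make the lifecycle policy enforceable rather than advisory.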

Data Management Techniques[6]

Data should be easily accessible so that employees can work with it when and where they need to. The Internet has been a useful tool for increasing data accessibility. Making data available online, for example to an employee who is traveling, is one technique for managing large amounts of data.

- Data Entry: Part of data management happens before the data even enters the database. The data must be correct on first entry, which means that the individual originally entering the data must record it correctly. For example, when a customer reports an address change by phone, the customer service representative must hear the customer correctly so that the address is entered correctly into the database. One technique for avoiding errors is to repeat the information back to the customer.

- Data Backup: According to IBM, lost data can harm a company by costing it time as it attempts to replace the data. Companies therefore rely on backup methods that store copies of crucial information so that it can be retrieved if the original is lost.

- Data Cleansing: Data cleansing is the act of fixing incorrect data, consolidating data, and deleting irrelevant data. It uses both automated software programs and manual input from a database administrator. While data cleansing removes errors and can increase company productivity and free up storage space, the process can be expensive and time-consuming.

- Replacement Data: As mentioned above, data is sometimes backed up by software. When a piece of data is altered for whatever reason and stops functioning properly, it can be replaced with the backup copy from a point when it worked. The programmers can then determine which changes corrupted the data and how it can be updated more safely in the future.

- Customer Data Entry: Companies can set up websites that allow customers to enter data directly. This direct data entry saves the company money because it does not need staff to fill out the necessary forms on customers' behalf. Customers can also correct their own data if there is a mistake. However, not all customers are comfortable enough with technology to access online databases through the Internet.

- Double-Checking: Crucial data should always be checked by two pairs of eyes. When one employee edits data, the change should be marked, for example in a different color, so that a second employee can review the edit and confirm that no errors were made.

- Data Management Consultants: Many consultant companies specialize in data quality management. These companies examine how a business carries out data management processes and provide recommendations on how these processes can be improved.
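Data Backup and Replacement Data, described above, both rely on keeping a known-good copy and restoring it after a bad change. The following minimal Python sketch uses only the standard library. It assumes file-based data, a hypothetical local backup directory, and SHA-256 checksums as the way to confirm that a backup is intact before relying on it; production backup systems add versioning, off-site copies, and scheduling.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def back_up(source: Path, backup_dir: Path) -> str:
    """Copy source into backup_dir, verify the copy, and return its checksum."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    target = backup_dir / source.name
    shutil.copy2(source, target)  # copy2 also keeps file timestamps
    checksum = sha256_of(source)
    if sha256_of(target) != checksum:
        raise OSError(f"backup of {source} failed verification")
    return checksum


def restore(source: Path, backup_dir: Path, expected: str) -> None:
    """Replace a damaged file with its backup, but only if the backup is intact."""
    backup = backup_dir / source.name
    if sha256_of(backup) != expected:
        raise OSError(f"backup of {source} is itself corrupted")
    shutil.copy2(backup, source)


# Example run in a throwaway directory.
work = Path(tempfile.mkdtemp())
data = work / "customers.csv"
data.write_bytes(b"id,name\n1,Ada\n")
saved = back_up(data, work / "backup")
data.write_bytes(b"garbled")  # simulate a bad change
restore(data, work / "backup", saved)
```

Storing the checksum at backup time and re-checking it before a restore is what lets the replacement step trust the copy it is about to use.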

Data Management Process[7]

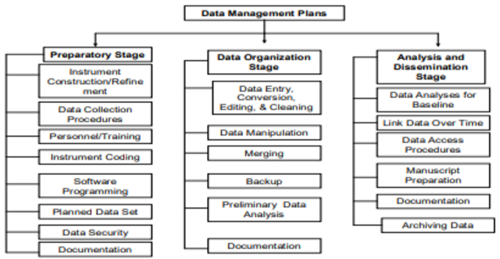

A comprehensive data management plan should be designed to organize data handling processes in order to ensure data integrity and security. The stages, components, and strategies of one such plan are represented in Figure 2. The components outlined in each stage can be used as a step-by-step checklist to ensure that any issues are addressed and properly documented.

The Data Management Process

Figure 2. source: A&M University

- PREPARATORY STAGE: The preparatory stage takes place during the project startup period and includes instrument construction and refinement, data collection procedures, personnel training, instrument coding, software programming for data entry, planning for data set security procedures, and documentation.

- Instrument Construction/Refinement: The structure and format of the research instrument are critical to data accuracy and completeness.

- Data Collection Procedures: Written standardized procedures facilitate consistency in data collection across study participants and data collectors. The adage “garbage in, garbage out” illustrates the issues in the management of raw data.

- Personnel/Training: When multiple data collectors are used, the interviewer training needs to be designed to establish consistency in data collection procedures. Written procedures are developed and included in a training manual to ensure consistency in approach.

- Instrument Coding: During the preparatory stage, data collection instruments and individual items are assigned a code name for ease of data entry and management. Code names should be meaningful and easy to remember. Coding and naming conventions should be standardized for files, variables, programs, and other entities in a data management system.

- Software Programming: Software programs allow for the entry, transfer, and analysis of data. Before data entry begins, the data fields must be defined to ensure accurate entry. It is important to label all data programming steps in order to create a data set history.

- Security Procedures: Data security is one of the most critical components of data management.

- Documentation: The heart of effective data management is in the documentation. Documentation includes information about recruitment sites, data collectors, and participant progression through the study. A tracking system is necessary to document each participant’s progression and completion of required data collection and intervention components.

- DATA ORGANIZATION STAGE

- Data Entry, Conversion, Editing, and Cleaning: Accurate data cleansing is critical to the project’s success; if it is done improperly, the results of the study can be skewed. Data entry needs to be performed by well-trained and responsible individuals, because it requires attention to detail and some individuals are better at this than others. After data entry is complete, several quality checks need to be performed and the data must be examined for accuracy.

- Data Manipulation: Data manipulation also needs to be completed before statistical analysis begins. It includes recoding variables, creating new variables, and constructing scales and subscales. New variables are created, for example, when it is useful to collapse categories into fewer response options to achieve more meaningful results.

- Data Merging: With multiple data collection points in time, each time point is entered into a separate data file. The files then need to be merged to allow analysis of changes in variables across time. The data sets are linked by participant identification numbers, and checks are implemented to validate that the files were merged properly.

- Data Backup: A high priority is the creation of backup electronic copies of all files. Electronic copies of the system codes, data, and other related files are stored on the main server. Additional backup files may be stored on a zip disk. Hard copies of questionnaires may be kept in a locked cabinet in the project office.

- Documentation: During data entry, the data manager documents all item codes and recodes, variable names, the creation of scales and subscales, and any other changes to the data. All steps taken to transform, convert, or manipulate data, as well as file merges, must also be documented.

- ANALYSIS AND DISSEMINATION STAGE

- Preliminary Data Analysis: Preliminary analysis of the data is a valuable step that should be performed before the analyses that test the research hypotheses. It can detect issues that are not specifically related to data quality but may be important in drawing inferences from the data. Preliminary analysis also supports interim reports for dissemination to project staff.

- Baseline Data Analysis: Baseline data analysis includes both descriptive and inferential statistics. Descriptive statistics are reported for each data collection stage. At this stage, an individual with statistical expertise provides consultation and supervises the analysis.

- Linking Longitudinal Data: In longitudinal data with multiple data collection stages, files for each data collection must be merged to enable data analyses of effects and patterns across time.

- Data Access Procedures: Limiting access to the data is a necessary part of the data management process.

- Manuscript Preparation: All research team members need to play a role in planning, developing, and submitting manuscripts. Senior team members can mentor less experienced members in planning analyses and writing reports of findings.

- Documentation: Documentation is also critical during the data analysis and dissemination stage. All data analysis activities must be documented to create an analysis history. A written summary of each analysis is useful for preventing unnecessary duplication of analyses.

- Archiving Data: Archived data include all raw data, the database and the datasets stored in it, all analysis programs, all documentation, and all final standard operating procedures. In the archived data, the link between individual data and data sets is kept separate. Hard copies of raw data and zip disks are secured in a locked storage area used solely for storing archival materials.
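The Data Merging and Linking Longitudinal Data steps in the checklist above can be sketched in Python as follows. This is an illustration under assumptions, not the checklist's own procedure: each wave is a list of rows keyed by a hypothetical `participant_id` field, every variable is suffixed with its wave label (for example `score_t1`) so that time points do not overwrite each other, and the two validation checks shown (duplicate IDs within a wave, participants missing from a wave) are examples of the kind of merge checks the text calls for.

```python
from collections import Counter
from typing import Dict, List

Row = Dict[str, object]


def merge_waves(waves: Dict[str, List[Row]], id_field: str = "participant_id") -> Dict[str, Row]:
    """Link several data-collection waves into one record per participant.

    Every variable is renamed "<variable>_<wave>" so values from different
    time points sit side by side instead of overwriting each other.
    """
    merged: Dict[str, Row] = {}
    for wave, rows in waves.items():
        # Check 1: each participant may appear at most once per wave.
        counts = Counter(row[id_field] for row in rows)
        dupes = sorted(pid for pid, n in counts.items() if n > 1)
        if dupes:
            raise ValueError(f"duplicate IDs in wave {wave}: {dupes}")
        for row in rows:
            pid = row[id_field]
            record = merged.setdefault(pid, {id_field: pid})
            for var, value in row.items():
                if var != id_field:
                    record[f"{var}_{wave}"] = value
    return merged


def attrition_report(waves: Dict[str, List[Row]], id_field: str = "participant_id") -> Dict[str, list]:
    """Check 2: per wave, list participants seen in other waves but missing here."""
    ids_by_wave = {w: {row[id_field] for row in rows} for w, rows in waves.items()}
    everyone = set().union(*ids_by_wave.values())
    return {w: sorted(everyone - ids) for w, ids in ids_by_wave.items()}


# Hypothetical two-wave study.
waves = {
    "t1": [{"participant_id": "P01", "score": 12}, {"participant_id": "P02", "score": 15}],
    "t2": [{"participant_id": "P01", "score": 14}],
}
merged = merge_waves(waves)
report = attrition_report(waves)
```

In this example P02 has no follow-up score, which the attrition report flags. Whether such participants are kept, imputed, or excluded is a study-design decision the sketch does not make.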

Integrated Data Management[8]

Integrated Data Management (IDM) is a tool-based approach to facilitating data management and improving performance. IDM consists of an integrated, modular environment for managing enterprise application data and optimizing data-driven applications over their lifetime. IDM's purpose is to:

- Produce enterprise-ready applications faster

- Improve data access and speed iterative testing

- Empower collaboration between architects, developers, and DBAs

- Consistently achieve service level targets

- Automate and simplify operations

- Provide contextual intelligence across the solution stack

- Support business growth

- Accommodate new initiatives without expanding infrastructure

- Simplify application upgrades, consolidation, and retirement

- Facilitate alignment, consistency, and governance

- Define business policies and standards up front; share, extend, and apply throughout the lifecycle

Data Management Best Practices[9]

The best way to manage data, and eventually gain the insights needed to make data-driven decisions, is to begin with a business question and acquire the data needed to answer it. Companies must collect large amounts of information from various sources and then apply best practices while storing and managing the data, cleaning and mining it, and analyzing and visualizing it to inform their business decisions. It’s important to keep in mind that data management best practices result in better analytics: by correctly managing and preparing data for analysis, companies get more value from their Big Data. A few data management best practices that organizations and enterprises should strive to achieve include:

- Simplify access to traditional and emerging data

- Scrub data to infuse quality into existing business processes

- Shape data using flexible manipulation techniques
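As a minimal illustration of the "scrub data" practice, the Python sketch below standardizes, validates, and de-duplicates simple customer rows. The field names, the deliberately loose e-mail pattern, and the rule that two rows with the same e-mail address describe the same customer are all assumptions made for this example. Invalid rows are returned separately so they can be reviewed rather than silently discarded.

```python
import re
from typing import Dict, List, Tuple

# Deliberately loose pattern: something@something.something, no spaces.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def scrub(rows: List[Dict[str, str]]) -> Tuple[List[Dict[str, str]], List[Dict[str, str]]]:
    """Standardize, validate, and de-duplicate customer rows.

    Returns (clean_rows, rejected_rows) so invalid input can be reviewed
    instead of being silently dropped.
    """
    clean: List[Dict[str, str]] = []
    rejected: List[Dict[str, str]] = []
    seen = set()
    for row in rows:
        name = " ".join(row.get("name", "").split())  # trim and collapse spaces
        email = row.get("email", "").strip().lower()
        if not name or not EMAIL_RE.match(email):
            rejected.append(row)
            continue
        if email in seen:  # same e-mail assumed to be the same customer
            continue       # keep only the first occurrence
        seen.add(email)
        clean.append({"name": name, "email": email})
    return clean, rejected


raw = [
    {"name": "  Ada   Lovelace ", "email": "ADA@Example.com "},
    {"name": "Ada Lovelace", "email": "ada@example.com"},
    {"name": "", "email": "nobody@example.com"},
    {"name": "Grace Hopper", "email": "not-an-email"},
]
clean, rejected = scrub(raw)
```

Here the first two rows collapse into one clean record, and the rows with a missing name or malformed address end up in `rejected` for manual follow-up.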

Data Management Benefits[10]

Having a strong data management plan is very important for the success of a company. Below are some of the benefits of a strong data management plan.

- Productivity: Good data management will make your organization more productive. On the flip side, poor data management will lead to your organization being very inefficient. Good data management makes it easier for employees to find and understand the information that they need to do their job. In addition, it allows them to easily validate the results or conclusions they may have. It also provides the structure for information to be easily shared with others and stored for future reference and easy retrieval.

- Cost Efficiency: Proper data management also allows your organization to avoid unnecessary duplication. Storing all data and making it easy to reference helps ensure that employees do not repeat research, analysis, or work that another employee has already completed.

- Operational Nimbleness: In business, the speed at which a company can make decisions and change direction is a key factor in determining how successful a company can be. If a company takes too long to react to the market or its competitors it can spell disaster for the company. A good data management system can allow employees to access information and be notified of market or competitor changes faster. As a result, it allows a company to make decisions and take action significantly faster than companies that have poor data management and data sharing systems.

- Security Risks: If your data is not managed properly, there are multiple risks, including your information falling into the hands of the wrong people. For example, electronics giant Sony suffered a computer attack in 2011 that compromised the personal data of about 77 million PlayStation Network accounts. A strong data management system can greatly reduce the risk of this happening to your organization.

- Reduced Instances of Data Loss: A data management system and plan that all your employees know and follow can greatly reduce the risk of losing vital information. A data management plan puts measures in place to ensure that important information is backed up and can be retrieved from a secondary source if the primary source ever becomes inaccessible.

- More Accurate Decisions: Many organizations use different sources of information for planning, trend analysis, and performance management. Without a data management process, different employees within an organization may even use different sources of information to perform the same task because they do not know which source is correct. Information is only as valuable as its source: garbage in, garbage out. Without a data management system, decision-makers across the organization often analyze different numbers when making decisions that will affect the company, which can lead to poor or inaccurate conclusions. Data entry errors, conclusion errors, and processing inefficiencies are all risks for companies that don’t have a strong data management plan and system. The corrective costs of inadequate data management can be significant and can run into millions of dollars from a single occurrence. The primary reasons for bad data and data loss are that no data management system or plan is in place, or that the plan or system is of poor quality. Unfortunately, organizations often realize they have a problem only after an issue arises. Instead of being proactive, most organizations are reactive, which in the long run costs them significantly more.

Data Management Challenges[11]

The good news: we’ve got more data than ever before to help us make better business decisions. The bad news: we’re drowning in that data and it’s becoming increasingly difficult to use it effectively. It’s a case of too much data, too little time, and too many lost opportunities. Unfortunately, high volume isn’t the only data challenge. There are numerous obstacles that can impede data analysis. Many organizations have been “limping along” dealing with these challenges manually or using workarounds that simply aren’t scalable. But growing volumes exacerbate the problem and turn these data speed bumps into roadblocks. Here are five common roadblocks to watch out for:

- Static reporting data: Chances are, your organization has millions of documents, from purchase orders to patient files to inventory records, packed with intelligence you can’t use. Why? Because this type of data lacks context and interactivity, which decreases your ability to provide comparisons and drive improvement.

- Data extraction and manipulation: Having to rely solely on your IT staff to access data limits you and puts pressure on them. What’s more, you then need to put your data into a logical order – hopefully without error. Getting access to the underlying data you need from existing reports, web pages, and PDF documents in a timely fashion is critical in order to stay competitive.

- Integration of disparate data: Providing a unified view of your data from a range of sources, including PDFs, HTML, text documents, and Excel files, is typically a laborious, time-consuming exercise. (Just ask any of your IT staff.) Consolidating from so many sources can also cause unintentional errors. You need to be able to generate clean reports and assess their contents in order to make important and impactful decisions.

- Information and distribution challenges: If you can’t share valuable insights from sources such as annual sales report stats or warehouse inventory projections, what good is having the data in the first place? If you have bandwidth or network issues, you’re likely suffering from inefficient data delivery. Users need to be able to quickly access, manipulate and blend any type of data.

- Business intelligence (BI) challenges: From complexity to poor quality data to difficult-to-use data tools, the BI challenges are plentiful. If your everyday business staff doesn’t know how to use the systems you have in place, they simply won’t use them at all. Did we mention that BI challenges can lead to a lost competitive advantage?

See Also

Data Management encompasses the practices, architectural techniques, and tools for achieving consistent access to and delivery of data across the spectrum of data subject areas and data structure types in the enterprise. It's a broad area that includes several key practices and concepts essential for ensuring that data is accurate, available, and secure.

- Data Governance: The overall management of the availability, usability, integrity, and security of the data employed in an organization. This includes establishing policies, standards, and procedures to manage data across the enterprise.

- Data Quality: Covering the processes and technologies involved in ensuring that data is accurate, complete, and reliable. This might include data validation, cleansing, and enrichment practices.

- Data Security: Discussing the strategies and tools used to protect data from unauthorized access, corruption, or theft. This includes encryption, access control, and compliance with data protection regulations.

- Data Privacy: Highlighting the importance of managing personal data in compliance with privacy laws and standards, such as GDPR (General Data Protection Regulation) in the EU, to protect the rights of individuals.

- Database Management System (DBMS): Exploring the software tools that enable users to store, retrieve, and manage data in databases, including relational databases, NoSQL databases, and in-memory databases.

- Data Warehouse: Covering the design, implementation, and use of data warehouses for the consolidation of data from multiple sources for analysis and reporting.

- Business Intelligence (BI): Discussing the technologies and practices for the collection, integration, analysis, and presentation of business information, supporting decision making.

- Big Data: Exploring the concepts, technologies, and challenges associated with managing and analyzing very large sets of data, including data lakes, Hadoop, and Spark.

- Data Architecture: Highlighting the structural design of data-related elements and the data management resources that form the enterprise architecture.

- Data Integration: Covering the processes and technologies involved in combining data from different sources into a unified view, including ETL (extract, transform, load) processes and data virtualization.

- Data Analytics: Discussing the techniques and tools used to analyze data to find trends, patterns, and insights, including descriptive, predictive, and prescriptive analytics.

- Master Data Management (MDM): Explaining the process of creating and maintaining a single, consistent, and accurate view of key enterprise data (such as customer, product, employee data) across the organization.

- Data Lifecycle Management (DLM): Covering the policies, processes, and tools used to manage the flow of data through its lifecycle, from creation and initial storage to the time when it becomes obsolete and is deleted.

- Metadata Management

- Customer Data Management (CDM)

References

- ↑ Defining Data Management

- ↑ Explaining Data Management

- ↑ Understanding Data Management

- ↑ Evolution of Data Management

- ↑ Principles of Data Management

- ↑ The Art of Data Management

- ↑ A Step by Step Checklist of Data Management

- ↑ Integrated Data Management

- ↑ What are some Best Practices in Data Management?

- ↑ What are the Benefits of Data Management?

- ↑ What are the Challenges to Data Management?