Data Quality

Data Quality (DQ) is a measure of the properties of data from several perspectives. It is a comprehensive examination of the efficiency, reliability and fitness for use of data, especially data residing in a data warehouse. Within an organization, adequate data quality is vital for transactional and operational processes, as well as for the longevity of business intelligence (BI) and business analytics (BA) reporting. Data quality may be affected by the way in which data is entered, handled and maintained.[1]

Data Quality often has the following dimensions:

- Accuracy

- Completeness

- Consistency

- Integrity

- Reasonability

- Timeliness

- Uniqueness/Deduplication

- Validity

- Accessibility[2]

History of Data Quality[3]

Before the rise of inexpensive computer data storage, massive mainframe computers were used to maintain name and address data for delivery services so that mail could be properly routed to its destination. The mainframes used business rules to correct common misspellings and typographical errors in name and address data, as well as to track customers who had moved, died, gone to prison, married, divorced, or experienced other life-changing events. Government agencies began to make postal data available to a few service companies to cross-reference customer data with the National Change of Address registry (NCOA). This technology saved large companies millions of dollars compared with manual correction of customer data, and it also saved on postage, as bills and direct marketing materials reached the intended customer more accurately.

Initially sold as a service, data quality moved inside the walls of corporations as low-cost and powerful server technology became available. Companies with an emphasis on marketing often focused their quality efforts on name and address information, but data quality is recognized as an important property of all types of data. Principles of data quality can be applied to supply chain data, transactional data, and nearly every other category of data. For example, making supply chain data conform to a certain standard has value to an organization by:

1) avoiding overstocking of similar but slightly different stock;

2) avoiding false stock-out;

3) improving the understanding of vendor purchases to negotiate volume discounts; and

4) avoiding logistics costs in stocking and shipping parts across a large organization.

For companies with significant research efforts, data quality can include developing protocols for research methods, reducing measurement error, bounds checking of data, cross tabulation, modeling and outlier detection, verifying data integrity, etc.
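Two of the research-oriented checks mentioned above, bounds checking and outlier detection, can be illustrated with a minimal Python sketch. The function names, the temperature readings and the choice of the interquartile-range (IQR) rule are assumptions made for illustration; other outlier methods are equally valid.

```python
from statistics import quantiles


def out_of_bounds(values, lower, upper):
    """Return (index, value) pairs that fall outside the closed range [lower, upper]."""
    return [(i, v) for i, v in enumerate(values) if not (lower <= v <= upper)]


def iqr_outliers(values, k=1.5):
    """Return values lying more than k * IQR below the first or above the third quartile."""
    q1, _, q3 = quantiles(values, n=4)
    spread = q3 - q1
    low, high = q1 - k * spread, q3 + k * spread
    return [v for v in values if v < low or v > high]


# Hypothetical body-temperature readings in degrees Celsius.
readings = [36.6, 36.8, 37.1, 36.9, 36.7, 41.9, 36.5, -3.0]

# Bounds checking uses limits fixed in advance (assumed here to be 30 to 45).
print(out_of_bounds(readings, 30.0, 45.0))

# Outlier detection derives its limits from the data themselves.
print(iqr_outliers(readings))
```

Bounds checking catches values that are impossible by definition, whereas the IQR rule also flags values that are possible but unusual relative to the rest of the sample.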

Data Quality - Activities[4]

Data quality activities involve data rationalization and validation. Data quality efforts are often needed while integrating disparate applications that occur during merger and acquisition activities, but also when siloed data systems within a single organization are brought together for the first time in a data warehouse or big data lake. Data quality is also critical to the efficiency of horizontal business applications such as enterprise resource planning (ERP) or customer relationship management (CRM).

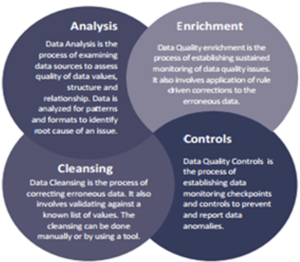

Data Quality Framework (Figure 1.)[5]

A Data Quality (DQ) framework examines the completeness, validity, consistency, timeliness and accuracy of enterprise data, as it moves from source to reporting. It also defines data enrichment and enhancement strategies to address any data quality issues with well-defined data control points.

Figure 1. source: CrossCountry

Dimensions of Data Quality (Figure 2.)[6]

To maintain the accuracy and value of the business-critical operational information that impacts strategic decision-making, businesses should implement a data quality strategy that embeds data quality techniques into their business processes, enterprise applications and data integration. On the surface, it is obvious that data quality is about cleaning up bad data: data that are missing, incorrect or invalid in some way. But in order to ensure data are trustworthy, it is important to understand the key dimensions of data quality to assess how the data are “bad” in the first place.

- Completeness: Completeness is defined as expected comprehensiveness. Data can be complete even if optional data is missing. As long as the data meets the expectations then the data is considered complete. For example, a customer’s first name and last name are mandatory but middle name is optional; so a record can be considered complete even if a middle name is not available. Questions you can ask yourself: Is all the requisite information available? Do any data values have missing elements? Or are they in an unusable state?
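The completeness test described above can be sketched in Python as follows. The field names, the `MANDATORY` tuple and the sample records are hypothetical; the only point taken from the text is that optional fields such as a middle name do not count against completeness.

```python
# Assumption: first and last name are mandatory, middle name is optional.
MANDATORY = ("first_name", "last_name")


def missing_fields(record, mandatory=MANDATORY):
    """Return the mandatory fields that are absent, None, or blank."""
    return [f for f in mandatory if not str(record.get(f) or "").strip()]


def completeness_ratio(records, mandatory=MANDATORY):
    """Return the share of records in which every mandatory field is filled."""
    if not records:
        return 1.0
    complete = sum(1 for r in records if not missing_fields(r, mandatory))
    return complete / len(records)


customers = [
    {"first_name": "Ada", "middle_name": None, "last_name": "Lovelace"},
    {"first_name": "Alan", "last_name": "  "},  # blank last name: incomplete
    {"first_name": "Grace", "middle_name": "Brewster", "last_name": "Hopper"},
]

for customer in customers:
    print(customer["first_name"], missing_fields(customer))
print(completeness_ratio(customers))
```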

- Consistency: Consistency means that data across all systems reflect the same information and are in sync with each other across the enterprise. Examples of inconsistency:

- A business unit status is closed but there are sales for that business unit.

- Employee status is terminated but pay status is active.

Questions you can ask yourself: Are data values the same across the data sets? Are there any distinct occurrences of the same data instances that provide conflicting information?
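The terminated-employee example above lends itself to a simple cross-system check. The sketch below assumes two hypothetical record sets, one from an HR system and one from payroll, joined on an `employee_id` field; the field names and status values are illustrative.

```python
def conflicting_employees(hr_records, payroll_records):
    """Return ids of employees who are terminated in HR but still active in payroll."""
    pay_status = {p["employee_id"]: p["pay_status"] for p in payroll_records}
    return [
        e["employee_id"]
        for e in hr_records
        if e["status"] == "terminated"
        and pay_status.get(e["employee_id"]) == "active"
    ]


hr = [
    {"employee_id": 1, "status": "active"},
    {"employee_id": 2, "status": "terminated"},
    {"employee_id": 3, "status": "terminated"},
]
payroll = [
    {"employee_id": 1, "pay_status": "active"},
    {"employee_id": 2, "pay_status": "active"},   # conflicts with the HR record
    {"employee_id": 3, "pay_status": "stopped"},
]

print(conflicting_employees(hr, payroll))
```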

- Conformity: Conformity means the data follow the set of standard data definitions, such as data type, size and format. For example, a customer’s date of birth is in the format “mm/dd/yyyy”.

Questions you can ask yourself: Do data values comply with the specified formats? If so, do all the data values comply with those formats? Maintaining conformance to specific formats is important.
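A format check for the “mm/dd/yyyy” example can be sketched with the Python standard library. The function name is hypothetical, and the strict zero-padding requirement is an assumption about how literally the format should be read.

```python
from datetime import datetime


def conforms_to_date_format(value, fmt="%m/%d/%Y"):
    """Return True if value is a real calendar date written exactly in fmt."""
    try:
        parsed = datetime.strptime(value, fmt)
    except (TypeError, ValueError):
        # Not a string, wrong layout, or an impossible date such as 02/30.
        return False
    # strptime accepts unpadded input such as "1/5/1990", so format the
    # parsed date back and require an exact match with the original text.
    return parsed.strftime(fmt) == value


for candidate in ["02/29/2024", "02/30/2024", "1990-01-05", "1/5/1990"]:
    print(candidate, conforms_to_date_format(candidate))
```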

- Accuracy: Accuracy is the degree to which data correctly reflect the real-world object or event being described. Examples:

- The sales recorded for a business unit match the actual sales.

- Address of an employee in the employee database is the real address.

Questions you can ask yourself: Do data objects accurately represent the “real world” values they are expected to model? Are there incorrect spellings of product or person names or addresses, or data that are out of date? These issues can impact operational and analytical applications.
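Accuracy can only be measured against something that is trusted to represent the real world. The sketch below assumes such a reference exists (for example, a verified address file); the `recorded` and `reference` dictionaries, keyed by a hypothetical employee id, and the normalisation rule are illustrative.

```python
def normalise(text):
    """Lower-case the text and collapse repeated whitespace before comparing."""
    return " ".join(text.lower().split())


def accuracy_rate(recorded, reference):
    """Return the share of verifiable records whose value matches the reference.

    Records with no entry in the reference cannot be verified and are skipped.
    Returns None when nothing can be verified.
    """
    checked = [key for key in recorded if key in reference]
    if not checked:
        return None
    matches = sum(
        1 for key in checked if normalise(recorded[key]) == normalise(reference[key])
    )
    return matches / len(checked)


recorded = {101: "12 Main St", 102: "9 Elm  Road", 103: "4 Oak Ave", 104: "1 Pine Ln"}
reference = {101: "12 main st", 102: "9 Elm Road", 103: "40 Oak Ave"}

print(accuracy_rate(recorded, reference))
```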

- Integrity: Integrity means validity of data across relationships and ensures that all data in a database can be traced and connected to other data. For example, in a customer database, there should be valid customers, addresses and relationships between them. If address relationship data exist without a customer, those data are not valid and are considered orphaned records.

Ask yourself: Are any data missing important relationship linkages? The inability to link related records together may actually introduce duplication across your systems.
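The orphaned-record case described above can be detected by comparing foreign keys with the set of known parent keys. This is a minimal sketch; the `customer_id` and `address_id` field names and the sample rows are assumptions.

```python
def orphaned_addresses(customers, addresses):
    """Return address rows whose customer_id matches no existing customer."""
    known_ids = {c["customer_id"] for c in customers}
    return [a for a in addresses if a["customer_id"] not in known_ids]


customers = [{"customer_id": 1, "name": "Ada"}, {"customer_id": 2, "name": "Alan"}]
addresses = [
    {"address_id": 10, "customer_id": 1, "city": "London"},
    {"address_id": 11, "customer_id": 2, "city": "Manchester"},
    {"address_id": 12, "customer_id": 7, "city": "Leeds"},  # no customer 7
]

print(orphaned_addresses(customers, addresses))
```

In a relational database the same rule is usually enforced by a foreign-key constraint; a check like this is useful for data that arrive from systems without such constraints.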

- Timeliness: Timeliness refers to whether information is available when it is expected and needed. Timeliness of data is very important. This is reflected in:

- Companies that are required to publish their quarterly results within a given frame of time

- Customer service providing up-to-date information to the customers

- Credit system checking in real-time on the credit card account activity

Timeliness depends on user expectations. Online availability of data could be required for a room allocation system in hospitality, but nightly data could be perfectly acceptable for a billing system.
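Because the acceptable age of data differs by use case, a timeliness check can take the tolerance as a parameter. In the sketch below, the one-minute and 24-hour tolerances and the timestamps are assumptions chosen to mirror the room allocation and billing examples.

```python
from datetime import datetime, timedelta, timezone


def is_timely(last_updated, max_age, now=None):
    """Return True if the data were refreshed within max_age of now."""
    now = now or datetime.now(timezone.utc)
    return now - last_updated <= max_age


# Fixed timestamps so the example is reproducible.
now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
last_load = datetime(2024, 1, 2, 3, 0, tzinfo=timezone.utc)  # nine hours earlier

# A nightly load is fresh enough for billing but not for room allocation.
print(is_timely(last_load, timedelta(hours=24), now))
print(is_timely(last_load, timedelta(minutes=1), now))
```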

Figure 2. source: Smartbridge

The Data Quality Cycle (Figure 3.)[7]

The foundational components of the Data Quality Cycle required to Discover, Profile, Establish Rules, Monitor, Report, Remediate, and continuously improve Data Quality are illustrated in the figure below:

- Data Discovery: The process of finding, gathering, organizing and reporting metadata about your data (e.g., files/tables, record/row definitions, field/column definitions, keys)

- Data Profiling: The process of analyzing your data in detail, comparing the data to its metadata, calculating data statistics and reporting the measures of quality for the data at a point in time

- Data Quality Rules: Based on the business requirements for each Data Quality measure, the business and technical rules that the data must adhere to in order to be considered of high quality

- Data Quality Monitoring: The ongoing monitoring of Data Quality, based on the results of executing the Data Quality rules, the comparison of those results to defined error thresholds, the creation and storage of Data Quality exceptions and the generation of appropriate notifications

- Data Quality Reporting: The reporting, dashboards and scorecards used to report and trend ongoing Data Quality measures and to drill down into detailed Data Quality exceptions

- Data Remediation: The ongoing correction of Data Quality exceptions and issues as they are reported
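The rules, monitoring and reporting steps listed above can be sketched together in a few lines of Python. The `Rule` class, the `monitor` function, the two sample rules and their thresholds are all hypothetical; a production system would typically persist the exceptions and send real notifications rather than return a flag.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    name: str
    check: Callable[[dict], bool]  # returns True when a record passes the rule
    max_error_rate: float          # threshold above which a notification is due


def monitor(records, rules):
    """Run every rule, collect failing records, and compare error rates to thresholds."""
    report = []
    for rule in rules:
        exceptions = [r for r in records if not rule.check(r)]
        error_rate = len(exceptions) / len(records) if records else 0.0
        report.append({
            "rule": rule.name,
            "error_rate": error_rate,
            "exceptions": exceptions,                     # input to remediation
            "notify": error_rate > rule.max_error_rate,   # threshold breached
        })
    return report


rules = [
    Rule("email_present", lambda r: bool(r.get("email")), max_error_rate=0.10),
    Rule(
        "age_in_range",
        lambda r: isinstance(r.get("age"), int) and 0 <= r["age"] <= 120,
        max_error_rate=0.50,
    ),
]
records = [
    {"id": 1, "email": "a@example.com", "age": 34},
    {"id": 2, "email": "", "age": 29},
    {"id": 3, "email": "c@example.com", "age": 430},
    {"id": 4, "email": "d@example.com", "age": 51},
]

for line in monitor(records, rules):
    print(line["rule"], line["error_rate"], line["notify"])
```

Keeping the threshold on the rule itself lets each measure have its own tolerance, which matches the idea that error thresholds are defined per Data Quality rule.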

Figure 3. source: Knowledgent

The challenges of Data Quality[8]

Because big data has the 4V characteristics (commonly given as volume, velocity, variety and value), extracting high-quality, genuine data from massive, variable and complicated data sets becomes an urgent issue when enterprises use and process big data. At present, big data quality faces the following challenges:

- The diversity of data sources brings abundant data types and complex data structures and increases the difficulty of data integration.

- Data volume is tremendous, and it is difficult to judge data quality within a reasonable amount of time.

- Data change very quickly and remain timely only for a short period, which places higher demands on processing technology.

- No unified and approved data quality standards have been formed, and research on the data quality of big data has just begun.

Benefits of Good Data Quality[9]

When considering the business value of good data quality, the primary purpose is to make a business more efficient and profitable. The 451 Group research that tabulated the top 5 benefits below included other downstream benefits, such as “better supplier performance” and “more informed decision making.”

- Increased revenues: When a business is able to make decisions on a foundation of high quality, validated data, positive top-line outcomes are a likely result. Unreliable data results in less confident decisions that can often lead to missteps and rework that don’t deliver increased revenues.

- Reduced costs: If data quality enables an organization to complete a project correctly the first time around, it also enables the organization to operate more efficiently and complete more projects. Project delays due to course corrections burn through budgets and slow business growth.

- Less time spent reconciling data: Manually reconciling data is a time sink that consumes costly resources, as manual reconciliation does not scale. As data sources and associated error rates increase, the law of diminishing returns impedes progress, which implies that rules-based automation can help contain reconciliation time and effort.

- Greater confidence in analytical systems: Organizations often use data in which they have little confidence. The 451 Research study revealed that 60 percent of executives lack confidence in their organizations’ data quality, yet, alarmingly, some data, even if flawed, are considered better than no data when deadlines loom. Data quality tools can help ensure that only trusted data are used for decision-making, which, in turn, increases confidence in those analytical systems.

- Increased customer satisfaction: Imagine calling your IT service provider and always having to tell it the model number, OS version and patch levels for your PC. It would be much better if the provider always knew the versions of everything you had installed on your system when you called. IT customer service is a very data-intensive operation that benefits greatly from up-to-date, validated information. In the world of IT Service Management (ITSM), knowledge helps to drive customer satisfaction.

See Also

Data Cleansing

Data Management

Data Warehouse

Customer Data Management (CDM)

Customer Data Integration

Big Data

Business Intelligence

Data Analysis

Data Analytics

Predictive Analytics

References

- [1] What is Data Quality, Techopedia

- [2] Data Quality Dimensions, Dataversity

- [3] History of Data Quality, Wikipedia

- [4] What activities are involved in data quality?, Informatica

- [5] Data Quality Framework, CrossCountry

- [6] 6 Dimensions of Data Quality, Smartbridge

- [7] The Data Quality Cycle, Knowledgent

- [8] The challenges of Data Quality, Li Cai, Yangyong Zhu

- [9] Top 5 Benefits of good data quality, Pradeep Bhanot

Further Reading

- Committing to Data Quality Review Limor Peer, Ann Green, Elizabeth Stephenson

- 20 Years of Data Quality Research: Themes, Trends and Synergies Shazia Sadiq, Naiem Khodabandehloo Yeganeh, Marta Indulska

- Barriers to master data quality Anders Haug, Jan Stentoft Arlbjørn

- Big data and the legal framework for data quality Thomas Hoeren