Artificial Neural Network (ANN)

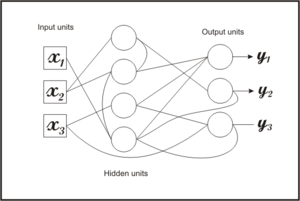

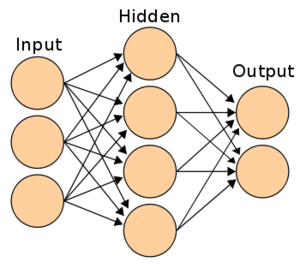

Artificial Neural Networks (ANN) are one of the main tools used in machine learning. As the “neural” part of their name suggests, they are brain-inspired systems which are intended to replicate the way that we humans learn. Neural networks consist of input and output layers, as well as (in most cases) a hidden layer consisting of units that transform the input into something that the output layer can use. They are excellent tools for finding patterns which are far too complex or numerous for a human programmer to extract and teach the machine to recognize.[1]

How Artificial Neural Network (ANN) Works

How do artificial neural networks work?[2]

Artificial neural networks use different layers of mathematical processing to make sense of the information they are fed. Typically, an artificial neural network has anywhere from dozens to millions of artificial neurons, called units, arranged in a series of layers. The input layer receives various forms of information from the outside world. This is the data that the network aims to process or learn about. From the input layer, the data passes through one or more hidden layers. The hidden layers' job is to transform the input into something the output layer can use.

source: Analytics Vidhya

The majority of neural networks are fully connected from one layer to another. These connections are weighted; the higher the weight, the greater the influence one unit has on another, similar to a human brain. As the data passes through each unit, the network learns more about the data. On the other side of the network are the output units, where the network responds to the data that it was given and processed.
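
The flow described above (input units, weighted connections, hidden units, output units) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation: the sigmoid activation, the layer sizes, and every weight value below are hypothetical choices made only for the example.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dense_layer(inputs, weights, biases):
    """Compute one fully connected layer.

    weights[j][i] is the weight on the connection from input unit i
    to unit j of this layer; a larger weight gives that input more
    influence on the unit's activation.
    """
    return [
        sigmoid(sum(w * x for w, x in zip(unit_weights, inputs)) + bias)
        for unit_weights, bias in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Pass the inputs through each (weights, biases) layer in turn."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


# Hypothetical network: 2 input units -> 3 hidden units -> 1 output unit.
HIDDEN = ([[0.5, -0.6], [0.3, 0.8], [-0.2, 0.1]], [0.0, 0.1, -0.1])
OUTPUT = ([[1.0, -1.0, 0.5]], [0.2])

output = forward([1.0, 0.0], [HIDDEN, OUTPUT])
print(output)  # a single activation between 0 and 1
```

Each layer is just a list of weighted sums followed by an activation, so adding more hidden layers only means adding more `(weights, biases)` pairs to the list passed to `forward`.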

Cognitive neuroscientists have learned a tremendous amount about the human brain since computer scientists first attempted the original artificial neural network. One of the things they learned is that different parts of the brain are responsible for processing different aspects of information and that these parts are arranged hierarchically. So, input comes into the brain, each level of neurons provides insight, and then the information is passed on to the next, more senior level. That is precisely the mechanism that ANNs are trying to replicate.

In order for ANNs to learn, they need a tremendous amount of information, called a training set. When you are trying to teach an ANN to differentiate a cat from a dog, the training set would provide thousands of images tagged as cat or dog so the network could begin to learn. Once it has been trained with a significant amount of data, it will try to classify future data based on what it thinks it is seeing (or hearing, depending on the data set) throughout the different units. During the training period, the machine's output is compared to the human-provided description of what should be observed. If they are the same, the machine is validated. If it is incorrect, it uses backpropagation to adjust its learning, going back through the layers to tweak the connection weights. When applied to networks with many layers, this is known as deep learning, and it is what makes a network intelligent.
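
The compare-and-adjust loop described above can be sketched as follows. This is an illustrative toy, not the method of any particular library. It assumes a network with one hidden layer of three sigmoid units, a squared-error comparison, and a four-example training set for the logical OR function standing in for the tagged cat and dog images. The names `train_step` and `mean_squared_error`, the learning rate, and the epoch count are all hypothetical.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def forward(x, w_hidden, w_out):
    """Return (hidden activations, output). The last weight in each row is a bias."""
    hidden = [sigmoid(sum(w * v for w, v in zip(row, x + [1.0]))) for row in w_hidden]
    output = sigmoid(sum(w * v for w, v in zip(w_out, hidden + [1.0])))
    return hidden, output


def train_step(x, target, w_hidden, w_out, lr):
    """Compare the output with the label, then push the error back through the layers."""
    hidden, output = forward(x, w_hidden, w_out)
    # Error signal at the output unit (squared error through the sigmoid).
    delta_out = (output - target) * output * (1.0 - output)
    # Error signal at each hidden unit, computed before the output weights change.
    delta_hidden = [
        delta_out * w_out[j] * hidden[j] * (1.0 - hidden[j])
        for j in range(len(hidden))
    ]
    # Adjust the weights in place, moving against the gradient.
    for j, v in enumerate(hidden + [1.0]):
        w_out[j] -= lr * delta_out * v
    for j, row in enumerate(w_hidden):
        for i, v in enumerate(x + [1.0]):
            row[i] -= lr * delta_hidden[j] * v


def mean_squared_error(data, w_hidden, w_out):
    return sum((forward(x, w_hidden, w_out)[1] - t) ** 2 for x, t in data) / len(data)


# Toy training set (an assumption for illustration): inputs labelled with logical OR.
DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]

rng = random.Random(0)  # fixed seed so the run is repeatable
w_hidden = [[rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(3)]
w_out = [rng.uniform(-1.0, 1.0) for _ in range(4)]

loss_before = mean_squared_error(DATA, w_hidden, w_out)
for _ in range(2000):
    for x, t in DATA:
        train_step(x, t, w_hidden, w_out, lr=0.5)
loss_after = mean_squared_error(DATA, w_hidden, w_out)
print(loss_before, loss_after)  # the error should shrink as training proceeds
```

A real image classifier differs in scale rather than in kind: the inputs are pixel values, the layers are much larger, and the training set holds thousands of labelled examples instead of four.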

Types of Artificial Neural Networks (ANN)

What kinds are there?[3]

Beyond deep learning, it is important to know that there are many different architectures of artificial neural networks. The typical ANN is set up so that each neuron is connected to every neuron in the next layer. These are specifically called feedforward artificial neural networks (even though ANNs are generally all feedforward). We have learned that by connecting neurons to other neurons in certain patterns, we can get even better results in specific scenarios.

- Recurrent Neural Networks: Recurrent Neural Networks (RNN) were created to address a flaw in standard artificial neural networks: they did not make decisions based on previous inputs. A typical ANN learned to make decisions based on context during training, but once deployed, each of its decisions was made independently of the others.

RNNs are very useful in natural language processing since prior words or characters are useful in understanding the context of another word. There are plenty of different implementations, but the intention is always the same: to retain information. We can achieve this with bi-directional RNNs, or by implementing a recurrent hidden layer that is updated with each forward pass.

- Convolutional Neural Networks: Convolutional Neural Networks (CNN), of which LeNet (named after Yann LeCun) is an early example, are artificial neural networks where the connections between layers appear to be somewhat arbitrary. However, the synapses are set up the way they are to help reduce the number of parameters that need to be optimized. This is done by noting a certain symmetry in how the neurons are connected, so you can essentially “re-use” neurons to have identical copies without necessarily needing the same number of synapses. CNNs are commonly used in working with images thanks to their ability to recognize patterns in surrounding pixels. There is redundant information contained when you look at each individual pixel compared to its surrounding pixels, and you can actually compress some of this information thanks to their symmetrical properties.

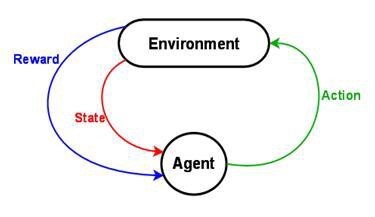

- Reinforcement Learning: Reinforcement learning is a generic term for the behavior that computers exhibit when trying to maximize a certain reward, which means that it is not in itself an artificial neural network architecture. However, you can apply reinforcement learning or genetic algorithms to build an artificial neural network architecture that you might not have thought to use before. An example of reinforcement learning is DeepMind teaching a program to master various Atari games.
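
Two ideas from the list above lend themselves to a short sketch: the recurrent hidden state that lets an RNN retain information, and the weight re-use that lets a CNN cut its parameter count. The code below is a deliberately tiny illustration. It assumes a single scalar recurrent unit with a tanh activation and a one-dimensional convolution rather than the two-dimensional kind used for images, and the function names and numeric values are hypothetical.

```python
import math


def rnn_forward(inputs, w_in, w_rec, bias):
    """Run one scalar recurrent unit over a sequence.

    h_t = tanh(w_in * x_t + w_rec * h_{t-1} + bias), so each state
    depends on the current input and on everything seen before it.
    """
    hidden = 0.0
    states = []
    for x in inputs:
        hidden = math.tanh(w_in * x + w_rec * hidden + bias)
        states.append(hidden)
    return states


def conv1d(signal, kernel):
    """Slide one shared kernel along the signal (valid positions only).

    Every output position re-uses the same len(kernel) weights instead
    of owning a separate weight for every input it is connected to.
    """
    width = len(kernel)
    return [
        sum(k * signal[start + i] for i, k in enumerate(kernel))
        for start in range(len(signal) - width + 1)
    ]


# Same final input (0.0), different history: the recurrent state differs.
with_history = rnn_forward([1.0, 0.0], w_in=1.0, w_rec=0.5, bias=0.0)
without_history = rnn_forward([0.0, 0.0], w_in=1.0, w_rec=0.5, bias=0.0)
print(with_history[1], without_history[1])

# A two-weight kernel that responds to changes between neighbouring values.
edges = conv1d([0.0, 0.0, 1.0, 1.0, 0.0, 0.0], [-1.0, 1.0])
print(edges)
```

In this example the convolution produces five outputs from six inputs with only two weights, whereas a fully connected layer of the same shape would need thirty.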

Neural networks now power a wide range of applications, including language translation, animal recognition, picture captioning and text summarization.

History of Neural Networks in Business[4]

The history of neural network development can be divided into five main stages, spanning over 150 years. These stages are shown in the figure below, where key research developments in computing and neural networks are listed along with evidence of the impact these developments had on the business community. The subdivision of this history into five stages is not the only viewpoint, and many other excellent reviews of historical developments have been written. The five stages proposed here, however, each reflect a change in the research environment and the resourcing and interests of business.

The five stages of neural network research development and their business impact.

source: Pergamon

Much of the preliminary research and development was achieved during Stage 1, which here is considered to be pre-World War II (i.e. prior to 1945). During this time most of the foundations for future neural network research were formed. The basic design principles of the Analytical Engine, the forerunner of the modern electronic computer, were devised by Charles Babbage in 1834.

Stage 2 is characterised by the age of computer simulation. In 1946, Wilkes designed the first operational stored-program computer. Over the ensuing years, the development of electronic computers progressed rapidly, and in 1954 General Electric Company became the first corporation to use a computer when they installed a UNIVAC I to automate the payroll system. The advances in computing enabled neural network researchers to experiment with their ideas, and in 1949 Donald Hebb wrote The Organization of Behaviour, where he proposed a rule to allow neural network weights to be adapted to reflect the learning process explored by Pavlov. By this stage the fields of artificial intelligence and neural networks were causing much excitement amongst researchers, and the general public was soon to become captivated by the idea of 'thinking machines'. In 1962, Bernard Widrow appeared on the US documentary program Science in Action and showed how his neural network could learn to predict the weather, blackjack, and the stock market. For the remainder of the 1960s this excitement continued to grow.

The third stage, from 1969 until 1982, is commonly called the 'quiet years'. During this time, however, there were significant developments in the computer industry. In 1971 the first microprocessor was developed by the Intel Corporation. Computers were starting to become more common in businesses worldwide, and several computer companies and software companies were formed during the mid-seventies. SPSS Inc. and Nestor Inc. in 1975 and Apple Computer Corporation in 1977 are a few examples of companies which formed then, and later became heavily involved in neural networks. In 1981, IBM introduced the IBM PC which brought computing power to businesses and households across the world. While these rapid developments in the computing industry were occurring, some researchers started looking at alternative neural network models which might overcome the limitations observed by Minsky and Papert. The concept of self-organisation in the human brain and neural network models was explored by Willshaw and von der Malsburg, and consolidated by Kohonen in 1982. This work helped to revive interest in neural networks, as did the efforts of Hopfield who was looking at the concepts of storing and retrieving memories. Thus, by the end of this third stage, research into neural networks had diversified, and was starting to look promising again.

The 4th Stage, from 1983 until 1990, is when neural network research blossomed. In 1983 the US government funded neural network research for the first time through the Defense Advanced Research Projects Agency (DARPA), providing testament to the growing feeling of optimism surrounding the field. An important breakthrough was then made in 1985 which considerably affected the future of neural networks. Backpropagation was discovered independently by two researchers, providing a learning rule for neural networks that overcame the limitations described by Minsky and Papert. Within years of its discovery the neural network field grew dramatically in size and momentum. Rumelhart and McClelland's (1986) book, Parallel Distributed Processing, became the neural network 'bible'. In 1987, the Institute of Electrical and Electronics Engineers (IEEE) held the 1st International Conference on Neural Networks, and these conferences have been held annually ever since.

In 1991, banks started to use neural networks to make decisions about loan applicants and speculate about financial prediction. This marks the start of the 5th Stage. Within a couple of years many neural network companies had been formed including Neuraltech Inc. in 1993 and Trajecta Inc. in 1995. Many of these companies produced easy-to-use neural network software containing a variety of architectures and learning rules. The impact on business was almost instantaneous. By 1996, 95% of the top 100 banks in the US were utilising intelligent techniques including neural networks. Within competitive industries like banking, finance, retail, and marketing, companies realised that they could use these techniques to help give them a 'competitive edge'. In 1998, IBM announced a company-wide initiative for the estimated $70 billion business intelligence market. Research during this 5th stage still continues, but it is now more industry driven. Now that the business world is becoming increasingly dependent upon intelligent techniques like neural networks to solve a variety of problems, new research problems are emerging. Researchers are now devising techniques for extracting rules from neural networks, and combining neural networks with other intelligent techniques like genetic algorithms, fuzzy logic and expert systems. As more complex business problems are tackled, more research challenges are created.

source: Michal Tkáč, Robert Verner

Criticism and Counters to Artificial Neural Network (ANN)[5]

Criticism

- Training issues: A common criticism of neural networks, particularly in robotics, is that they require too much training for real-world operation. Potential solutions include randomly shuffling training examples, using a numerical optimization algorithm that does not take overly large steps when changing the network connections following an example, and grouping examples in so-called mini-batches. Improving training efficiency and convergence has long been an active research area for neural networks. For example, by introducing a recursive least squares algorithm for the CMAC neural network, the training process only takes one step to converge.

- Theoretical issues: No neural network has solved computationally difficult problems such as the n-Queens problem, the travelling salesman problem, or the problem of factoring large integers. A fundamental objection is that they do not reflect how real neurons function. Backpropagation is a critical part of most artificial neural networks, although no such mechanism exists in biological neural networks. How information is coded by real neurons is not known. Sensor neurons fire action potentials more frequently with sensor activation, and muscle cells pull more strongly when their associated motor neurons receive action potentials more frequently. Other than the case of relaying information from a sensor neuron to a motor neuron, almost nothing of the principles of how information is handled by biological neural networks is known. This is a subject of active research in neural coding. The motivation behind artificial neural networks is not necessarily to strictly replicate neural function, but to use biological neural networks as an inspiration. A central claim of artificial neural networks is therefore that they embody some new and powerful general principle for processing information. Unfortunately, these general principles are ill-defined. It is often claimed that they are emergent from the network itself. This allows simple statistical association (the basic function of artificial neural networks) to be described as learning or recognition. Alexander Dewdney commented that, as a result, artificial neural networks have a "something-for-nothing quality, one that imparts a peculiar aura of laziness and a distinct lack of curiosity about just how good these computing systems are. No human hand (or mind) intervenes; solutions are found as if by magic; and no one, it seems, has learned anything". Biological brains use both shallow and deep circuits as reported by brain anatomy, displaying a wide variety of invariance. Weng argued that the brain self-wires largely according to signal statistics and therefore, a serial cascade cannot catch all major statistical dependencies.

- Hardware issues: Large and effective neural networks require considerable computing resources. While the brain has hardware tailored to the task of processing signals through a graph of neurons, simulating even a simplified neuron on a von Neumann architecture may compel a neural network designer to fill many millions of database rows for its connections, which can consume vast amounts of memory and storage. Furthermore, the designer often needs to transmit signals through many of these connections and their associated neurons, which often demands enormous CPU processing power and time. Schmidhuber notes that the resurgence of neural networks in the twenty-first century is largely attributable to advances in hardware: from 1991 to 2015, computing power, especially as delivered by GPGPUs (on GPUs), has increased around a million-fold, making the standard backpropagation algorithm feasible for training networks that are several layers deeper than before. The use of accelerators such as FPGAs and GPUs can reduce training times from months to days. Neuromorphic engineering addresses the hardware difficulty directly, by constructing non-von-Neumann chips to directly implement neural networks in circuitry. Another chip optimized for neural network processing is called a Tensor Processing Unit, or TPU.
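
The training remedies named in the first bullet above (random shuffling, modest step sizes, and mini-batches) can be sketched as one training loop. To keep the example short it fits a single linear unit rather than a full network. The helper names `minibatches` and `sgd_epoch`, the learning rate, the batch size, and the synthetic data (points on the line y = 2x + 1) are all assumptions made for illustration.

```python
import random


def minibatches(examples, batch_size, rng):
    """Yield the examples in a fresh random order, grouped into small batches."""
    shuffled = examples[:]
    rng.shuffle(shuffled)
    for start in range(0, len(shuffled), batch_size):
        yield shuffled[start:start + batch_size]


def sgd_epoch(examples, weight, bias, lr, batch_size, rng):
    """One pass over the data, taking a small gradient step after each batch."""
    for batch in minibatches(examples, batch_size, rng):
        # Average the squared-error gradient over the batch to smooth the update.
        grad_w = sum((weight * x + bias - y) * x for x, y in batch) / len(batch)
        grad_b = sum((weight * x + bias - y) for x, y in batch) / len(batch)
        # A modest learning rate keeps each change to the weights small.
        weight -= lr * grad_w
        bias -= lr * grad_b
    return weight, bias


# Synthetic, noise-free data on the line y = 2x + 1 (an assumption for the demo).
EXAMPLES = [(k / 10.0, 2.0 * (k / 10.0) + 1.0) for k in range(20)]

rng = random.Random(0)  # fixed seed so the shuffles are repeatable
weight, bias = 0.0, 0.0
for _ in range(500):
    weight, bias = sgd_epoch(EXAMPLES, weight, bias, lr=0.1, batch_size=4, rng=rng)
print(weight, bias)  # should end up close to 2 and 1
```

Reshuffling at every epoch stops the model from seeing the examples in the same order each time, and averaging over a batch makes each update less sensitive to any single example.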

Practical counter examples to criticisms

Arguments against Dewdney's position are that neural networks have been successfully used to solve many complex and diverse tasks, ranging from autonomously flying aircraft to detecting credit card fraud to mastering the game of Go.

Technology writer Roger Bridgman commented: "Neural networks, for instance, are in the dock not only because they have been hyped to high heaven, (what hasn't?) but also because you could create a successful net without understanding how it worked: the bunch of numbers that captures its behaviour would in all probability be 'an opaque, unreadable table...valueless as a scientific resource'. In spite of his emphatic declaration that science is not technology, Dewdney seems here to pillory neural nets as bad science when most of those devising them are just trying to be good engineers. An unreadable table that a useful machine could read would still be well worth having."

Although it is true that analyzing what has been learned by an artificial neural network is difficult, it is much easier to do so than to analyze what has been learned by a biological neural network. Furthermore, researchers involved in exploring learning algorithms for neural networks are gradually uncovering general principles that allow a learning machine to be successful, for example local versus non-local learning and shallow versus deep architectures.

Hybrid approaches: Advocates of hybrid models (combining neural networks and symbolic approaches) claim that such a mixture can better capture the mechanisms of the human mind.

See Also

Artificial Intelligence (AI)

Artificial General Intelligence (AGI)

Deep Learning

Cognitive Computing

Human Computer Interaction (HCI)

Human-Centered Design (HCD)

Machine-to-Machine (M2M)

Machine Learning

Predictive Analytics

Data Analytics

Data Analysis

Big Data

References

- Definition: What does Artificial Neural Network (ANN) mean? Digital Trends

- How do artificial neural networks work? Forbes

- What are the different kinds of Artificial Neural Networks (ANN)? Aaron @josh.ai

- History of Neural Networks in Business Pergamon

- Criticism and Counters to Artificial Neural Network (ANN) Wikipedia

Further Reading

- Artificial Neural Networks Technology U Toronto

- How does Artificial Neural Network (ANN) algorithm work? Simplified! Tavish Srivastava

- A Beginner's Guide to Neural Networks and Deep Learning Skymind.AI

- How to Make an Artificial Neural Net With DNA IEEE Spectrum

- Energy analysis of a building using artificial neural network: A review Rajesh Kumar, R.K. Aggarwal, J.D. Sharma

- An implementation of artificial neural-network potentials for atomistic materials simulations: Performance for TiO2 Nongnuch Artrith, Alexander Urban